Welcome to the Data Systems Group

The Data Systems Group at the University of Waterloo's Cheriton School of Computer Science builds innovative, high-impact platforms, systems, and applications for processing, managing, analyzing, and searching the vast collections of data that are integral to modern information societies. These are colloquially known as “big data” technologies.

Our capabilities span the full spectrum from unstructured text collections to relational data, and everything in between, including semi-structured sources such as time series, log data, and graphs. We work at multiple layers in the software stack, ranging from storage management and execution platforms to user-facing applications and studies of user behaviour.

Our research tackles all phases of the information lifecycle, from ingest and cleaning to inference and decision support.

News

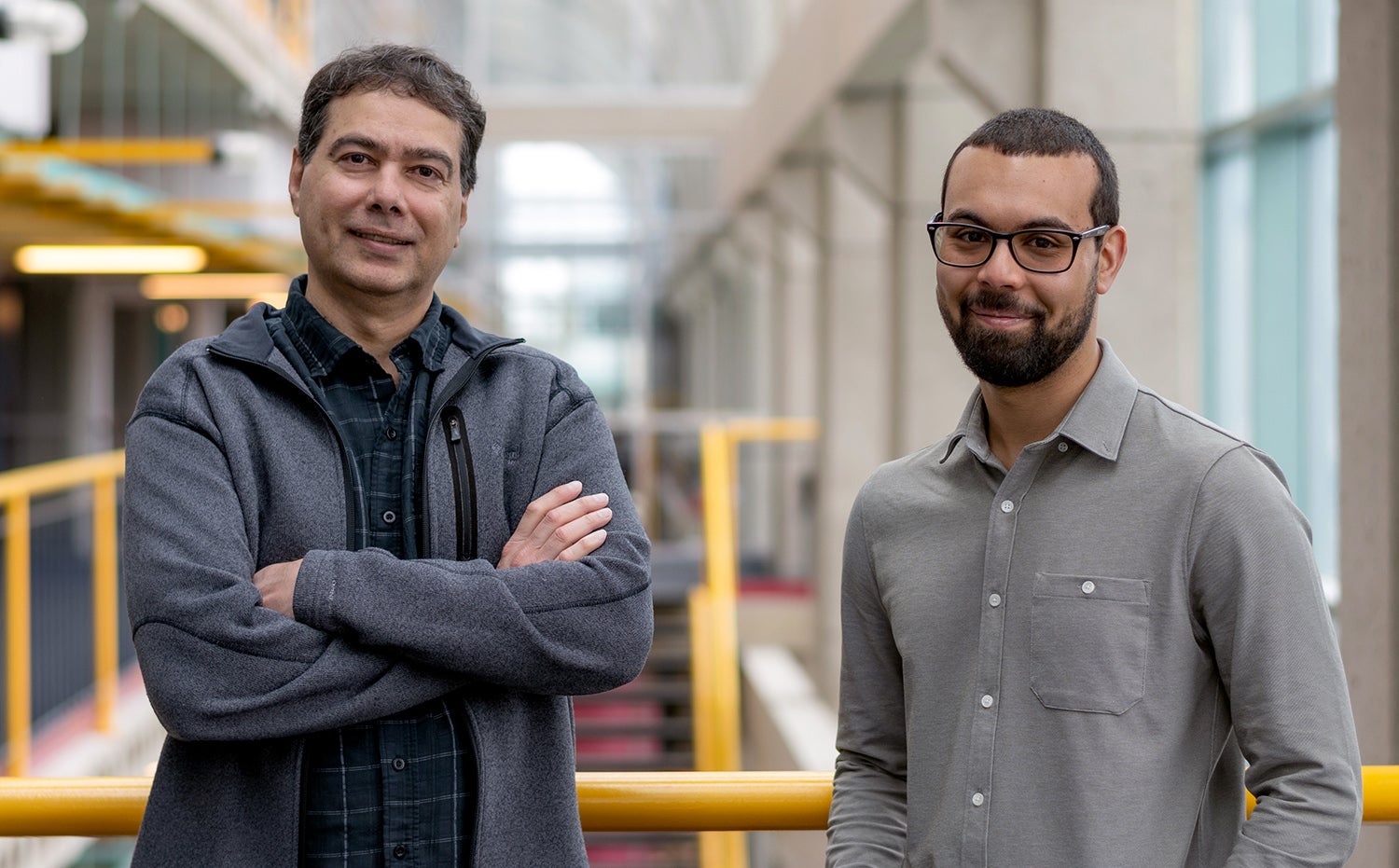

- Mar. 14, 2024: M. Tamer Özsu, founding member of the Data Systems Group, receives 2024 IEEE TCDE Education Award

University Professor M. Tamer Özsu has received the 2024 IEEE Technical Committee on Data Engineering Education Award for his fundamental contributions to data management and data science pedagogy. One of four prestigious annual awards conferred by IEEE TCDE, the Education Award recognizes database researchers who have made an impact on data engineering education, including impact on the next generation of data engineering practitioners and researchers.

- Nov. 16, 2023: Renée J. Miller named Canada Excellence Research Chair in Data Intelligence, will bring expertise in data science to the School’s world-class Data Systems Group

Professor Renée J. Miller has been named the Canada Excellence Research Chair in Data Intelligence. She is currently a University Distinguished Professor at Khoury College of Computer Science at Northeastern University. She will be joining the University of Waterloo in June 2024 as the Cheriton School of Computer Science’s first Canada Excellence Research Chair, bringing her expertise in data science to the School’s world-class Data Systems Group.

- May 18, 2023: DSG graduate Michael Abebe receives Cheriton Distinguished Dissertation Award

Recent PhD graduate Michael Abebe has received the 2023 Cheriton Distinguished Dissertation Award. Now in its fifth year, the award was established to recognize excellence in computer science doctoral research. In addition to the recognition, recipients receive a cash prize of $1,000.