Introduction

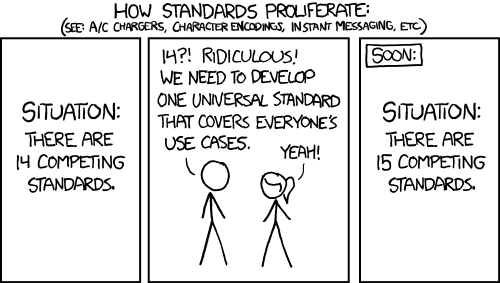

Over the summer, NetLab’s development team is working to contribute to a data integration tool to facilitate research which combines disparate datasets. Before beginning our development work, we did some research into what is currently available. We found a few competing tools, though we felt none of them fully met our goals. However, a python tool called recordlinkage shows significant potential. So, taking a page from xkcd (see the comic below), we have decided to build on top of recordlinkage rather than develop our own competing tool.

This tutorial is meant to introduce features currently implemented in recordlinkage as well as some of the added functionality the NetLab developers have begun to implement. This notebook applies recordlinkage to some of the problems faced by our IDI dataset. First, we will use recordlinkage to assign location information to universities within our dataset. Second, we will use a similar process to find and merge duplicate entities in our IDI dataset.

Before you begin

Before running through this notebook you should make sure you have the necessary python packages installed on your machine: pandas and recordlinkage. You can install pandas by running: pip install pandas in terminal. Similarly, run: pip install recordlinkage in terminal to install the recordlinkage package.

Define functions

Below are some preliminary functions that the NetLab developers have defined to build on the functionality provided by recordlinkage. Additional and up-to-date functions can be found in NetLab’s labutils repository on GitHub.

def fuse(dfA, dfB, dfmatches, authoritative="dfA"):

newDF = pd.DataFrame()

for row in dfmatches.iterrows():

indexA = row[0][0]

DictA = dfA.loc[indexA].to_dict()

indexB = row[0][1]

DictB = dfB.loc[indexB].to_dict()

newDict = {}

if authoritative == "dfB":

for item in DictA:

newDict[item] = DictA[item]

for item in DictB:

newDict[item] = DictB[item]

elif authoritative == "dfA":

for item in DictB:

newDict[item] = DictB[item]

for item in DictA:

newDict[item] = DictA[item]

newSeries = newDict

newDF = newDF.append(newSeries, ignore_index=True)

return newDF

def left_fuse(dfA, dfB, dfmatches):

# Keeps all the rows in dfA, matches each to at most one item in dfB

newDF = dfA.copy()

columns = dfB.columns.values

for col in columns:

newDF[col] = newDF.apply(lambda _: '', axis=1)

for row in dfmatches.iterrows():

indexA = row[0][0]

indexB = row[0][1]

for col in columns:

newDF.loc[indexA][col] = dfB.loc[indexB][col]

return newDF

def deduplicate(df, dfmatches, columns_append=[]):

newDF = df.copy()

for row in dfmatches.iterrows():

indexA = row[0][0]

indexB = row[0][1]

for col in columns_append:

old_set = set(newDF.loc[indexA][col].replace("[", "")

.replace("]", "")

.replace("'", "")

.split(", "))

new_set = set(df.loc[indexB][col].replace("[", "")

.replace("]", "")

.replace("'", "")

.split(", "))

old_set = old_set.union(new_set)

newDF.loc[indexA][col] = str(list(old_set))

newDF = newDF.drop(indexB) # The dropping is going to be problematic with triplicates, etc.

return newDF

def show_duplicates(df, dfmatches):

newDF = pd.DataFrame()

for row in dfmatches.iterrows():

indexA = row[0][0]

DictA = df.loc[indexA].to_dict()

indexB = row[0][1]

DictB = df.loc[indexB].to_dict()

newDict = {}

for item in DictA:

col_name = "A_" + item

newDict[col_name] = DictA[item]

for item in DictB:

col_name = "B_" + item

newDict[col_name] = DictB[item]

newSeries = newDict

newDF = newDF.append(newSeries, ignore_index=True)

return newDF

Task 1 — Assign countries

First, we are going to link the idi_table and university datasets together in an attempt to find country data for institutions in our IDI dataset. To do this, we will compare the "Full Name" variable from the idi_table dataset with the "name1", "name2", and "name3" variables from the university dataset.

To begin, we read in the idi_table data and use recordlinkage’s standardise module to quickly clean the dataset. Then, for the sake of time, we will filter the data to only include those entities that begin with the letter ‘z’. The first five rows from this dataset are shown below.

import pandas as pd

import recordlinkage as rl

from recordlinkage.standardise import clean

# ************

# Get IDI Data

# ************

# Load the idi data

idi = pd.read_csv("idi_table.csv", encoding='latin-1')

# Clean the Full Name column (removes brackets, lowercases, removes accents, etc)

idi["Full Name"] = clean(idi["Full Name"], remove_brackets=False, strip_accents='ascii')

# Filter the data to only include entities that begin with z

# (For the sake of time)

z_idi = idi[idi['Full Name'] > "z"]

| index | Full Name |

|---|---|

| 494 | zurich university hospital |

| 559 | zagazig university |

| 648 | zentrum innere medizin und deutsches zentrum |

| 1237 | zhejiang academy of agriculture sciences |

| 1253 | zambia emory hiv research program |

Next, we can read in the university data and once again use the standardise module to clean the variables of interest. We will also filter the dataset to only include universities that begin with the letter ‘z’. The first five rows from this dataset are shown below.

# *******************

# Get University Data

# *******************

# Read in University Data

uni = pd.read_csv("universities.csv")

# clean the data (alternative names are included)

uni["name1"] = clean(uni["name1"])

uni["name2"] = clean(uni["name2"])

uni["name3"] = clean(uni["name3"])

# Filter the data to only include universities that begin with z

# (For the sake of time)

z_uni = uni[uni['name1'] > "z"]

| index | name1 | name2 | name3 | addr1 | addr2 | addr3 | addr4 | pop |

|---|---|---|---|---|---|---|---|---|

| 117 | zsigmond kirly foiskola | king sigismund college | none | Kelta u.2. | Budapest | 1139 Budapest | Hungary | “1,000,000-5,000,000” |

| 178 | ziauddin university | none | none | “4/B, Shahra-e-Ghalib, Block-6” | Karachi | 75600 Sindh | Pakistan | “over 5,000,000” |

| 204 | zhaotong university | none | “Yunnan Road, Zhaoyang District” | Zhaotong | 657000 Yunnan | China | “over 5,000,000” | |

| 393 | zyegin niversitesi | zyegin university | none | Kusbakisi Cad. No 2 | Istanbul | 34662 Istanbul | Turkey | “over 5,000,000” |

| 409 | zhejiang normal university | none | None | Jinhua | 524088 Zhejiang | China | “1,000,000-5,000,000” |

Once both datasets have been read in, we will use recordlinkage to index the data. This essentially creates candidate pairs that we can later evaluate. In this case, because each of our datasets is relatively small we will use a full index. This will result an index with all possible pairs of records.

# *****

# Index

# *****

# Find all possible entity/university combinations

# Note: this uses recordlinkage 0.9's updated interface

pairs = rl.FullIndex()

pairs = pairs.index(z_idi, z_uni)

Once we have a list of pairs we want to compare, we can go ahead and perform our comparisons. The code block below shows how built-in recordlinkage functionality can be used to do this. Upcoming tutorials will explore how to create your own custom comparison methods, but for now we will just stick with the built-in ones.

Then, once the comparisons have been made we will classify our comparisons to get a list of matches. The code below uses a really simplistic method of filter, where if any of the comparisons resulted in a score higher than 0.75 we will classify it as a match. Other more complicated classification methods exist and some machine learning approaches are also built-in to recordlinkage.

# *******

# Compare

# *******

# Compare the entity name with the primary university name

compare_c1 = rl.Compare(pairs, z_idi, z_uni)

compare_c1.string("Full Name", "name1", name="Name1")

compare_c1.string("Full Name", "name2", name="Name2")

compare_c1.string("Full Name", "name3", name="Name3")

# ********

# Classify

# ********

# Compile list of matched pairs

matches = compare_c1.vectors[compare_c1.vectors.max(axis=1) > 0.75]

matches = matches.sort_values("Name1")

Now that we have a list of matches we can fuse our dataset. The code block below uses one of the functions defined at the beginning of the document to fuse the datasets together. The fusion below results in a table where each IDI record has been kept. Then, the information from the highest scoring match for each record is added to the table. Those records who did not have a match are still included, but their additional columns are left blank. Five rows of the fused dataset are shown below.

# ****

# Fuse

# ****

# Use a RAD developed function to fuse the data (keeps all rows in z_idi)

fused = left_fuse(z_idi, z_uni, matches) #fuse

fused = fused[["Full Name", "name1", "addr1", "addr2", "addr3", "addr4"]] #select columns

| index | Full Name | name1 | addr1 | addr2 | addr3 | addr4 |

|---|---|---|---|---|---|---|

| 2617 | zambia emory hiv research project | |||||

| 3241 | zhejiang university | zhejiang university | “38 Zheda Road, Xihu” | Hangzhou | 310027 Zhejiang | China |

| 4468 | zhongkai university of agriculuture | |||||

| 6863 | zahedan university of medical sciences | zahedan university of medical sciences | None | Zahedan | 98135 Sistan and Baluchestan | Iran |

| 559 | zagazig university | zagazig university | None | Zagazig | 44519 Sharqia | Egypt |

Task 2 — Find duplicate entities

Second, we are going to work on finding duplicate entities within a subset of the IDI dataset. We will use the dataset’s “Full Name” column, which contains named entities which were identified by Stanford’s NLP toolkit.

To begin, we will read in the IDI data from its csv and use recordlinkage’s standardise module to clean the dataset. Then, in the interest of time we will filter the data to only include entities which begin with the letter ‘a’. The first five rows of this dataset are shown below.

# ************

# Get IDI Data

# ************

# Read in the idi data from the csv

idi = pd.read_csv("idi_table.csv", encoding='latin-1')

# Clean the idi data

idi["Full Name"] = clean(idi["Full Name"], remove_brackets=False, strip_accents='ascii')

# Filter the data to only include entities that begin with a

a_idi = idi[idi['Full Name'] > "a"]

a_idi = idi[idi['Full Name']

| index | Full Name |

|---|---|

| 52 | ahvaz jundishapur university of medical sciences |

| 62 | animal et gestion integree des risques |

| 86 | anhui province hospital affiliated anhui medical university |

| 100 | aberdeen university |

| 109 | arabian gulf university |

Since we are doing deduplication, we will only need to read in a single dataset. Despite this, recordlinkage operates in essentially the same way as we saw previously. The following code block indexes the data, but does so using the sorted neighbourhood method. To read about the differences between a full index and sorted neighbourhood index, read recordlinkage’s documentation.

# *****

# Index

# *****

pairs = rl.SortedNeighbourhoodIndex(on="Full Name", window=5)

pairs = pairs.index(a_idi)

Once again, following indexing, we will compare our pairs and then classify the results. The process here follows the same steps we used in Task 1 above. The only major differences are that we will use a threshold of 0.9 for match classification and we will make use of the show_duplicates() function to display the pairs classified as matches. This table is shown below.

# *******

# Compare

# *******

# Compare entity names with one another

compare_c2 = rl.Compare(pairs, a_idi, a_idi) #set up compare object

compare_c2.string("Full Name", "Full Name", name="Full Name") #compare

# *****

# Match

# *****

# Compile list of matched pairs, using threshold of 0.9

matches = compare_c2.vectors[compare_c2.vectors.sum(axis=1)>0.9].sort_index() # You can play with the threshold (0.9)

# Print out the matches found

show_matches = show_duplicates(a_idi, matches)

show_matches[['A_Full Name', 'B_Full Name']]

| index | A_Full Name | B_Full Name |

|---|---|---|

| 0 | ahvaz jundishapur university of medical sciences | ahvaz jondishapur university of medical sciences |

| 1 | anhui academy of agricultural sciences | anhui academy of agriculture sciences |

| 2 | albert ludwigs university of freiburg | albert ludwigs university freiburg |

| 3 | advanced science and technology | advanced science and biotechnology |

| 4 | 309th hospital of chinese people s liberation army | 307th hospital of chinese people s liberation army |

| 5 | applied life sciences | applied life sciences |

| 6 | awadesh pratap singh university | awadhesh pratap singh university |

| 7 | affiliated zhongshan hospital of fudan university | affiliated zhongshan hospital of dalian university |

| 8 | alpert school of medicine of brown university | alpert school of medicine brown university |

| 9 | australia school of biological sciences | australia school of biomedical sciences |

| 10 | aix marseille universite um | aix marseille universite um2 |

| 11 | academy of scientific and innovative research | academy of scientific innovative research |

| 12 | agriculture organization of the united nations | agriculture organization of the united nations fao |

| 13 | agricultural research service | agricultural research service |

| 14 | aids programme of research south africa | aids program of research south africa |

Next, we will actually go about deduplicating the dataset. This uses the deduplicate() function included at the beginning of this tutorial. Note this function is very much a work in progress and won’t work seamlessly with other datasets. The code to perform this is included below.

# ***********

# Deduplicate

# ***********

# Use a RAD developed function to merge duplicates.

# Authors, PMID, City, Country, and Type are aggregated into a single list.

deduplicated = deduplicate(a_idi, matches)

Summary

That concludes the overview of recordlinkage and how it might apply to data of interest to academic labs such as NetLab. Look out for new tutorials introducing the different aspects of recordlinkage, from cleaning, to classifying, to fusing.