Design Team Members: Leila Fiouzi

Supervisors: Prof. P. Fieguth, Prof. H. Corporaal

Background

Transport Triggered Architectures (TTAs) are highly modular, flexible and scalable processor architectures. These characteristics allow for easy design reuse, since functional units can be added to or removed from an existing design. The flexible, parallel processor framework of TTAs makes them well suited to embedded processors and to data processing and communication applications that have limited but specialized processing requirements, such as Neural Networks (NN).

TTAs are based on instruction-level parallelism and are programmed by specifying data transports instead of operations. Instead of multiple instructions, each specifying a different operation to be executed, there is only one kind of instruction: a move to the functional unit that executes the desired operation. This gives TTAs a modular structure composed of Functional Units (FUs) that are easy to add and remove, since they do not depend on the instruction set.
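The move-based programming model described above can be illustrated with a small software sketch. Everything in it is an assumption made for illustration: the `AdderFU`, `Port`, `Move` and `Machine` names are hypothetical, and the sketch models only the idea that writing to a trigger port starts the operation. It is not the MOVE Framework's actual instruction format or tooling.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical adder FU: an operand port, a trigger port and a result port.
struct AdderFU {
    std::int32_t operand = 0;  // latched by a move to the operand port
    std::int32_t result = 0;   // readable by later moves

    void write_operand(std::int32_t v) { operand = v; }
    // The transport itself triggers the operation: no "add" opcode exists.
    void write_trigger(std::int32_t v) { result = operand + v; }
};

// Illustrative set of move sources and destinations.
enum class Port { R0, R1, R2, AddOperand, AddTrigger, AddResult };

// The only instruction kind: move a value from one port to another.
struct Move {
    Port src;
    Port dst;
};

struct Machine {
    std::int32_t regs[3] = {0, 0, 0};
    AdderFU add;

    std::int32_t read(Port p) const {
        switch (p) {
            case Port::R0: return regs[0];
            case Port::R1: return regs[1];
            case Port::R2: return regs[2];
            case Port::AddResult: return add.result;
            default: assert(false && "port is write-only"); return 0;
        }
    }

    void write(Port p, std::int32_t v) {
        switch (p) {
            case Port::R0: regs[0] = v; break;
            case Port::R1: regs[1] = v; break;
            case Port::R2: regs[2] = v; break;
            case Port::AddOperand: add.write_operand(v); break;
            case Port::AddTrigger: add.write_trigger(v); break;
            default: assert(false && "port is read-only");
        }
    }

    // Execute a program that consists purely of data transports.
    void run(const std::vector<Move>& program) {
        for (const Move& m : program) write(m.dst, read(m.src));
    }
};
```

In this sketch, computing `R2 = R0 + R1` takes three moves: `R0 -> AddOperand`, `R1 -> AddTrigger`, `AddResult -> R2`. Adding another FU would mean adding ports, not new instruction types.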

The MOVE Framework, developed by the computer architecture group at the Delft University of Technology, the Netherlands, takes the concept of TTAs one step further. It proposes a methodology, complete with a set of proprietary tools, that uses a C/C++ description of a proposed application to semi-automatically generate a processor framework. This framework can then be optimized and used to implement a co-processor or embedded processor for the proposed application.

Project description

Up to now, no TTA-based processor has been applied to NN computations. This project attempts to change the status quo by implementing a TTA-based processor that speeds up the computations of a Kohonen Self-organizing Feature Map (KSFM) performing vector quantization of grayscale images.

Vector Quantization (VQ) is a widely used method in signal compression. It performs this compression by mapping a sequence of continuous or discrete signals into a digital sequence suitable for communication over, or storage in, a digital channel. This mapping is done by representing (encoding) each signal vector with the index of one of a finite set of representative vectors, or codevectors, that are stored in a collection called the codebook. The receiver, which is assumed to have a copy of the codebook, decodes the encoded image by substituting each index it receives with the corresponding vector from the codebook.

VQ achieves a low bit rate in the digital representation of signals while keeping the loss in signal quality to a minimum. It is composed of two parts: codebook generation and signal encoding. The codebook generation part trains the quantizer (i.e. generates the codebook) by taking a sequence of vectors from a set of one or more training images. The training images should be chosen such that they are a good representation of the cross-section of images that will be coded with that quantizer in the future. In other words, the quantizer should be well matched to the images it will later encode. The encoding part simply takes a new image and encodes it using the previously generated codebook.
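The encoding and decoding steps described above can be sketched in a few lines of C++. This is a minimal illustration and not the project's actual model: the names `squared_distance`, `encode` and `decode` are hypothetical, and the squared Euclidean distance is an assumed distortion measure, since the text does not name one.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Vector = std::vector<double>;
using Codebook = std::vector<Vector>;  // one codevector per index

// Assumed distortion measure: squared Euclidean distance.
double squared_distance(const Vector& a, const Vector& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Encoder: replace a signal vector by the index of its nearest codevector.
std::size_t encode(const Vector& x, const Codebook& codebook) {
    assert(!codebook.empty());
    std::size_t best = 0;
    double best_dist = std::numeric_limits<double>::infinity();
    for (std::size_t k = 0; k < codebook.size(); ++k) {
        const double d = squared_distance(x, codebook[k]);
        if (d < best_dist) {
            best_dist = d;
            best = k;
        }
    }
    return best;
}

// Decoder: look the received index up in the shared codebook.
const Vector& decode(std::size_t index, const Codebook& codebook) {
    return codebook.at(index);
}
```

Only the index travels over the channel, so the bit rate depends on the codebook size rather than on the dimension of the signal vectors.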

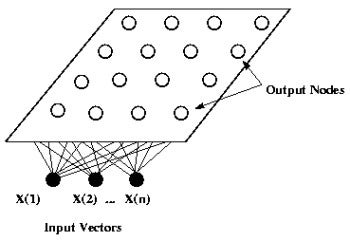

Artificial Neural Networks (ANN) are massively parallel computing structures that combine a number of non-linear computational units, or neurons. They usually require large parallel and distributed processing units due to their high demand for parallel computation. KSFMs are a subclass of ANNs that are highly suitable for implementing vector quantization because they are unsupervised. This means that they use the statistical information contained within the input data set to cluster it, in other words, to generate a codebook. The codebook is manifested in the weights of the neurons, so a codebook of L levels requires a KSFM with L neurons. The neurons in a KSFM are arranged in planar grids of any shape and have mutual lateral connections defined by means of what is called a neighbourhood function. These lateral connections allow the KSFM to perform nearest-neighbour classification. A general KSFM is shown below.

Design methodology

This project uses a KSFM with 64 neurons and 16 inputs. The input vectors are 4x4 pixel blocks (hence 16-dimensional input vectors) of a 256x256 grayscale image, and each one is fed in parallel to all the neurons. The network uses the KSFM algorithm with a variable learning rate and neighbourhood function to do the clustering, that is, to train itself. During the encoding stage the network simply uses the weights to determine the distance between the input vector and each codeword. The neuron with the smallest distance is the winning neuron, and the input vector is coded as its index.
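One training step of such a network might look like the sketch below. The 64 neurons and 16 inputs come from the text; everything else is an assumption made for illustration: the 8x8 grid layout, the Gaussian neighbourhood function, the exponential decay schedule, and the names `find_winner`, `train_step` and `decayed`. The project's own model may differ on all of these.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

constexpr std::size_t kInputs = 16;    // 4x4 pixel block (from the text)
constexpr std::size_t kNeurons = 64;   // codebook size (from the text)
constexpr std::size_t kGridWidth = 8;  // assumption: neurons on an 8x8 grid

using Block = std::array<double, kInputs>;
using Weights = std::vector<Block>;  // one weight vector (codeword) per neuron

// Winner = neuron whose weights are closest to the input (squared Euclidean).
std::size_t find_winner(const Weights& w, const Block& x) {
    assert(!w.empty());
    std::size_t best = 0;
    double best_dist = std::numeric_limits<double>::infinity();
    for (std::size_t n = 0; n < w.size(); ++n) {
        double dist = 0.0;
        for (std::size_t i = 0; i < kInputs; ++i) {
            const double d = x[i] - w[n][i];
            dist += d * d;
        }
        if (dist < best_dist) {
            best_dist = dist;
            best = n;
        }
    }
    return best;
}

// Pull the winner and its grid neighbours towards the input vector.
// Assumed neighbourhood function: Gaussian of the grid distance to the winner.
void train_step(Weights& w, const Block& x, double learning_rate, double radius) {
    assert(radius > 0.0);
    const std::size_t winner = find_winner(w, x);
    const double win_row = static_cast<double>(winner / kGridWidth);
    const double win_col = static_cast<double>(winner % kGridWidth);
    for (std::size_t n = 0; n < w.size(); ++n) {
        const double dr = static_cast<double>(n / kGridWidth) - win_row;
        const double dc = static_cast<double>(n % kGridWidth) - win_col;
        const double h = std::exp(-(dr * dr + dc * dc) / (2.0 * radius * radius));
        for (std::size_t i = 0; i < kInputs; ++i) {
            w[n][i] += learning_rate * h * (x[i] - w[n][i]);
        }
    }
}

// Assumed schedule for the variable learning rate and neighbourhood radius.
double decayed(double initial, double step, double time_constant) {
    return initial * std::exp(-step / time_constant);
}
```

Encoding after training needs only `find_winner`: the returned index is the code for the block. The distance loop over all neurons is the part that is naturally parallel.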

The design stages consist of developing a method for extracting and representing the input vectors from the original image, developing a detailed C/C++ model of the KSFM, and using this model to design and optimize the required FUs. The hardware design stage has to deal with issues such as the type and optimal number of FUs required per processor, the interconnection and pipelining of FUs, and the need for a central control unit to synchronize the operation of the processors when more than one is used.
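The first design stage, extracting the input vectors from the image, could be sketched as follows. The image layout is an assumption: 8-bit pixels stored row by row in a flat buffer. The name `extract_blocks` is hypothetical, and the raster order of blocks and of pixels within a block is just one possible representation.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kBlockSide = 4;  // 4x4 pixel blocks (from the text)

using PixelBlock = std::array<std::uint8_t, kBlockSide * kBlockSide>;

// Split a row-major grayscale image into non-overlapping 4x4 blocks.
// Blocks are emitted left to right, top to bottom; pixels inside a block
// are stored row by row, giving one 16-dimensional vector per block.
std::vector<PixelBlock> extract_blocks(const std::vector<std::uint8_t>& image,
                                       std::size_t width, std::size_t height) {
    assert(image.size() == width * height);
    assert(width % kBlockSide == 0 && height % kBlockSide == 0);

    std::vector<PixelBlock> blocks;
    blocks.reserve((width / kBlockSide) * (height / kBlockSide));
    for (std::size_t by = 0; by < height; by += kBlockSide) {
        for (std::size_t bx = 0; bx < width; bx += kBlockSide) {
            PixelBlock block{};
            for (std::size_t r = 0; r < kBlockSide; ++r) {
                for (std::size_t c = 0; c < kBlockSide; ++c) {
                    block[r * kBlockSide + c] = image[(by + r) * width + (bx + c)];
                }
            }
            blocks.push_back(block);
        }
    }
    return blocks;
}
```

For a 256x256 image this yields 64 x 64 = 4096 input vectors, each of which is presented to all 64 neurons.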

Various research papers, books and open-source, public-domain software packages have been and are being used in this project. A complete bibliographical index is not possible in this space, but the following links provide a sample of what was or will be used.