Design team members: Jimmy Fan, Rebecca Wong, Selwyn Yuen

Supervisor: Professor M. Kamel

Background

The existing robots in the PAMI (Pattern Analysis and Machine Intelligence) lab all require a remote computer to command their movement through execution of a script or program. With more computational power available on the robot itself, it should be possible for commands to be accepted and executed directly on the robot, making it autonomous. In this case, an intuitive and natural interface such as voice or gesture recognition is highly desirable. There is a wide variety of possible applications for such a voice-controlled autonomous robot. The advantages become more obvious when dealing with a team of robots: instead of programming the robots one at a time, one can simply speak aloud and the commands reach the whole group simultaneously.

Project description

The robot available for this project is the Magellan Pro, manufactured by iRobot Corporation. It has basic locomotion abilities such as “move forward” and “rotate”, and is equipped with proximity sensors. The project will provide the robot with voice command capability and higher-level navigation functions such as non-trivial traversal patterns with obstacle avoidance. Each new layer of the robot architecture will be designed to be scalable to allow future enhancement by PAMI researchers.

Design methodology

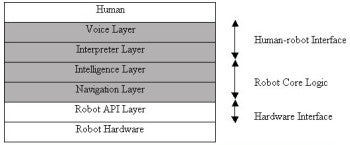

Preliminary high-level design indicates that the voice-controlled robot requires four new architectural layers to be built: the voice layer, interpreter layer, intelligence layer, and navigation layer. These are the shaded layers shown in Figure 1. The unshaded layers, which include the robot API and hardware layers, already exist and will come with the robot.
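As a rough illustration of how the four new layers might pass a command down to the existing robot API, the sketch below wires them together in Python. Everything in it is an assumption made for illustration: the class names, the keyword vocabulary, the `FakeRobotAPI` stand-in, and the "rotate 90 degrees when blocked" rule are hypothetical and are not taken from the project or from the Magellan Pro software. The voice layer is a placeholder that receives text rather than audio.

```python
from dataclasses import dataclass, field


@dataclass
class FakeRobotAPI:
    """Hypothetical stand-in for the existing robot API layer."""
    log: list = field(default_factory=list)
    obstacle_ahead: bool = False  # would come from proximity sensors

    def move_forward(self, metres: float) -> None:
        self.log.append(("move_forward", metres))

    def rotate(self, degrees: float) -> None:
        self.log.append(("rotate", degrees))


class NavigationLayer:
    """Turns primitive steps into robot API calls."""

    def __init__(self, api):
        self.api = api

    def execute(self, steps):
        for name, value in steps:
            if name == "move_forward":
                if self.api.obstacle_ahead:
                    # Simplistic avoidance (assumed): turn instead of driving on.
                    self.api.rotate(90.0)
                else:
                    self.api.move_forward(value)
            elif name == "rotate":
                self.api.rotate(value)


class IntelligenceLayer:
    """Expands a high-level command into primitive steps."""

    def plan(self, command):
        if command == "square":
            return [("move_forward", 1.0), ("rotate", 90.0)] * 4
        if command == "forward":
            return [("move_forward", 1.0)]
        if command == "turn":
            return [("rotate", 90.0)]
        return []  # unknown or missing command: do nothing


class InterpreterLayer:
    """Maps recognised text to a command name (assumed vocabulary)."""
    KEYWORDS = {"square": "square", "forward": "forward", "turn": "turn"}

    def interpret(self, text):
        for word in text.lower().split():
            if word in self.KEYWORDS:
                return self.KEYWORDS[word]
        return None


class VoiceLayer:
    """Placeholder: a real layer would run speech recognition on audio."""

    def recognise(self, utterance: str) -> str:
        return utterance.strip()


def handle_utterance(utterance, api):
    """Pass one utterance through all four layers, top to bottom."""
    text = VoiceLayer().recognise(utterance)
    command = InterpreterLayer().interpret(text)
    steps = IntelligenceLayer().plan(command)
    NavigationLayer(api).execute(steps)
    return command
```

For example, calling `handle_utterance("Please move forward", FakeRobotAPI())` would record a single `move_forward` call on the stand-in API. Keeping each layer behind a small interface like this is one way to let a layer be replaced or extended later without touching the others.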

The development methodology chosen for the project is the Waterfall model, which consists of five stages: requirements analysis and definition, system design, implementation and unit testing, system testing, and maintenance.

Development starts independently at the voice layer and the navigation layer, then works inward towards the intelligence layer in the middle. This order was chosen mainly because of the great importance of the voice and navigation layers. The project methodology, based on the Waterfall model, is outlined below.

1. Analyse the requirements for the voice-controlled robot.

2. Define the high-level specification and system architecture.

3. Design, implement, and test the voice recognition layer.

4. Design, implement, test and integrate the interpreter layer with the voice recognition layer.

5. Design, implement, test and integrate the navigation layer with the robot API layer.

6. Design, implement, test and integrate the intelligence layer with the interpreter and navigation layers.

7. Deploy onto the actual robot.

8. Test the integrated system.

9. Document.