Design team members: Kyle Morrison, Al Amir-Khalili, Christopher Best

Supervisor: Alexander Wong

Background

Many of us have been frustrated when trying to perform a visual task on a computer, whether resizing a data table, moving an object in a CAD program, panning across a document, or selecting text to copy. There are times when one wishes to reach into the computer, physically grab the object, and perform the manipulation by hand. The aim of this project is to integrate such physical manipulation with computerized design.

Project description

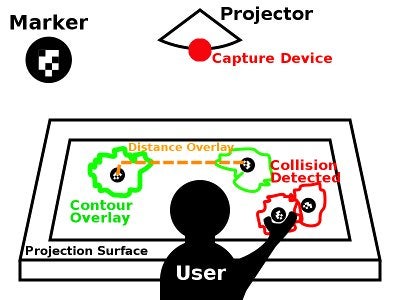

The proposed design draws upon image processing, physical markers and physical image projection to create an integrated physical computing system for the user. The markers will be detected by the camera and processed by the computing system. Based on an analysis of these markers, their meanings will be superimposed on top of them by the projector. The end result is that the user will have real-time physical input to the computerized system, allowing for fast and intuitive manipulation of objects.

It should be noted that the current system is application agnostic. It could be used for applications like board games, urban planning, military strategizing, interior design, or any of the host of applications that can benefit from the ability to physically manipulate a two-dimensional space.

Design methodology

- Design a Marker: The group will design a class of markers which are comfortable and intuitive for a person to manipulate, which can represent a variety of spatial planning tasks, and which can be easily recognized using computer vision for the input to the system. This will involve researching the state of the art in marker design from an image recognition perspective, developing requirements from a user perspective, and combining these into a simple, robust design.

- Implement a Recognition Algorithm: The group will implement a computer vision algorithm which takes an image containing the above markers and captures the spatial information they represent. This will involve researching how to design or adapt such an algorithm given the other constraints imposed by the model. In particular, user interference, such as hands and arms temporarily obscuring markers, will need to be accounted for. The algorithm will also need to compensate for the effects of projecting images onto the markers themselves. The algorithm(s) used will be optimized so that the system feels responsive in real time.

- Design a Visual Overlay Model: The group will design an overlay which renders additional information from the model, such as dimensions, conflicts, and visual representations, for display to the user. It will be necessary to research and determine what information is useful for the chosen application, and how to display it most clearly. For example, a floor planning model might show a warning when a fire exit is obstructed. In a battle planning scenario, it could show line of sight. The visual overlay will take input in a form that can be translated from the output of the marker recognition subsystem.

- Integrate Into a Physical System: A surface will be designed on which the markers can be manipulated with augmented display information projected onto it in real time. Integrating all these components will give the overall effect of a virtual display which has physical handles by which it can be manipulated.
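The recognition step above calls for tolerating hands and arms that temporarily obscure markers. One simple way to approach this, shown here only as a minimal sketch and not as the group's actual algorithm, is to keep each marker's last known position for a few frames after it stops being detected. The names `Detection`, `MarkerTracker`, and `maxMissedFrames` are hypothetical, and the sketch assumes each marker carries a unique ID.

```cpp
#include <cassert>
#include <map>
#include <vector>

// Hypothetical detection record: a marker ID and its position in the camera image.
struct Detection {
    int id;
    double x;
    double y;
};

// Keeps the last known position of each marker for a few frames after it
// disappears, so a hand passing over a marker does not remove it from the model.
// Assumption: marker IDs are unique within the scene.
class MarkerTracker {
public:
    explicit MarkerTracker(int maxMissedFrames) : maxMissed_(maxMissedFrames) {
        assert(maxMissedFrames >= 0);
    }

    // Feed the detections from one camera frame.
    // Returns the markers currently considered present.
    std::vector<Detection> update(const std::vector<Detection>& seen) {
        // Age every tracked marker by one frame.
        for (auto& entry : tracks_) entry.second.missed++;
        // Refresh (or create) tracks for markers seen in this frame.
        for (const Detection& d : seen) tracks_[d.id] = Track{d, 0};
        // Drop markers missing for too long and collect the rest.
        std::vector<Detection> present;
        for (auto it = tracks_.begin(); it != tracks_.end();) {
            if (it->second.missed > maxMissed_) {
                it = tracks_.erase(it);
            } else {
                present.push_back(it->second.last);
                ++it;
            }
        }
        return present;
    }

private:
    struct Track {
        Detection last;
        int missed;
    };
    int maxMissed_;
    std::map<int, Track> tracks_;
};
```

A larger `maxMissedFrames` makes the display more stable under occlusion but slower to react when a marker is really removed, so the value would have to be tuned against the camera frame rate.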

Research

The two areas of research were for determining the appropriate hardware for the project, and developing proper software systems to utilize this hardware. The first area dealt mostly with determining a suitable capture and projection system, and we eventually decided on the PlayStation Eye for the capture device and back projection to display the virtual design. For the software, we delved into state of the art circle detection and bit extraction methodologies, but eventually decided that homespun algorithms developed specifically for this application would yield the best results. This is because the design of the marker and projection environment is also determined by us, and thus can be designed in such a way to make detection and recognition tasks easier.
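To illustrate what bit extraction from a circular marker can look like, the following is a minimal sketch under an assumed marker layout. It is not the group's design. It supposes that a marker's centre and ring radius have already been found, that the ring is split into equal angular sectors, and that a dark sector encodes a 1. `GrayImage` and `decodeRingBits` are hypothetical names, and marker rotation is not handled.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Minimal grayscale image: row-major pixels, 0 = black, 255 = white.
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;

    std::uint8_t at(int x, int y) const { return pixels[y * width + x]; }
};

// Reads `bitCount` bits from a ring of radius `ringRadius` around (cx, cy).
// Assumed (hypothetical) layout: equal angular sectors, sector 0 starts at
// angle 0, and a dark sector encodes a 1. Bit i of the result is sector i.
// Samples that fall outside the image are read as 0.
unsigned decodeRingBits(const GrayImage& img, double cx, double cy,
                        double ringRadius, int bitCount,
                        std::uint8_t threshold = 128) {
    assert(bitCount > 0 && bitCount <= 32);
    const double pi = 3.14159265358979323846;
    unsigned code = 0;
    for (int i = 0; i < bitCount; ++i) {
        // Sample at the angular centre of sector i.
        double angle = 2.0 * pi * (i + 0.5) / bitCount;
        int x = static_cast<int>(std::lround(cx + ringRadius * std::cos(angle)));
        int y = static_cast<int>(std::lround(cy + ringRadius * std::sin(angle)));
        bool inside = x >= 0 && x < img.width && y >= 0 && y < img.height;
        bool dark = inside && img.at(x, y) < threshold;
        if (dark) code |= (1u << i);
    }
    return code;
}
```

Because the marker layout is under the designer's control, choices such as wide sectors and strong contrast can make a single sample per bit sufficient, which is the kind of simplification the paragraph above refers to.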

Design and analysis

A set of markers will be designed to act as machine-recognizable components of a physical scene. An image detection algorithm will be implemented in C++ using the OpenCV computer vision library to recognize these markers in real time. A physical prototype will be created using consumer electronics which allows input into a virtual application through manipulation of the physical markers. The system will project information into the physical scene, making the application fully interactive.
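Projecting information onto a marker requires converting a position seen by the camera into projector coordinates. A common way to do this for a flat surface is a 3x3 homography; the sketch below shows only how such a matrix would be applied and assumes the matrix has already been calibrated. `Point2`, `Homography`, and `cameraToProjector` are hypothetical names, and the source does not state which calibration method was used. In OpenCV, `cv::findHomography` can estimate such a matrix from point correspondences.

```cpp
#include <array>
#include <cassert>

struct Point2 {
    double x;
    double y;
};

// 3x3 homography in row-major order, mapping camera pixels to projector pixels.
using Homography = std::array<double, 9>;

// Applies the homography to a camera-space point.
// Assumption: the point does not map to infinity (w != 0).
Point2 cameraToProjector(const Homography& h, Point2 p) {
    double w = h[6] * p.x + h[7] * p.y + h[8];
    assert(w != 0.0);
    double px = (h[0] * p.x + h[1] * p.y + h[2]) / w;
    double py = (h[3] * p.x + h[4] * p.y + h[5]) / w;
    return Point2{px, py};
}
```

For example, with the matrix `{2, 0, 10, 0, 2, 20, 0, 0, 1}` a marker detected at camera position (3, 4) would be drawn at projector position (16, 28).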

Testing

Tests will be carried out at each design stage. These include measuring the worst-case execution time (WCET), the maximum number of unique markers detected, the maximum number of repeated markers detected, and the response to obstructions. For a holistic test, the design symposium serves as an ideal medium for obtaining user feedback on the responsiveness and intuitiveness of the finished integrated system.
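As a rough illustration of the execution-time test, the sketch below times a processing step repeatedly and reports the longest run. Note that this gives a worst observed time over the trials, which is only an empirical estimate and not a proven WCET bound. The function name `worstObservedMicros` and the idea of passing the per-frame step as a callable are assumptions made for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <functional>

// Runs `step` `runs` times and returns the longest single run in microseconds.
// This is a measured worst case over the trials, not a guaranteed upper bound.
double worstObservedMicros(const std::function<void()>& step, int runs) {
    assert(runs > 0);
    using Clock = std::chrono::steady_clock;
    double worst = 0.0;
    for (int i = 0; i < runs; ++i) {
        auto start = Clock::now();
        step();
        auto stop = Clock::now();
        double micros =
            std::chrono::duration<double, std::micro>(stop - start).count();
        worst = std::max(worst, micros);
    }
    return worst;
}
```

In practice the callable would wrap one full frame of marker detection, and the result would be compared against the frame period of the camera to judge whether real-time operation is plausible.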