Introduction

Fake news is a pressing issue today, and it falls upon us to tell fact from fiction. Luckily, there are ways to spot inconsistencies, biases, and falsehoods in written media, but what happens when fake news appears in our visual and audio media too? We may not always be able to detect the misinformation.

Programmers have developed software that can produce exactly this kind of disinformation, known as Deepfakes. Deepfakes use deep learning algorithms to create false audio or video. Deepfake media usually consists of a face swap, where one person’s facial structure and behaviour are mapped near-perfectly onto another’s. Someone’s voice and speech patterns may also be recreated and made to say made-up dialogue. A well-known example is a Deepfake video that recreates an iconic scene from Back to the Future with different actors.

Deepfakes started to gain popularity in 2017 [1], and by 2019 [2] at least 14,678 Deepfake videos had been found online [3]. The rate at which the videos improve and spread continues to increase. In the early years of Deepfakes, it was very easy to spot the difference between real and computer-generated media. However, Deepfakes are now approaching the point where they look genuine, and they are likely to keep improving until real and synthetic footage are hard to tell apart.

Currently, 96% of existing Deepfakes are used to map the faces of well-known celebrities onto the faces of adult video stars [2]. Deepfakes are also used to make parodies and spoof videos, or to enhance art gallery experiences. At the Salvador Dalí Museum in Florida, Deepfakes are used to mimic the great artist Dalí so that he can show and explain his art to guests. They even let him take selfies with visitors!

How it works

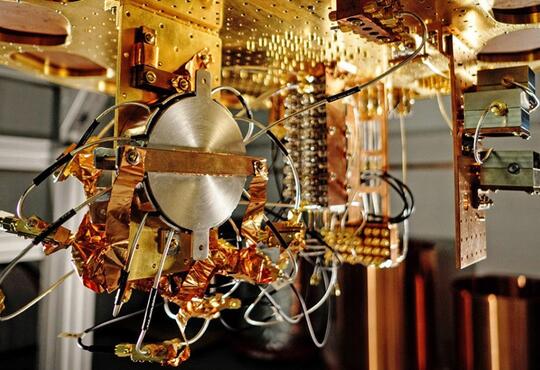

Now, you may be wondering how artificial intelligence mimics humans, their behaviours, and their speech patterns so well. A deep learning system can be fed hours of footage of a subject, shot from many different angles, until the model it builds closely resembles that subject.[4]

There are two main ways deep learning systems map one person’s face onto another. One is to take photos of the two people you want to face swap and run them through algorithms known as encoders and decoders. First, an encoder compresses the pictures, finding and keeping the features the two faces have in common. Then, a decoder reconstructs a face from the compressed images. Each decoder is trained to recover one specific person’s face. To perform the swap, one feeds the compressed image of person A into the decoder trained on person B, and vice versa, so that the decoder reconstructs person B’s face with the expressions and orientation of person A.[2]
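The encoder and decoder routing described above can be illustrated with a deliberately tiny Python sketch. Everything in it is an assumption made for illustration: each "face" is just three made-up numbers (an expression value plus two identity markers), the shared encoder and the two decoders are single linear layers, and the names `encode`, `decode`, `train`, and `reconstruction_error` are hypothetical. Real Deepfake tools use deep neural networks trained on thousands of images.

```python
# Toy "faces": [expression, marker_for_A, marker_for_B]. All values are made up.
EXPRESSIONS = [-1.0, -0.5, 0.0, 0.5, 1.0]
FACES = {
    "A": [[t, 1.0, 0.0] for t in EXPRESSIONS],
    "B": [[t, 0.0, 1.0] for t in EXPRESSIONS],
}

def encode(w, face):
    """Shared encoder: compress a 3-number face into a single code."""
    return sum(wi * xi for wi, xi in zip(w, face))

def decode(dec, code):
    """Person-specific decoder: rebuild a 3-number face from the code."""
    return [u * code + c for u, c in zip(dec["u"], dec["c"])]

def reconstruction_error(w, decoders):
    """Mean squared reconstruction error when each face uses its own decoder."""
    total, count = 0.0, 0
    for person, faces in FACES.items():
        for face in faces:
            rebuilt = decode(decoders[person], encode(w, face))
            total += sum((y - x) ** 2 for y, x in zip(rebuilt, face))
            count += 1
    return total / count

def train(steps=500, lr=0.1):
    """Full-batch gradient descent on the reconstruction error."""
    w = [0.5, 0.0, 0.0]  # fixed starting weights so the run is reproducible
    decoders = {p: {"u": [0.5, 0.0, 0.0], "c": [0.0, 0.0, 0.0]} for p in FACES}
    n = sum(len(faces) for faces in FACES.values())
    for _ in range(steps):
        grad_w = [0.0] * 3
        grads = {p: {"u": [0.0] * 3, "c": [0.0] * 3} for p in FACES}
        for person, faces in FACES.items():
            dec = decoders[person]
            for face in faces:
                code = encode(w, face)
                errors = [y - x for y, x in zip(decode(dec, code), face)]
                # Gradient of the squared error with respect to the code.
                d_code = sum(2 * e * u for e, u in zip(errors, dec["u"]))
                for i in range(3):
                    grads[person]["u"][i] += 2 * errors[i] * code / n
                    grads[person]["c"][i] += 2 * errors[i] / n
                    grad_w[i] += d_code * face[i] / n
        for i in range(3):
            w[i] -= lr * grad_w[i]
            for person in FACES:
                decoders[person]["u"][i] -= lr * grads[person]["u"][i]
                decoders[person]["c"][i] -= lr * grads[person]["c"][i]
    return w, decoders

w, decoders = train()
print("reconstruction error:", reconstruction_error(w, decoders))

# The swap: encode a face of person A, then decode it with B's decoder.
face_a = [0.5, 1.0, 0.0]
swapped = decode(decoders["B"], encode(w, face_a))
# Comes out close to [0.5, 0.0, 1.0]: B's identity markers, A's expression value.
print("swapped face:", [round(v, 3) for v in swapped])
```

In this toy setup the single-number code ends up carrying only the expression, while each decoder stores its own person's identity, which is why sending A's code through B's decoder yields B's face with A's expression. The clean result depends on the symmetric toy data and starting weights chosen here; it is a sketch of the idea, not of how any particular Deepfake tool is implemented.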

Another way to create Deepfakes is to use a generative adversarial network, better known as a GAN. A GAN pits two algorithms against each other. The first algorithm, called the generator, produces synthetic images. These images are then fed to the second algorithm, the discriminator, alongside actual images. The discriminator goes through all the data it has and tries to identify which of the images are authentic. At first the generator’s output may be nonsense, but after some time in this feedback loop it becomes very realistic. The cycle is repeated over and over until the generator produces a photorealistic image. The point of the GAN is to make an image so convincing that the discriminator can no longer tell it apart from the real data it was originally given, meaning the generator has produced a highly convincing computer-generated image.[2]
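The generator and discriminator feedback loop can also be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the "images" are single numbers drawn around 4.0, the generator is a linear function of random noise, the discriminator is a logistic classifier, and all names (`generate`, `discriminate`, `discriminator_step`, `generator_step`) and settings are hypothetical. Real GANs use deep networks on actual images.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def generate(gen, noise):
    """Generator: turn a random number into a fake sample."""
    return gen["a"] * noise + gen["b"]

def discriminate(disc, x):
    """Discriminator: estimated probability that x is a real sample."""
    return sigmoid(disc["w"] * x + disc["c"])

def discriminator_loss(disc, real, fake):
    """Cross-entropy: low when real scores near 1 and fake scores near 0."""
    on_real = -sum(math.log(max(discriminate(disc, x), 1e-12)) for x in real)
    on_fake = -sum(math.log(max(1.0 - discriminate(disc, x), 1e-12)) for x in fake)
    return on_real / len(real) + on_fake / len(fake)

def discriminator_step(disc, real, fake, lr):
    """One gradient step that makes the discriminator better at telling them apart."""
    grad_w = grad_c = 0.0
    for x in real:
        err = discriminate(disc, x) - 1.0  # target label for real data is 1
        grad_w += err * x / len(real)
        grad_c += err / len(real)
    for x in fake:
        err = discriminate(disc, x)        # target label for fake data is 0
        grad_w += err * x / len(fake)
        grad_c += err / len(fake)
    disc["w"] -= lr * grad_w
    disc["c"] -= lr * grad_c

def generator_step(gen, disc, noise, lr):
    """One gradient step on -log D(G(z)): make fakes the discriminator accepts."""
    grad_a = grad_b = 0.0
    for z in noise:
        score = discriminate(disc, generate(gen, z))
        d_sample = -(1.0 - score) * disc["w"]  # gradient w.r.t. the fake sample
        grad_a += d_sample * z / len(noise)
        grad_b += d_sample / len(noise)
    gen["a"] -= lr * grad_a
    gen["b"] -= lr * grad_b

random.seed(0)
gen = {"a": 1.0, "b": 0.0}   # starts out producing samples around 0
disc = {"w": 0.0, "c": 0.0}  # starts out undecided (scores everything 0.5)
for _ in range(2000):
    real = [random.gauss(4.0, 0.5) for _ in range(32)]
    noise = [random.gauss(0.0, 1.0) for _ in range(32)]
    fake = [generate(gen, z) for z in noise]
    discriminator_step(disc, real, fake, lr=0.05)
    generator_step(gen, disc, noise, lr=0.05)

print(f"generator offset b after training: {gen['b']:.2f} (real data is centred on 4.0)")
```

Each pass updates the discriminator first and the generator second, mirroring the cycle in the text. The discriminator's feedback pushes the generator's output toward the region it currently labels as real, but small GANs like this one can oscillate around the target rather than settle on it, so the printed value should not be read as a guaranteed result.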

Controversy, danger, and predicted misuse

Unsurprisingly, this technology comes with its fair share of controversy. Many researchers working with Deepfakes believe that they pose a great risk to life as we know it and, without control or restraint, may change how we perceive truth. Professor Hao Li of the University of Southern California puts it well: “The first risk is that people are already using the fact deep fakes exist to discredit genuine video evidence. Even though there's footage of you doing or saying something you can say it was a deep fake and it's very hard to prove otherwise”.[1]

Furthermore, some go as far as to say that Deepfakes may pose a threat to democracy itself. Political parties may be able to sway voters with very realistic but false evidence about rival candidates. Likewise, malicious groups may create highly controversial videos about those in power, which could jeopardize their positions. Deepfakes have already been used in the political world.[1] For example, in Belgium, a political group created a Deepfake video of the prime minister giving a speech about how Covid-19 was linked to climate change.[3] Clearly, if Deepfakes become commonplace, many people will not know what to believe, and false information could spread on a mass scale. This is especially dangerous in countries where media literacy is very low.

Scams and phishing attempts could also become rampant, since the faces and voices that biometric security measures such as facial or voice recognition rely on can be synthesized and used without consent. Phone scams in particular could become far more effective. It may be easy to dismiss a stranger on the phone asking whether your fridge is running, but if the caller were to mimic the voice of a loved one or a friend, one would be far more inclined to fall for it.[2]

Conclusion

It may be funny to see a comedian make a satirical video where they plaster the face of a political figure onto their own, but the power and dangers of Deepfakes are very evident. The core issue is clearly described by Professor Lilian Edwards from Newcastle University when she says, “The problem may not be so much the faked reality as the fact that real reality becomes plausibly deniable”.[2] Without proper laws or systems that can distinguish real from fake, our shared sense of truth may erode. Consequently, if the notion of ‘seeing is believing’ no longer holds, many of us may cease to find truth in our lives.

References

[1] Thomas, D. (2020, January 23). Deepfakes: A threat to democracy or just a bit of fun? Retrieved from https://www.bbc.com/news/business-51204954

[2] Sample, I. (2020, January 13). What are deepfakes – and how can you spot them? Retrieved from https://www.theguardian.com/technology/2020/jan/13/what-are-deepfakes-and-how-can-you-spot-them

[3] Toews, R. (2020, May 26). Deepfakes Are Going To Wreak Havoc On Society. We Are Not Prepared. Retrieved from https://www.forbes.com/sites/robtoews/2020/05/25/deepfakes-are-going-to-wreak-havoc-on-society-we-are-not-prepared/#728d89837494

[4] Shao, G. (2020, January 17). What 'deepfakes' are and how they may be dangerous. Retrieved from https://www.cnbc.com/2019/10/14/what-is-deepfake-and-how-it-might-be-dangerous.html