Notice: The Statistical Consulting and Survey Research Unit was previously called the Survey Research Centre. Our unit is referred to by this name in old newsletters.

Quality Over Quantity: The Pros and Cons of Using Crowdsourcing Platforms to Find Survey Respondents

When conducting survey research, defining the sampling frame is an important consideration. This involves deciding how to source survey respondents. Larger samples can provide more statistical power and more precise estimates, which strengthens survey data and the findings based on them. Nevertheless, it is important not to sacrifice data quality for quantity.

Online crowdsourcing platforms have emerged as marketplaces that can supply very large numbers of respondents (i.e., survey samples). Researchers can post tasks, such as surveys, on these platforms and, for a fee, recruit survey participants. Before choosing this recruitment approach, consider the following pros and cons:

Pros:

Quick Turnaround - These platforms offer access to a large volume of people who may be willing to complete a survey. Depending on the number of respondents needed, data collection could be completed in a day.

Low Cost - With a ‘do it yourself’ approach, using crowdsourcing platforms is generally cheaper than using more traditional sampling approaches. But at what cost to data quality?

A Possible Approach to Piloting Surveys - For grant applications that involve conducting surveys, sample size calculations and power estimations are key elements. Although it is possible to calculate sample size or power without prior data, doing so usually requires very rough guesses about the quantities involved. Pilot data can therefore be very helpful in obtaining more accurate sample size calculations and/or power estimations. For example, when calculating the sample size needed to estimate a proportion, a pilot estimate from a crowdsourcing platform, despite its limitations, may be a better starting point than assuming the worst-case scenario (i.e., that the proportion of interest is around 50%), which maximizes the required sample size. Likewise, when estimating a continuous variable, pilot data provide a better estimate of its variance, which in turn allows for more accurate sample size calculations (or power estimations).

In addition, piloting a study on a crowdsourcing platform may be an opportunity to test your survey questions for wording clarity and to assess whether respondents understand what is being asked. However, using crowdsourcing platforms to test survey length is not recommended because of the high likelihood of encountering ‘speeders’ (see Cons, point one, below).
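To make the sample size point in the pilot-survey item concrete, here is a minimal Python sketch of the textbook formulas for a simple random sample: n = z²·p(1 - p)/e² for a proportion and n = (z·σ/e)² for a mean. The function names, the 5-percentage-point margin of error, and the 20% "pilot" estimate are hypothetical values chosen for illustration. The sketch ignores finite population corrections and design effects, and it is not a procedure described elsewhere in this article.

```python
import math
from statistics import NormalDist


def z_critical(confidence: float) -> float:
    """Two-sided critical value from the standard normal distribution."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def sample_size_proportion(p: float, margin: float, confidence: float = 0.95) -> int:
    """Respondents needed to estimate a proportion p to within +/- margin.

    Simple-random-sample formula n = z^2 * p * (1 - p) / margin^2
    (hypothetical helper; no finite population correction or design effect).
    """
    z = z_critical(confidence)
    return math.ceil(z**2 * p * (1 - p) / margin**2)


def sample_size_mean(sd: float, margin: float, confidence: float = 0.95) -> int:
    """Respondents needed to estimate a mean to within +/- margin,
    given a standard deviation sd (e.g. one estimated from pilot data)."""
    z = z_critical(confidence)
    return math.ceil((z * sd / margin) ** 2)


# Worst case: no prior information, so assume p = 0.5.
worst_case = sample_size_proportion(0.5, margin=0.05)
# Hypothetical pilot result suggesting the proportion is closer to 20%.
pilot_based = sample_size_proportion(0.2, margin=0.05)
print(worst_case, pilot_based)  # 385 246
```

With no prior information, the worst-case assumption of 50% calls for 385 respondents; a hypothetical pilot estimate of 20% lowers that to 246 for the same margin of error. For a continuous variable, the pilot data enter through the standard deviation `sd`.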

Cons:

Poor Data Quality - While participants may complete your task, there is a strong possibility that they will not provide good data. Their focus may be on finishing the survey as quickly as possible and moving on to the next task to maximize their incentive earnings, rather than taking the time to provide thoughtful, accurate answers. These respondents are known as 'speeders'. Best practice is to identify and remove speeders from the final data file, which adds study costs in two ways: incentives paid for data that isn’t useful, and the extra time spent cleaning the dataset. Correctly identifying speeders is often not an easy task.
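As an illustration of how speeders might be screened, the Python sketch below applies two common heuristics: flagging completion times far below the median, and flagging "straightlining" (giving the identical answer to every item in a rating grid). The 50%-of-median cutoff, the function names, the respondent IDs, and the data are hypothetical assumptions, not a standard threshold. Because identifying speeders is not easy, flagged cases are probably best reviewed by hand rather than deleted automatically; a fast but attentive respondent can trip the time rule.

```python
from statistics import median


def flag_speeders(durations: dict[str, float], fraction: float = 0.5) -> set[str]:
    """Flag respondents who finished faster than `fraction` of the median
    completion time (hypothetical cutoff; tune it for each survey)."""
    cutoff = fraction * median(durations.values())
    return {rid for rid, seconds in durations.items() if seconds < cutoff}


def flag_straightliners(grid_answers: dict[str, list[int]]) -> set[str]:
    """Flag respondents who gave the same answer to every item in a rating grid."""
    return {
        rid
        for rid, answers in grid_answers.items()
        if len(answers) > 1 and len(set(answers)) == 1
    }


# Hypothetical completion times in seconds, keyed by respondent ID.
durations = {"r1": 600, "r2": 540, "r3": 150, "r4": 660, "r5": 90}
# Hypothetical answers to a four-item, 1-5 rating grid.
grid = {
    "r1": [4, 2, 5, 3],
    "r2": [3, 3, 3, 3],
    "r3": [1, 1, 1, 1],
    "r4": [5, 4, 4, 2],
    "r5": [2, 5, 1, 4],
}

speeders = flag_speeders(durations)
straightliners = flag_straightliners(grid)
print(sorted(speeders), sorted(straightliners))  # ['r3', 'r5'] ['r2', 'r3']
```

Respondents caught by both rules (here, `r3`) are the strongest candidates for removal; those caught by only one rule deserve a closer look.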

Poor Representativeness - Sample representativeness is not guaranteed through crowdsourcing platforms. Participants on these platforms are those who volunteer to sign up (i.e., they are self-selected), and their demographic profile (e.g., age, gender, and regional distributions) may be vastly different from that of the population of interest. Therefore, this approach does not provide a properly defined sampling frame, which is needed for accurate statistical inference and estimation.

Furthermore, because respondents from crowdsourcing platforms are self-selected (rather than randomly chosen from the population of interest), this approach cannot be considered probability-based sampling. Probability-based sampling and a properly defined sampling frame are two fundamental components of accurate statistical inference and estimation.

Greater Potential to Encounter Professional Survey Takers - People who actively seek out paid survey opportunities are often referred to as ‘professional survey takers’. Crowdsourcing platforms are highly attractive to this group, who may seek out and follow the researchers offering the highest incentives. Professional survey takers typically complete several surveys every month or even every week, and are therefore very comfortable with surveys. Their experience may help them recognize what a survey is trying to measure, increasing the risk of response bias, where participants give the answer they think researchers want rather than an accurate one. In addition, their focus is on completing as many surveys as possible, as quickly as possible, rather than on providing thoughtful, accurate answers; hence, many ‘professional survey takers’ also tend to be ‘speeders’. They may also be registered on multiple platforms, which could lead to duplicate responses if a researcher launches a survey on more than one platform. Professional survey takers therefore pose a number of concerns for data quality, sample representativeness, and the breadth of reach of a survey sampling frame.

Data Storage - Depending on how a survey is set up, using a crowdsourcing platform may raise data storage concerns. Data collected and/or stored by a crowdsourcing platform are subject to the data privacy laws of the country where the platform is based. This raises ethical concerns, as it could affect the data privacy and security assurances given to respondents, which in turn may lower survey response rates.

These are just a few areas to consider when using crowdsourcing platforms for survey data collection. At first glance, crowdsourcing platforms may appear to offer a quick and easy solution, but further consideration raises the question: “Is it worth sacrificing data quality for faster and cheaper data collection?”

Experts at the Survey Research Centre are able to provide advice about sampling and survey methodology to assist with collecting high quality survey data. For more information on how we can help you with your research project, please contact us.

"Polls, they're not just for skiing, eh": Tracking the 2018 U.S. Midterm Elections

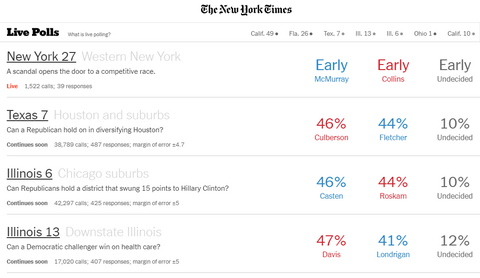

*Screenshot of the results posted in real time during polling, taken around October 29, 2018.*

The United States Midterm elections took place on November 6, 2018. The Survey Research Centre was actively involved in collecting polling data for the New York Times Upshot.

Midterm elections are held near the midpoint of a president's four-year term of office. They are sometimes regarded as a referendum on the performance of the sitting president and/or the incumbent party, and the president's party tends to lose ground during midterms. Given how partisan the American electorate is, and how sharply its views of the Trump administration diverge, the results of these elections were anxiously awaited.

The federal offices up for election during the midterms are seats in the United States Congress: all 435 voting seats in the House of Representatives and 33 or 34 (i.e., roughly one third) of the 100 seats in the Senate. Thirty-six state governors are also elected during midterm elections.

The Upshot section of the New York Times partnered with Siena College to poll dozens of the most competitive House and Senate races across the U.S. during the midterm campaign. The Upshot features scoreboard-like data visualizations in which poll results are updated in real time, after every phone call, giving readers a way to follow important and complicated national issues such as the midterm campaign. With over 30 telephone interviewers working seven days a week, the SRC was part of an effort that contacted 48,462 registered voters across 95 polls.

The polling survey took 4 to 6 minutes to complete and included questions such as how likely the respondent was to vote in the November 6 election and which candidate they supported. In light of current political topics, respondents were also asked about their support for the recent U.S.-Mexico-Canada trade agreement, tariffs on steel and aluminum, the tax reform bill, and other policies put in place by Donald Trump.

The SRC was proud to collaborate with Siena College on this project by collecting polling data for these important U.S. Midterm elections.

"Bin There, Doing That."

It has been over a year and a half since the Region of Waterloo introduced big changes to its waste collection. In March 2017, garbage collection was reduced from 10 bags of garbage collected every week to a maximum of 4 bags collected every two weeks. Green bin and blue box collection continued to be available weekly for most residents, though it was a recent addition for the surrounding townships.

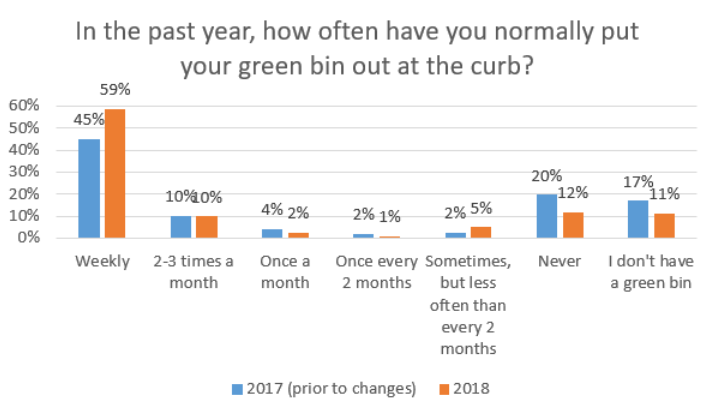

The changes were implemented to reduce the amount of garbage flowing into the Region’s single, rapidly filling landfill site. The Region reported on its website that before March 2017, over half of residential garbage (by weight) consisted of organic, compostable material that could have been redirected to green bins, while another 14% consisted of recyclable material that could have been redirected to blue boxes. These data are consistent with the initial survey conducted by the Survey Research Centre (SRC) at the University of Waterloo in early 2017, in which less than half (45%) of residents surveyed reported putting their green bin at the curb for collection on a weekly basis in the past year.

Now that the waste collection changes have been in place for 18 months, the SRC wanted to follow up to see whether green bin use has increased. The SRC conducts the annual Waterloo Region Matters Survey, through which university researchers and community organizations with limited budgets can field their own survey questions while sharing the cost of conducting survey research. Questions about the waste collection changes were included in the 2018 survey.

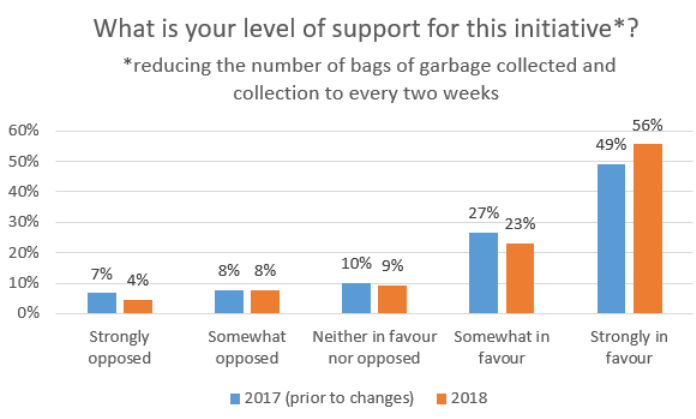

The research shows that 59% of Waterloo Region residents surveyed report having put their green bin at the curb for collection on a weekly basis in the past year, a statistically significant increase over the 2017 result of 45%. Support for the waste collection changes is unchanged from 2017, with over three-quarters of respondents indicating that they are somewhat or strongly in favour of the changes. The proportions of residents who say they never put their green bin out at the curb (12%) and who say they don’t have a green bin (11%) have both dropped significantly since early 2017 (from 20% and 17%, respectively).

The 2017 Waterloo Region Matters Survey was conducted among a random sample of 404 adult residents from February 13 to March 9, 2017. The 2018 Waterloo Region Matters Survey was conducted among a web panel of 248 adult residents from September 5 to 26, 2018.
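As a rough check on the reported increase in weekly green bin use, the Python sketch below runs a pooled two-proportion z-test on the published figures (45% of 404 respondents in 2017 and 59% of 248 in 2018). It assumes both waves are independent simple random samples and uses rounded percentages; since the 2018 wave came from a web panel, treat the result as illustrative only. The function name is hypothetical, and this is not necessarily the test the SRC used.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test (assumes independent simple random samples).

    Returns the z statistic for p2 - p1 and its two-sided p-value.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Weekly green bin use: 45% of 404 (2017) vs. 59% of 248 (2018), rounded figures.
z, p_value = two_proportion_z_test(0.45, 404, 0.59, 248)
print(f"z = {z:.2f}, two-sided p = {p_value:.4f}")
```

This gives a z statistic of roughly 3.5, comfortably beyond the 1.96 cut-off for significance at the 5% level.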

The results are encouraging and the Region of Waterloo continues to explore ways to improve waste management. The more residents can divert organic, compostable material into green bins and recyclable material into blue boxes, the longer the landfill will last. For a refresher on what can and cannot go into green bins and blue boxes, visit the Region of Waterloo website (www.regionofwaterloo.ca/en/waste-management.aspx).

The Survey Research Centre offers survey research services ranging from a simple consultation to a complete package of study and survey design, data collection and top-line analysis, all at a cost-recovery price. It provides telephone call centre services, online survey hosting, survey programming and mail survey services. A free one-hour consultation is available to University of Waterloo faculty, staff and students.

Featured Projects

Surveying Local Attitudes Towards Massasauga Snakes

The Nature Conservancy of Canada and Bruce Peninsula National Park are interested in examining attitudes and opinions about Massasauga rattlesnakes (Ontario's only venomous snake) among local residents of the Saugeen Bruce Peninsula, where the snakes are mainly found. The goal is to understand people’s opinions in order to help reduce the impact of human activity on the snakes and to encourage protection and recovery activities.

The SRC provided telephone data collection services for this study, using a Random Digit Dialing (RDD) approach. Survey data collection was conducted from late August to mid-September 2018.

Announcements

Beth McLay, project manager at the SRC, began a secondment with the University of Waterloo’s Department of Co-operative Education in November 2018. She will be in the role of Market Research Manager until November 2019.

Lindsey Webster, project manager at the SRC, is currently on leave, having welcomed a new addition to her family in December 2018. Congratulations, Lindsey! Lindsey will be returning to the SRC in January 2020.