The Canada Foundation for Innovation and the Ontario Research Fund provide complementary (matching) funding for proposals for new equipment that will propel Canadian academic research(ers) to the forefront of science.

When I considered the technologies that could revolutionize my research agenda and bring something novel and innovative to the design, construction, and results of agent-based models of land-use and land-cover change and ecological models of plant growth… there was no doubt in my mind… unmanned aerial vehicles are the future! Obviously they’re available and frequently appear in the media, but their use in academia is sparse, and I had not heard any researchers in ABM and land-use science even mention the idea.

The idea proposed was to use the UAV as part of a rapid agricultural assessment (RAD). The UAV would provide near-real-time imagery that could be used in conducting a geospatial social survey of land management practices with the farmer, as well as collect elevation and ecological data. These data would be combined with fieldwork (e.g., soil samples and vegetation plots) and ingested into an agent-based coupled natural-human systems model (CHANs) of land management. The data would not only enhance realism, supply boundary conditions, and facilitate model calibration and validation… but get this… they could also be useful to the farmer! Acquired data could help the farmer identify areas of soil erosion, crop stress, and other factors affecting production and environmental sustainability. Incredible… now I’m building a relationship with farmers beyond my weekly trips to the Kitchener market and my childhood friends from Victoria County.

The first steps in this research program have taken place. The equipment funding arrived, an unmanned aerial vehicle system (the SkyRanger) was acquired from Aeryon Labs, and I took one of my graduate students (Andrei Balulescu) with me for some flight training last week. Part of the equipment and training involves using Pix4D Mapper to create orthorectified mosaics and 3-dimensional surface or object models. This software is accelerated by GPGPUs, and our lab has recently acquired an NVIDIA K4200 GPGPU that resides in a 12-core workstation. Complementing these items are a couple of servers, and with the lab equipped with these advanced tools we’re ready and keen to conduct exciting, novel, and high-impact research. If you’re interested in researching with these tools, then have a look at my previous post and send me some information. Funding is available for students interested in a Master’s or PhD related to modelling land-use and land-cover change as well as ecosystem modelling. Bringing your own funding and research is equally exciting.

The remainder of this post documents some of the training and the end product of a test flight around a local church as shown above and below.

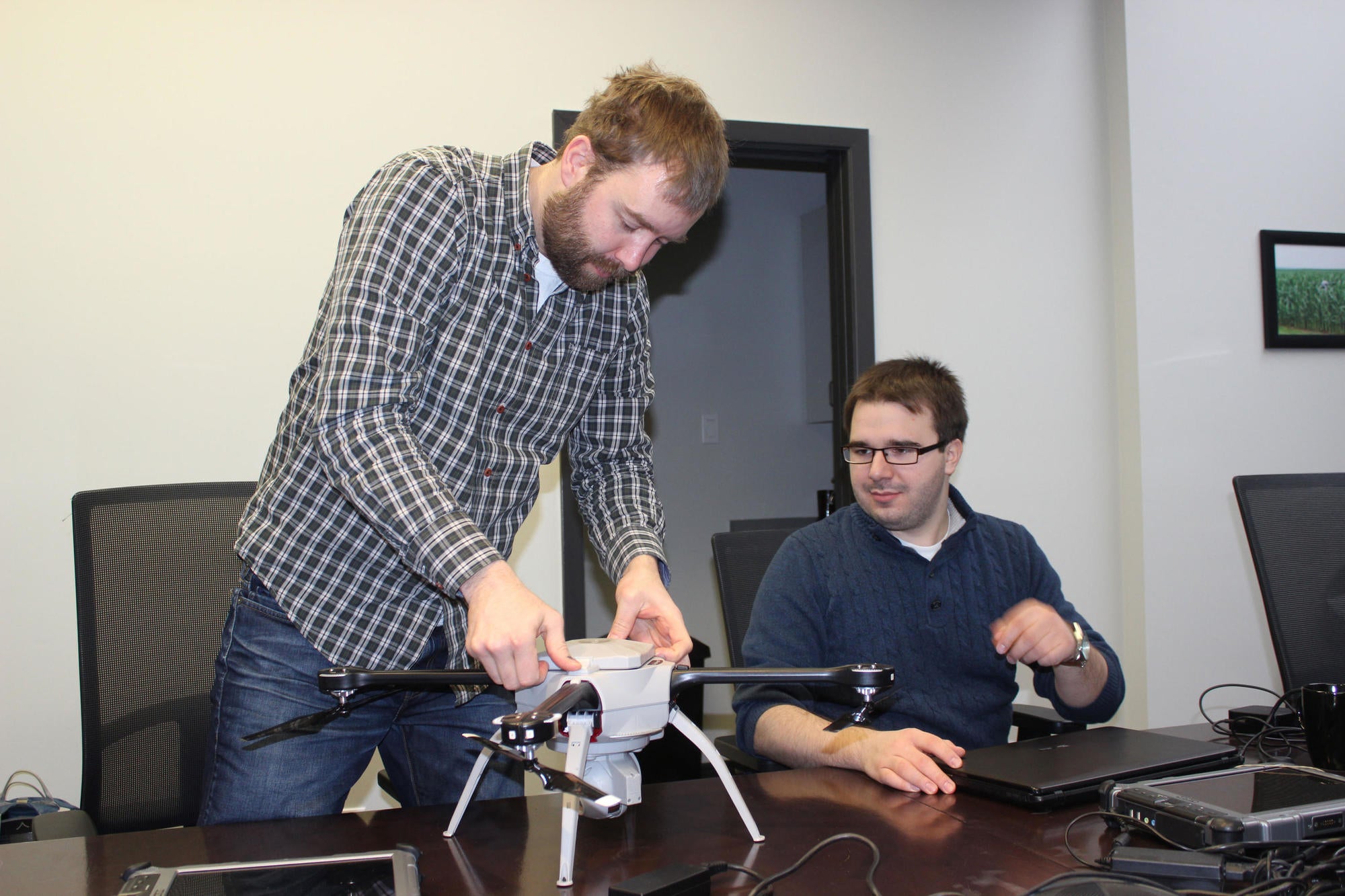

In the above photo we are assembling and disassembling the SkyRanger.

Starting to set up our second day of flight training.

The base station, mounted on the car, communicates directly with the flyer and relays our flight paths and flight instructions from the table to the flyer.

In 35 km/h winds and quite a bit of snow, we both enjoyed a couple of flights and tested the safety mechanisms of the flyer, including breaking off communication with the flyer and watching it return home without incident.

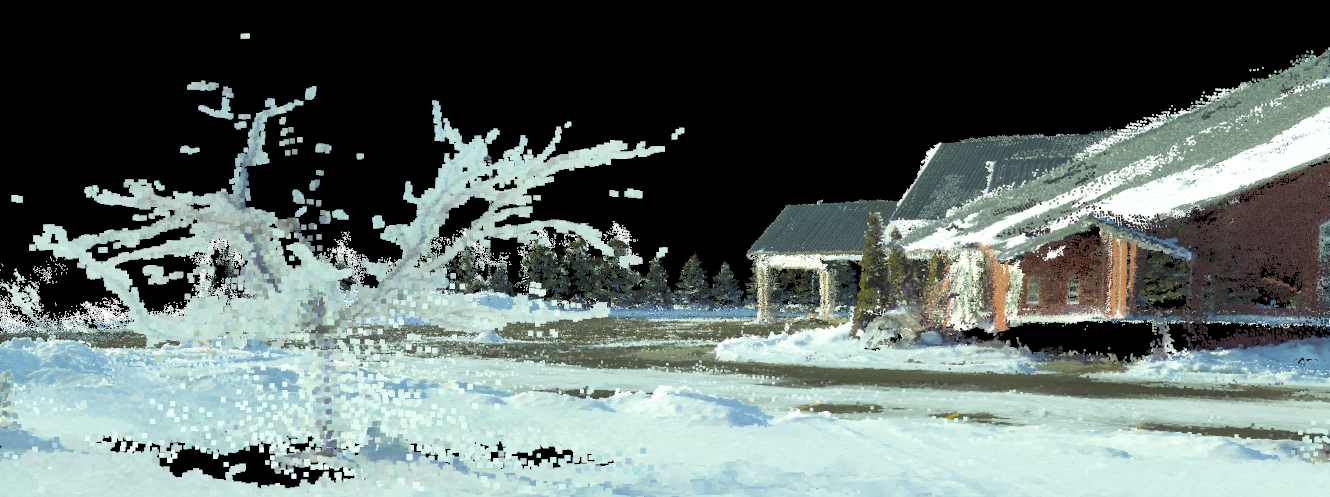

Screen capture of a portion of the point cloud that Pix4D derived from a 20-minute flight around a local church.

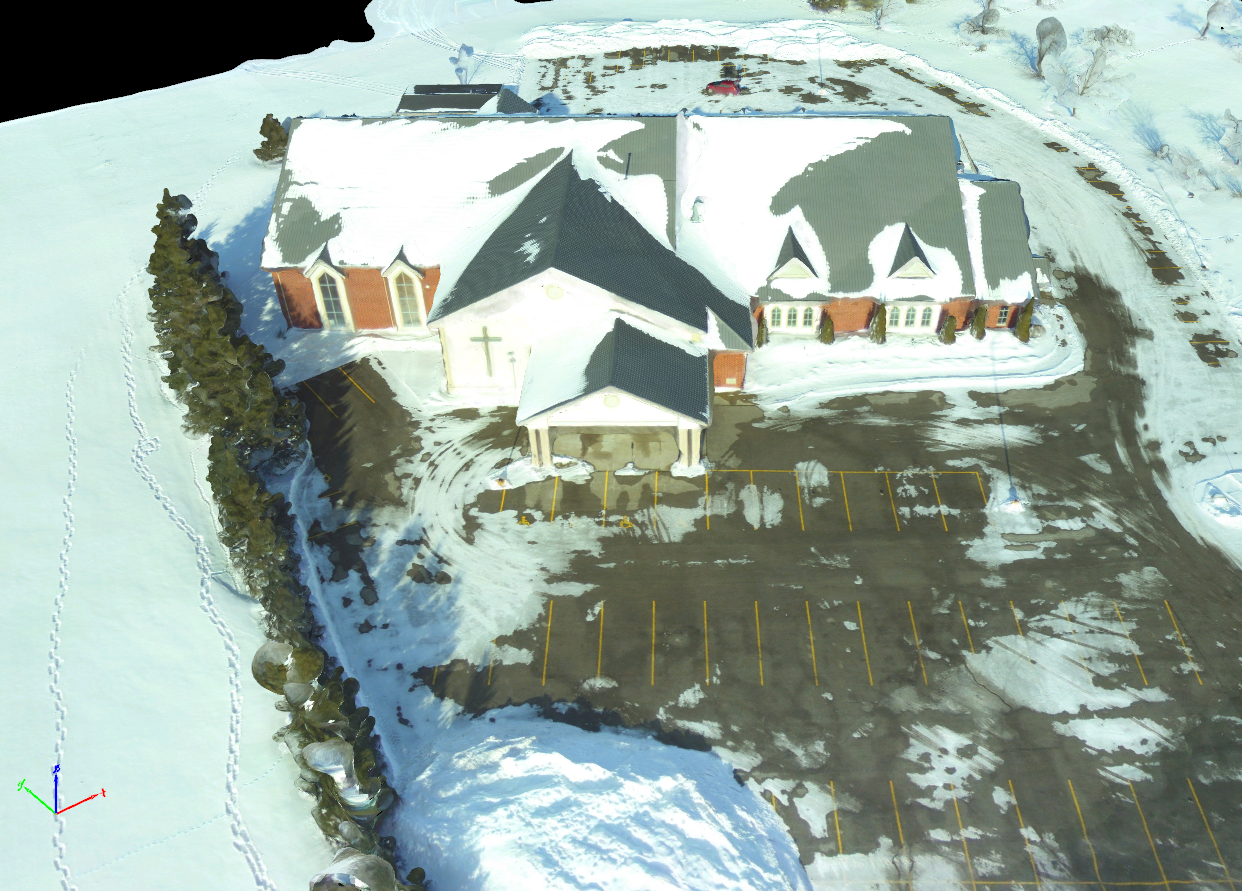

A triangular mesh interpolation of point cloud data using Pix4D.

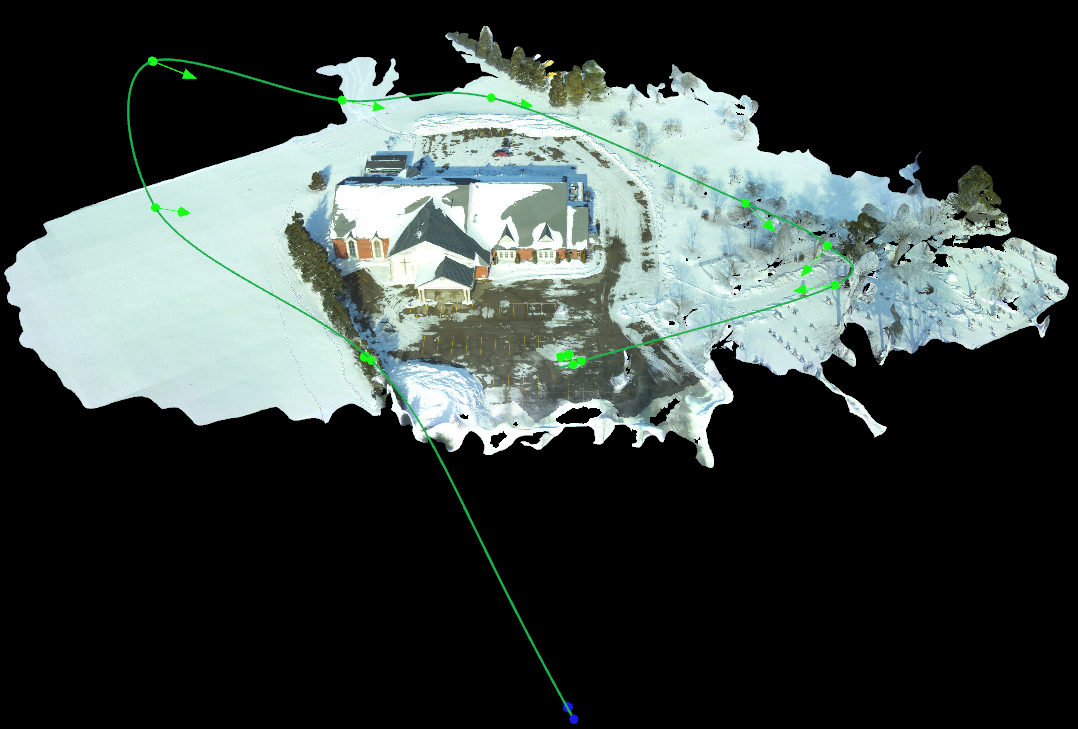

Post-processing view of the flight plan created with Pix4D, which you see in the animation at the top and bottom of this post.