We build upon the recent Haptic Experience (HX) model and report on progress towards measuring the five foundational constructs for designing haptic experiences.

Haptic technology is becoming an essential tool for designers seeking to create great user experience (UX). There is mounting evidence that haptic feedback of different types can contribute to existing UX measures. However, these metrics give little insight into how and why haptics contributes to people’s experience, and little direction for hapticians to improve their designs.

Currently, designers, researchers, and hapticians use qualitative methods to gain deeper insight into their designs. Practitioners favour small, in-person acceptance tests to evaluate their designs, iterating until a design just “feels right,” and in-person demos to persuade stakeholders. Neither approach scales to large quantitative studies or translates to remote work, and while some hapticians employ general scales like the AttrakDiff questionnaire, there is still a desire for more targeted measurement tools.

In this paper, we report on initial findings from conducting scale development to measure haptic experience (HX). Scale development – designing and validating a measurement instrument – is considered critical to building theoretical knowledge in human and social sciences. We employed scale development to better understand the HX model, a proposed standard for defining haptic experience in terms of its pragmatic (usability) and hedonic (experiential) factors.

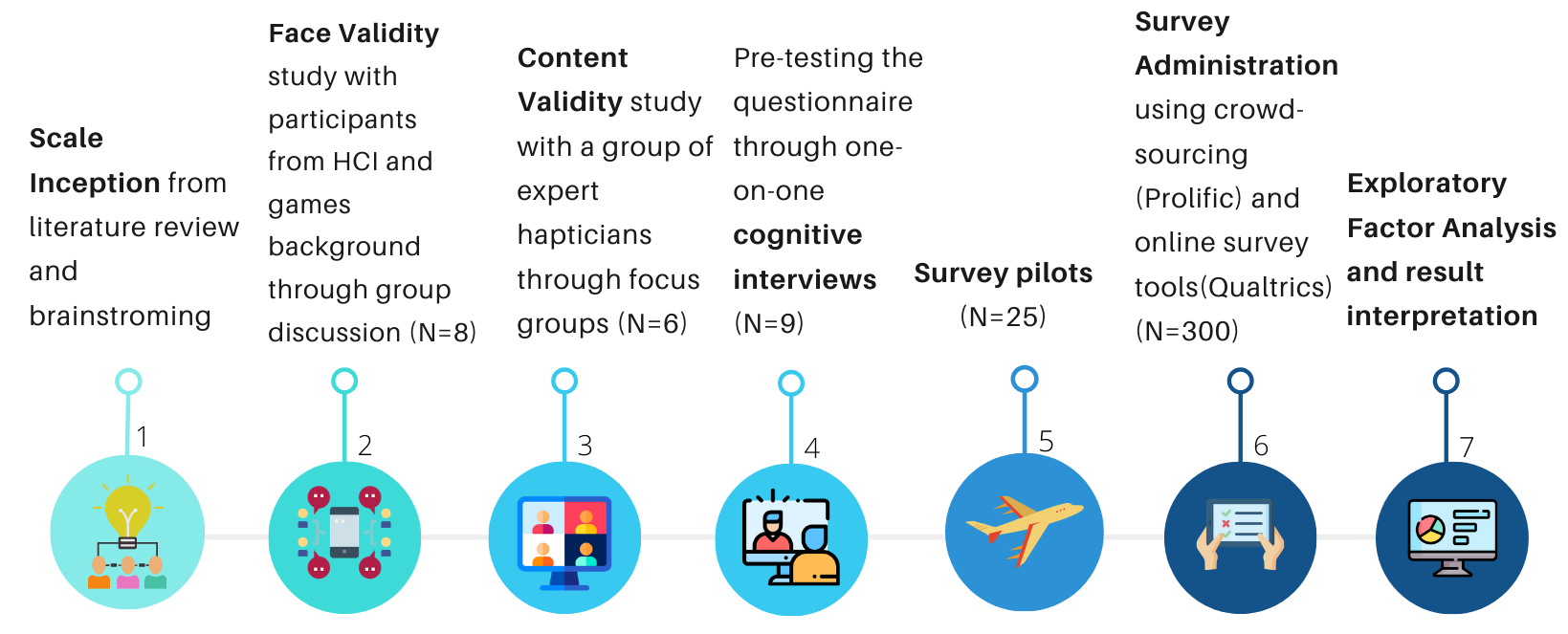

We conducted the first steps of scale development to assess how the HX model fits different devices and applications, understand users’ expectations and emotions, and analyze whether the HX model is supported by empirical evidence. Using the results of a series of 5 user studies and exploratory factor analysis (EFA), we developed an initial draft of a measurement instrument, and here report on the theoretical and practical findings.

We focused on vibrotactile devices to enable a remote study, but are actively expanding our findings with other devices. Please note that the questionnaire is still being developed, and not ready for deployment. We will provide instructions once we have a validated instrument.

Evidence for a 5-factor HX model

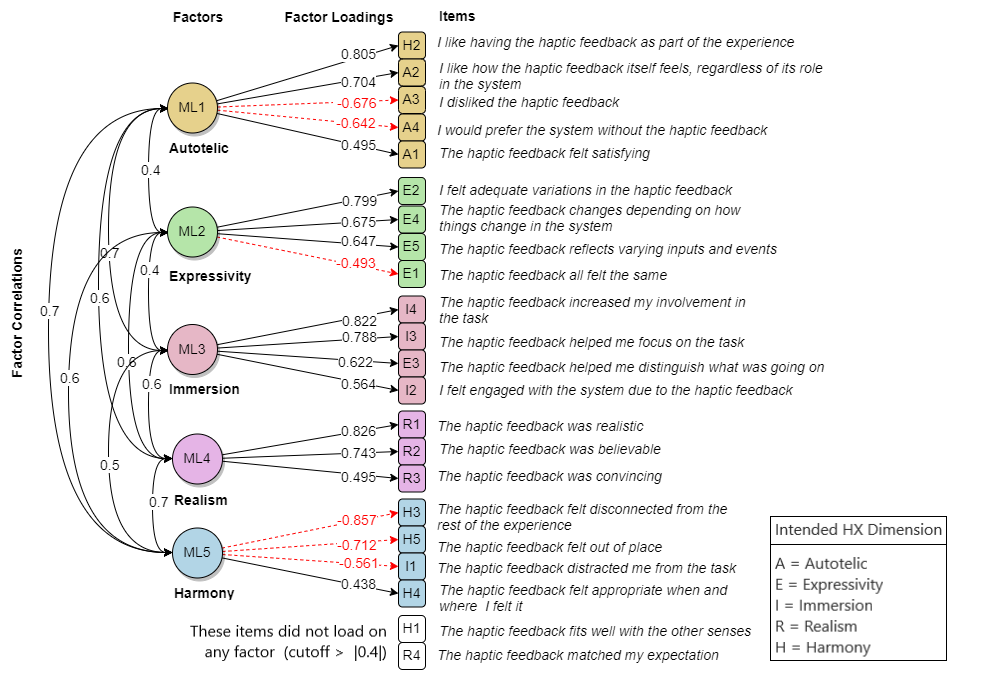

The results from EFA closely resemble the proposed theoretical HX model: the best-performing model had 5 factors, each of which closely relates to one HX factor. This suggests that the underlying factors of the HX model are distinct (though related) entities that can be measured. While there might be concerns that this was a “re-discovery” of the 5 factors we used to design the items, best practices in scale development suggest starting with a strong theoretical basis.
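To make the idea of items "relating to" a factor concrete, here is a minimal sketch of how an EFA loading matrix is commonly read: each item is assigned to the factor on which it loads most strongly, and sizeable secondary loadings are flagged. The item names, the loading values, and the 0.30 salience cutoff are all assumptions made for illustration; they are not the study's results.

```python
# Hypothetical loading matrix: one row per item, one column per HX factor.
# All numbers below are invented for illustration, NOT the study's loadings.
FACTORS = ["Autotelic", "Expressivity", "Immersion", "Realism", "Harmony"]

LOADINGS = {
    "A1": [0.81, 0.10, 0.12, 0.05, -0.08],
    "E1": [0.09, 0.66, 0.21, 0.03, -0.02],
    "E2": [0.07, 0.18, 0.58, 0.11, -0.04],  # written for Expressivity
    "I1": [0.15, 0.12, 0.72, 0.09, -0.10],
    "R1": [0.11, 0.04, 0.35, 0.62, -0.06],
    "H1": [-0.05, -0.03, -0.09, -0.02, 0.70],
}


def assign_items(loadings, factors, salient=0.30):
    """Map each item to (primary factor, list of cross-loading factors).

    The primary factor is the one with the largest absolute loading.
    `salient` is an assumed cutoff for reporting secondary loadings.
    """
    result = {}
    for item, row in loadings.items():
        strengths = [abs(value) for value in row]
        primary = strengths.index(max(strengths))
        cross = [
            factors[i]
            for i, strength in enumerate(strengths)
            if i != primary and strength >= salient
        ]
        result[item] = (factors[primary], cross)
    return result


assignment = assign_items(LOADINGS, FACTORS)
for item, (primary, cross) in assignment.items():
    note = f" (also loads on {', '.join(cross)})" if cross else ""
    print(f"{item}: {primary}{note}")
```

In this made-up table, the item `E2` was written for Expressivity but lands on Immersion, which is the kind of pattern that prompts a researcher to revisit a construct's definition.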

Enriched description of the HX model’s constructs

Autotelic (feeling good in itself): Autotelic turned out to be the strongest factor, with the greatest influence on its variables. The high factor loadings of all its items indicate how important it is to design feedback that is likable and satisfying to the user from the sensation alone. While Autotelic by definition means that the feedback feels good in and of itself, the factor loadings indicate that the context of the system might significantly influence the likability of the feedback. These results suggest that, for an application to have a good autotelic experience, it necessarily requires context and cannot be considered in isolation.

Expressivity (variety of output): One item intended for expressivity (“The haptic feedback helped me distinguish what was going on”) loaded on Immersion instead of Expressivity, suggesting that Expressivity has more to do with variance of feedback than causality, and that feedback of someone’s actions is connected to Immersion. We thus suggest that Immersion “allows users to feel their input make an impact on the feedback received” [9], not Expressivity.

Immersion (being immersed in an environment): All positive items of Immersion loaded together, confirming our existing understanding of the dimension. We conclude that increased involvement, focus, and engagement are indicators of an immersive experience. This lends support to the idea that when users can both affect and be affected by the system, they become more immersed in it (as described by the concept of Presence).

Realism (believable output): One interesting question raised during the content validity discussion was about realism vs believability. Some of the experts argued that believability might be a construct of its own, while others agreed that it is mostly an aspect of realism. Since R2 (“The haptic feedback was believable”) has a high factor loading of 0.743, we suggest that “realism” and “believability” are closely linked, since “believability” varies with realism. However, we do not yet have enough information to suggest which might be primary. As “realistic” is a higher-loading item than “believable” and the label is consistent with the HX model, we decided to label this factor “realism”; the debate continues.

Harmony (linked with system, other senses): The Harmony factor originally loaded with 3 negative items and 1 positive item. All the negative items were positively correlated with the factor and the positive one was negatively correlated, so the factor as extracted was in fact the opposite of the construct Harmony. We decided to label this factor Harmony nonetheless, since it simply appears to be dominated by negatively worded items and can be interpreted with all signs flipped. In other words, Harmony might be detectable from the absence of disruptive feedback. For example, if the haptic feedback does not feel “disconnected” from the system or “out of place,” it may be harmonious.
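Interpreting a factor "with all signs flipped" corresponds in practice to reverse-scoring the negatively worded items before averaging. The sketch below illustrates this under stated assumptions: a 7-point agreement scale, hypothetical item identifiers `H1` to `H4`, and made-up responses. The source does not specify the response format, so treat these values as placeholders.

```python
# Assumed response format: 7-point agreement scale (1 = strongly disagree).
SCALE_MIN, SCALE_MAX = 1, 7

# Hypothetical Harmony items; True marks a negatively worded item
# (e.g. feedback feeling "disconnected" or "out of place").
HARMONY_ITEMS = {"H1": True, "H2": True, "H3": True, "H4": False}


def reverse_score(value, low=SCALE_MIN, high=SCALE_MAX):
    """Mirror a rating around the scale midpoint (1 <-> 7, 2 <-> 6, ...)."""
    if not low <= value <= high:
        raise ValueError(f"rating {value} is outside {low}..{high}")
    return low + high - value


def harmony_score(responses):
    """Average the Harmony items after reverse-scoring the negative ones,
    so that a higher score means more harmonious feedback."""
    adjusted = [
        reverse_score(responses[item]) if negative else responses[item]
        for item, negative in HARMONY_ITEMS.items()
    ]
    return sum(adjusted) / len(adjusted)


# Made-up respondent who disagrees that the feedback felt disruptive.
example = {"H1": 2, "H2": 1, "H3": 3, "H4": 6}
print(harmony_score(example))  # 6.0
```

Low agreement with the three negative items yields a high Harmony score here, matching the reading that harmony shows up as the absence of disruptive feedback.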

Practical guidelines for quantitatively measuring HX

1) A questionnaire is viable for measuring HX: We repeatedly debated whether a questionnaire was the right choice for evaluating HX. Measuring experience is difficult. Focusing on a particular type of feedback while keeping in mind the overall experience is even more challenging. Through brainstorming and reviewing work around measurement instruments, we were convinced that a well-designed questionnaire can collect systematic data of user emotions and expectations about a system or design. We found participants typically interacted with haptic experience for 5 minutes, with the entire task and questionnaire taking 10 minutes. This suggests that participants can complete a 20-question instrument in less than 5 minutes, suitable for evaluating systems without being too onerous on participants. However, approximately 12% of our survey participants reported that they did not feel any haptic feedback in the guided task and their responses consisted only of neutral options. Measurement of HX may only be applicable for applications with a prominent haptic feedback component, or when participants direct their attention to the haptics.

2) “Haptic feedback” needs to be defined for general participants: We initially referred to the unit measured as “feedback”, in order to keep it generic and simple. However, “feedback” was ambiguous, as participants were confused about what feedback we were referring to. We then decided to change it to “haptic feedback” based on input from experts. This change could potentially make the questionnaire difficult to comprehend for novice participants: 57.7% of our survey participants had not heard of the term or were unsure of what it means. Thus, we included a simple definition of haptic feedback (as described in Results). Responses from 82.9% of survey respondents indicated that defining haptic feedback was necessary to help them understand the items.

3) Not all factors apply in all contexts: In order to build our studies around measuring haptics, we had to provide participants with applications that are experienced as a system, but also have recognizable haptic feedback. One example is watching a haptic ad, which is very similar to watching any video with sounds and visuals. We conducted an initial screening with smartwatches and fitness tracking devices and discovered that not all our constructs may apply to simpler applications, such as a timer or a “step goal reached” alert. Some applications don’t have a real-world equivalent, so “believability”, “realistic” and “convincing” seem irrelevant to the experience, or confusing; one participant interpreted “believable” as believing that the vibration came from their FitBit. Thus, any resulting questionnaire should ideally be designed with subscales that can be selected depending on the needs of the researcher and the context of the study.
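The guidelines above can be sketched as a small scoring routine: flag respondents who chose only the neutral option (as roughly 12% of our survey participants did), and score only the subscales a researcher selects for the context. This is an illustrative sketch, not the instrument itself. The item identifiers, the two-items-per-subscale layout, the 7-point scale, and the example responses are all hypothetical, and responses are assumed to be already coded so that higher means more of the construct.

```python
from statistics import mean

NEUTRAL = 4  # midpoint of an assumed 7-point scale

# Hypothetical item-to-subscale mapping; the real instrument is not final.
SUBSCALES = {
    "Autotelic": ["A1", "A2"],
    "Expressivity": ["E1", "E2"],
    "Immersion": ["I1", "I2"],
    "Realism": ["R1", "R2"],
    "Harmony": ["H1", "H2"],
}


def is_all_neutral(responses, neutral=NEUTRAL):
    """True when every answer is the neutral option, which may indicate
    that the participant did not notice any haptic feedback."""
    return bool(responses) and all(v == neutral for v in responses.values())


def score_subscales(responses, selected):
    """Return the mean rating for each selected subscale only."""
    return {
        name: mean(responses[item] for item in SUBSCALES[name])
        for name in selected
    }


# Made-up respondent evaluating a "step goal reached" alert, where
# Realism items may not apply and are therefore left out of the selection.
respondent = {
    "A1": 6, "A2": 5, "E1": 3, "E2": 4, "I1": 5,
    "I2": 5, "R1": 4, "R2": 4, "H1": 6, "H2": 7,
}
selected = ["Autotelic", "Harmony"]

if is_all_neutral(respondent):
    print("All-neutral response: review before scoring")
else:
    print(score_subscales(respondent, selected))
```

Whether all-neutral responses should be excluded or analysed separately is a study design decision; the sketch only flags them.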