Automation and Machine Learning

The word Luddite originated in England in the early 1800s. During this period, faster and cheaper textile machinery was introduced that could replace more skilled workers. In response, workers destroyed the machines to defend their trade and fair wages; they became known as Luddites, after Ned Ludd, a possibly legendary weaver said to have smashed knitting frames. To the original Luddites’ disappointment, automation has since woven itself into our means of production. Today, the term Luddite has shifted to signify opposition to technological change in general, whereas the original movement fought for workers’ rights in the face of technological development.

One form of automation that is currently a major topic of discussion is generative AI. Misinformation and distrust about machine learning spread across media platforms, pushing the modern meaning of Luddite further toward resistance to technological innovation. This blog post will not be an extensive discussion of artificial intelligence in society; instead, it looks at a 1984 perspective on AI through the book Artificial Intelligence on the Commodore 64 by Keith and Steven Brain.

Artificial Intelligence in 1984

In science fiction, artificial intelligence often takes on an anthropomorphic role, with conflict arising when Isaac Asimov’s Three Laws of Robotics are broken:

- The First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- The Second Law: A robot must obey the orders given to it by human beings except where such orders would conflict with the First Law.

- The Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The 1968 film 2001: A Space Odyssey illustrates this through HAL 9000, the artificial intelligence antagonist of the film. HAL can process natural language, weigh decisions, interpret emotions and art, and pilot the spacecraft, but eventually turns against the crew when they plan to shut him down.

In reality, one of the best-known conversational programs of the time was ELIZA, written by Joseph Weizenbaum at MIT in the mid-1960s to explore communication between humans and computers. ELIZA is often cited as one of the first programs able to attempt the Turing Test, which measures whether a computer’s conversation can be distinguished from a human’s.

Coding Artificial Intelligence in BASIC

This raises the question of what stands between ELIZA and something as advanced as HAL. Artificial Intelligence on the Commodore 64 covers the methods, and the problems, that arise when programming a simple form of artificial intelligence in BASIC.

One of the first issues is the communication barrier between machines and natural language. A programmer must account for every detail: punctuation, the types of words and their tenses (verbs, objects, adjectives, adverbs...), and where words occur in a sentence, along with all the grammatical exceptions in the English language.
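To see why punctuation alone trips up a simple program, here is a minimal keyword-matching sketch in Python rather than the book’s BASIC. The keyword table, the replies, and the names `normalize` and `respond` are hypothetical illustrations, not code from the book; the only point is that input has to be cleaned up before any word can be matched.

```python
import string

# Hypothetical keyword table: word -> canned reply (not taken from the book)
KEYWORDS = {
    "hello": "HELLO. HOW ARE YOU?",
    "sad": "WHY DO YOU FEEL SAD?",
    "computer": "DO COMPUTERS WORRY YOU?",
}


def normalize(sentence):
    """Lower-case the input and strip punctuation so 'Sad.' can match 'sad'."""
    cleaned = sentence.lower().translate(str.maketrans("", "", string.punctuation))
    return cleaned.split()


def respond(sentence):
    """Reply to the first known keyword, scanning the words left to right."""
    for word in normalize(sentence):
        if word in KEYWORDS:
            return KEYWORDS[word]
    return "PLEASE GO ON."  # fallback when nothing is recognized


print(respond("Hello, computer!"))     # HELLO. HOW ARE YOU?
print(respond("I feel sad."))          # WHY DO YOU FEEL SAD?
print(respond("The weather is nice"))  # PLEASE GO ON.
```

Without the punctuation-stripping step, “sad.” would never match “sad”, which is exactly the kind of detail the book makes the programmer handle by hand.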

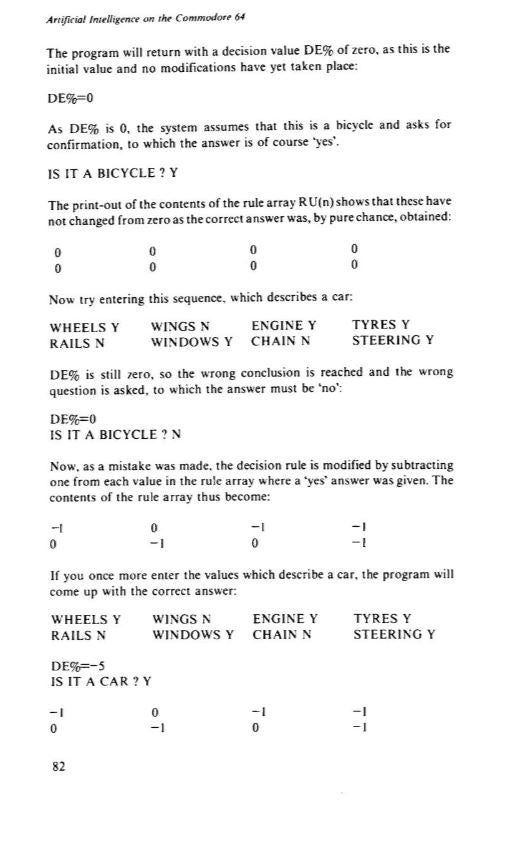

At this point, “our computer has displayed only slightly more intelligence than a parrot,” repeating simple, fixed output in response to input (Brain, p.52). For the program to reach even a bare minimum of usefulness, many lines of BASIC code must be filled with data, a process similar to training a machine learning model today. One benefit of the computer is that it “does not make subjective judgements, become bored, or accidentally forget to check all the information in its memory,” yet the programmer still needs to train the computer to compare the data it has been given (Brain, p.66). The text follows a method in which the information is provided in DATA statements that are read into arrays: the computer makes a guess, which the programmer then corrects. If the guess is correct, it is stored as 0; if it is incorrect, the incorrect aspects are stored as -1, gradually building up a bias and a set of rules.
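One possible reading of this guess-and-correct scheme is sketched below in Python. The animal data, the categories, and the functions `guess` and `train` are hypothetical, and the book’s actual BASIC listing may store its rules differently. Here every weight starts at 0, a correct guess leaves the weights at 0, and every feature involved in a wrong guess is marked -1 against the category that was wrongly chosen.

```python
# Hypothetical training examples, standing in for BASIC DATA statements:
# each entry is (features of an animal, the answer the programmer supplies).
TRAINING_DATA = [
    ({"feathers", "wings"}, "bird"),
    ({"fur", "legs"}, "mammal"),
    ({"feathers", "legs"}, "bird"),
    ({"fur", "wings"}, "mammal"),  # a bat: wings alone are not enough
]
CATEGORIES = ["mammal", "bird"]


def guess(rules, features):
    """Score each category by summing its stored weights for the given
    features; the highest score wins, and ties go to the first category."""
    return max(CATEGORIES, key=lambda c: sum(rules[c].get(f, 0) for f in features))


def train(data, passes=3):
    """Guess, get corrected, adjust. Returns the rule table and the number
    of wrong guesses made on each pass."""
    rules = {c: {} for c in CATEGORIES}  # every weight implicitly starts at 0
    mistakes_per_pass = []
    for _ in range(passes):
        mistakes = 0
        for features, answer in data:
            predicted = guess(rules, features)
            if predicted != answer:
                # Wrong guess: mark each feature -1 against the guessed category.
                for f in features:
                    rules[predicted][f] = rules[predicted].get(f, 0) - 1
                mistakes += 1
            # Correct guess: the weights are left unchanged (the "0" case).
        mistakes_per_pass.append(mistakes)
    return rules, mistakes_per_pass


rules, mistakes = train(TRAINING_DATA)
print(mistakes)                    # [2, 0, 0]
print(guess(rules, {"feathers"}))  # bird
```

On the first pass the computer makes two wrong guesses; after the programmer’s corrections it classifies every example correctly. The -1 marks act as the bias described above, steering later guesses away from conclusions that have already been corrected.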

Training the Computer

Keith and Steven Brain. Artificial Intelligence on the Commodore 64, page 82. 1984.

A more conventional way to mimic problem solving is to feed the computer both information and conclusions, so that it creates rules on its own.

The programmer can add other elements depending on the computer’s purpose, such as Soundex coding, which keeps the initial letter of a word and converts the following consonants into a numerical code, so that similar-sounding words in the user’s input are grouped together and spelling mistakes cause fewer misunderstandings. For a program used for learning purposes, one would train the computer following a method similar to the one pictured. The computer would build a bias (stored in an array) from the questions answered correctly versus incorrectly, and then increase the difficulty in certain areas.
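Soundex itself is short enough to sketch. The Python below follows the standard American Soundex rules (keep the first letter, code the remaining consonants as digits, merge repeated codes, pad to four characters); the book’s BASIC version may differ in its details. The vocabulary list and the `match` helper are hypothetical.

```python
# Standard American Soundex digit groups (vowels, h, w and y get no digit)
CODES = {}
for letters, digit in [("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")]:
    for ch in letters:
        CODES[ch] = digit


def soundex(word):
    """Return the four-character Soundex code for a word, e.g. 'R163'."""
    word = "".join(ch for ch in word.lower() if ch.isalpha())
    if not word:
        return ""
    result = word[0].upper()       # the first letter is kept as a letter
    prev = CODES.get(word[0], "")  # its digit still blocks an identical next digit
    for ch in word[1:]:
        code = CODES.get(ch, "")
        if code and code != prev:
            result += code
            if len(result) == 4:
                break
        if ch not in "hw":
            # A vowel resets prev to "", so a repeated digit after it is coded
            # again; h and w are skipped without resetting.
            prev = code
    return result.ljust(4, "0")


# Hypothetical vocabulary the program understands
KNOWN_WORDS = ["computer", "salesman", "memory"]


def match(user_word):
    """Return every known word that shares the user's Soundex code."""
    code = soundex(user_word)
    return [w for w in KNOWN_WORDS if soundex(w) == code]


print(soundex("Robert"), soundex("Rupert"))  # R163 R163
print(match("computr"))                      # ['computer']
```

Because “computr” and “computer” both encode to C513, the misspelling still reaches the right word, which is how Soundex prevents miscommunication from spelling.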

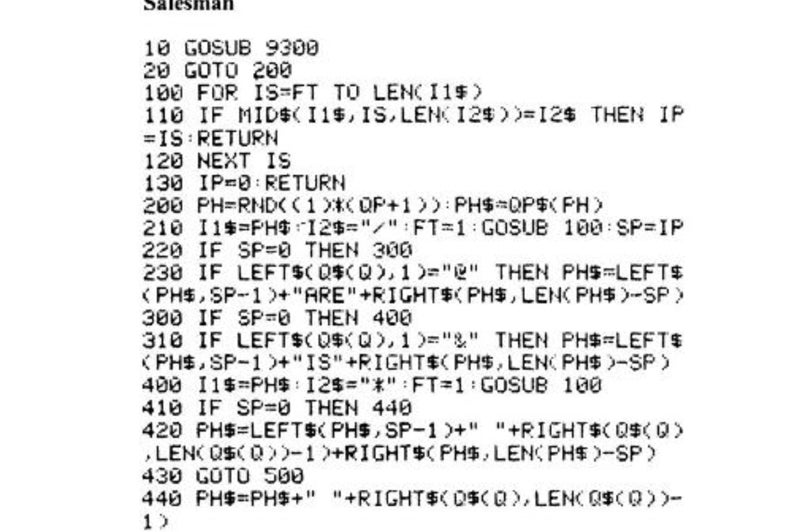

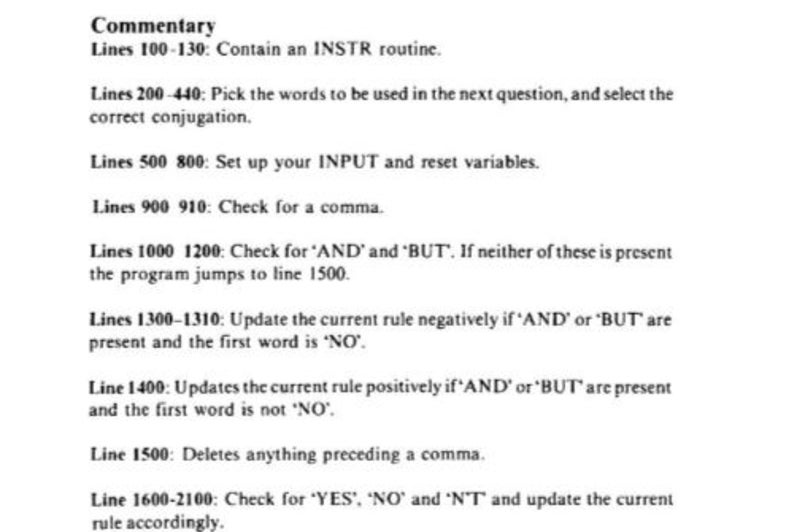

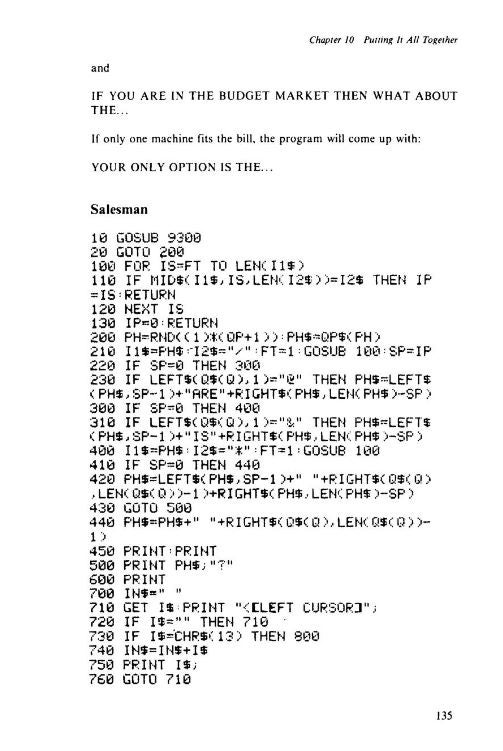

The Computer Salesman

The authors leave the reader with a six-page artificial intelligence program to try on their own, titled the Computer Salesman. The program stores costs and profits in separate arrays and reaches a conclusion using a corresponding array of entries (a phrase describing a feature, its cost, and its profit), weighing the consequences of each request. From the perspective of a current university student, reading this has made me extremely grateful that I am not spending countless hours correcting my BASIC code.
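To give a flavour of the parallel-array approach, here is a heavily simplified Python sketch. The features, prices, profit figures, the `min_profit` threshold, and the accept/decline rule are all invented for illustration and are not taken from the book’s listing; only the idea of using one index to look up the same feature across several arrays comes from the description above.

```python
# Hypothetical parallel arrays in the style of BASIC DIM statements: the same
# index refers to the same feature in all three. All figures are invented.
FEATURES = ["extra memory", "disk drive", "printer", "free delivery"]
COSTS = [40, 150, 120, 0]    # price the customer pays for each feature
PROFITS = [30, 60, 45, -25]  # what the salesman earns (or loses) on it


def consider(requests, min_profit=50):
    """Total the cost and profit of the customer's requests and decide
    whether the deal is worth making (hypothetical rule: profit >= min_profit)."""
    total_cost = 0
    total_profit = 0
    for request in requests:
        if request not in FEATURES:
            continue  # unknown requests are simply ignored in this sketch
        i = FEATURES.index(request)  # one index looks up all three arrays
        total_cost += COSTS[i]
        total_profit += PROFITS[i]
    verdict = "ACCEPT" if total_profit >= min_profit else "DECLINE"
    return total_cost, total_profit, verdict


print(consider(["disk drive", "free delivery"]))  # (150, 35, 'DECLINE')
print(consider(["extra memory", "printer"]))      # (160, 75, 'ACCEPT')
```

Keeping the data in matching arrays mirrors how a 1984 BASIC program would have to do it, since there were no record or dictionary types to bundle a feature with its cost and profit.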

To read more of the code (pages 135 to 142), view the entire text here.

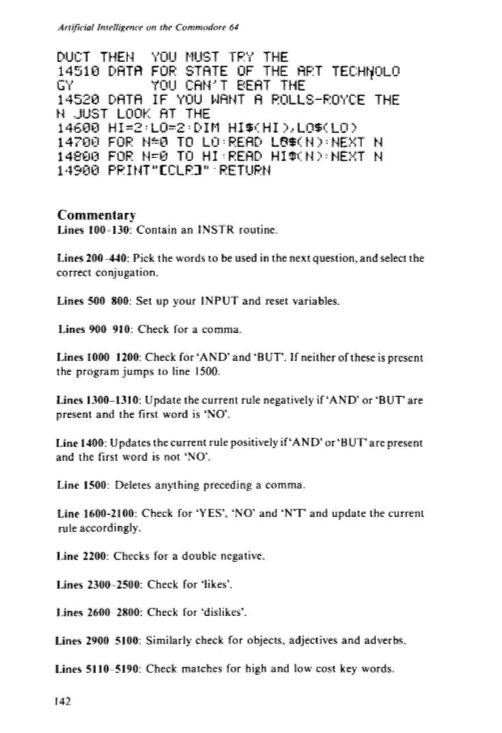

A Sample of The Computer Salesman Code

1984 vs Modern Machine Learning

Seeing the amount of detail that went into a 1980s program with only mediocre communication abilities lessens my resistance to artificial intelligence and helps me better understand modern machine learning. Within their eerily accurate prediction of modern artificial intelligence, the authors suggest that a Luddite should stand for workers’ rights in the face of technological development. Perhaps, if a day comes when a HAL-like machine takes over humanity, its natural language abilities will be so developed that humanity will have to communicate in the now long-dead language of BASIC.

A Final Note From the Authors

Keith and Steven Brain. Artificial Intelligence on the Commodore 64, page 142. 1984.

About the Author

Charlotte is a Physics and Astronomy student currently in her 2B term at Waterloo. She enjoys tinkering and creating all forms of art in her free time. She is the Computer Museum’s Winter 2024 co-op student.