The current research program has two major streams. The first is basic research in risk and reliability analysis and probabilistic mechanics. The second, equally important, is industrial research on the life cycle management of nuclear plants.

- Objectives and structure

- Stochastic process models for aging management

- Degradation prediction models

- Maintenance management models

- Efficient meta-modeling for system reliability analysis

- Uncertainty analysis in fitness for service assessments

- Risk aggregation due to multiple hazards for site-wide risk assessment

- Reliability analysis of large structural systems

- Advanced Bayesian models for reliability analysis

- Advancement of seismic PRA

Objectives and structure

The long-term objectives of the research program are to:

- Advance the fundamental understanding of probabilistic analysis techniques that are relevant to estimating reliability and remaining life of critical engineering systems;

- Formulate methods, tools, and models for optimizing inspection, maintenance and replacement strategies that will ensure the economic life cycle performance and safety of engineering systems;

- Develop mechanics, material modelling, and computational methods relevant to risk assessment of practical engineering problems; and

- Integrate nuclear asset management with modern theories of investment and financial decision making for the development of a streamlined life cycle management (LCM) model.

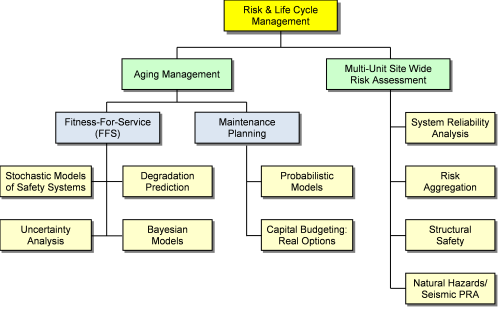

The overall structure of the program is shown in the figure below.

All the elements of the research program share a common foundation based on rigorous mathematical theory of probability and random processes, and rational principles of decision-making. Therefore, at the core of the research program is the development of mathematical methods and tools in the areas of stochastic processes, system reliability, and Bayesian modeling.

The theory of stochastic processes is a versatile approach for modeling the uncertainties inherent in the occurrence, progression rate, magnitude and duration of random events. For example, aging-related degradation of performance by corrosion, creep and fatigue requires stochastic modeling. External hazards like earthquakes, high winds and floods are also modeled as stochastic processes. System reliability analysis, such as probabilistic safety assessment (PSA), involves the analysis of complex functions of random variables and stochastic processes, in addition to sensitivity analysis. The ultimate objective of these analyses is to provide input to prudent and consistent decision making, which is facilitated by the Bayesian philosophy. The Bayesian approach is also useful for modeling parameter (or epistemic) uncertainty and updating predictions based on new information.

Stochastic process models for aging management

Systems, structures and components (SSCs) in a nuclear power plant (NPP) operate in a dynamic and stressful environment where damage and wear accumulate over time as a result of various degradation processes, e.g., corrosion, fatigue and creep [18]. Once the cumulative damage exceeds a critical threshold, it can lead to failure, or it may render the equipment unfit for service. To control the adverse effects of aging, nuclear utilities must develop and implement aging management plans in both the pre- and post-refurbishment life of the NPP.

Aging management is defined as

the engineering, operational, inspection, and maintenance actions that control, within acceptable limits, the effects of physical aging and obsolescence of SSCs occurring over time or with use.

The scope of aging management covers all those SSCs that can, directly or indirectly, have an adverse impact on the safe operation of the NPP.

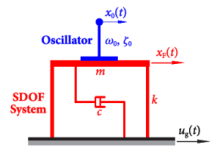

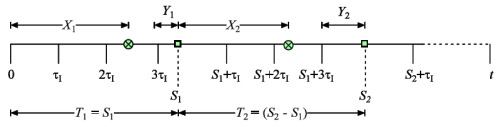

In this project, the main theme is the generalization of the stochastic alternating renewal process (ARP). In this model, a shock arrives at a random time and causes system failure. The time to repair is also a random variable. After repair, the system is put back into service.

In summary, a system’s operating life is an alternating sequence of up and down times. This versatile model is fundamental to the analysis of a number of problems related to the unavailability of safety systems and the probabilistic modelling of common cause failures (CCF).
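The alternating up/down behaviour described above can be illustrated with a minimal simulation sketch. It assumes exponentially distributed times to failure and times to repair, which is only the simplest special case and not the generalized model developed in the project; the function name `simulate_availability` and all numerical values are hypothetical.

```python
import random

def simulate_availability(mean_up, mean_down, horizon, seed=0):
    """Estimate the fraction of [0, horizon] during which the system is up."""
    rng = random.Random(seed)
    t = 0.0
    up_time = 0.0
    while t < horizon:
        # Up period: time until the next failure-causing shock
        # (assumed exponential for illustration).
        up = rng.expovariate(1.0 / mean_up)
        up_time += min(up, horizon - t)
        t += up
        if t >= horizon:
            break
        # Down period: random repair time (also assumed exponential).
        t += rng.expovariate(1.0 / mean_down)
    return up_time / horizon

# Hypothetical values: mean time to failure 1000 h, mean time to repair 10 h.
estimate = simulate_availability(mean_up=1000.0, mean_down=10.0, horizon=1_000_000.0)
# Long-run availability of an alternating renewal process: MTTF / (MTTF + MTTR).
limiting = 1000.0 / (1000.0 + 10.0)
print(estimate, limiting)
```

Over a long horizon the simulated fraction of up time should approach the limiting availability, and one minus that value is the long-run unavailability.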

Degradation prediction models

Although a wide range of degradation mechanisms, such as corrosion and fatigue, are well known, unexpected mechanisms are occasionally encountered. For example, the degradation of Inconel X750 alloy by transmutation and helium absorption reduces the strength of annulus spacers in CANDU fuel channels. The main theme of this project is developing and calibrating probabilistic models for challenging degradation mechanisms with limited data.

Examples of past and on-going work in this area include:

- Degradation of annulus spacers in fuel channels: probabilistic model for predicting the life expectancy of the spacers

- Stress corrosion cracking (SCC): new probabilistic approach for predicting the long-term behavior of Alloy 800 steam generator tubing

- Fatigue of welded joints: stochastic fatigue process model based on strain-based fracture mechanics (SBFM)

- Corrosion of buried piping: probabilistic models for corrosion in buried piping using field data from the industry

- Flow accelerated corrosion (FAC) of feeder piping: integration of inspection results into degradation rate and lifetime predictions

Maintenance management models

In the nuclear industry, time-based maintenance (TBM) policies are typically used to control the adverse effects of degradation on reliability. It is now realized that a TBM policy is not effective for the following reasons:

- Accelerated pace of degradation may cause failure before the maintenance,

- Systems with slow aging are maintained more frequently than necessary, which increases the cost of the maintenance programs, and

- Emerging degradation mechanisms cannot be intercepted in a timely manner.

Therefore, the main objective of this project is to develop more practical tools to guide maintenance programs and reduce the impact of degradation in a cost-effective manner.

Most of the literature on maintenance modeling is based on the expected (average) cost of a program. The expected cost says nothing about the potential deviations or variability in the overall cost. Therefore, we have developed a comprehensive reformulation of the life cycle cost analysis by analytically deriving the full probability distribution of the damage cost. A special case of this model has been applied to seismic risk analysis.
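The gap between an expected cost and a full cost distribution can be illustrated with a small Monte Carlo sketch. This is not the analytical derivation mentioned above; it assumes, purely for illustration, that damage events arrive as a homogeneous Poisson process and that each event cost is exponentially distributed. The function name and all parameter values are hypothetical.

```python
import random
import statistics

def simulate_total_cost(rate, horizon, cost_mean, n_runs=20000, seed=1):
    """Sample the total damage cost accumulated over a planning horizon."""
    rng = random.Random(seed)
    totals = []
    for _ in range(n_runs):
        t, total = 0.0, 0.0
        while True:
            # Exponential inter-arrival times give Poisson event arrivals.
            t += rng.expovariate(rate)
            if t > horizon:
                break
            # Cost of one event (assumed exponential with mean cost_mean).
            total += rng.expovariate(1.0 / cost_mean)
        totals.append(total)
    return totals

# Hypothetical inputs: 0.1 events per year, 40-year horizon, mean cost 5 units.
totals = simulate_total_cost(rate=0.1, horizon=40.0, cost_mean=5.0)
mean_cost = statistics.fmean(totals)
# quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
p95_cost = statistics.quantiles(totals, n=20)[-1]
print(mean_cost, p95_cost)
```

Under these assumptions the mean is close to rate × horizon × mean event cost, while the 95th percentile sits well above it, which is the kind of information an expected-cost analysis alone does not provide.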

Efficient meta-modeling for system reliability analysis

A reliability analysis in general involves the evaluation of the random response (or performance) of a system, which typically depends on a vector of random variables, via a functional relationship. The solution of such a problem has two key elements:

- Probabilistic properties of the performance, namely, mean, variance and the distribution of the response, and

- Sensitivity of the performance to the input random variables.

For practical nuclear plant systems, the performance function is a complex, nonlinear and implicit function whose evaluation requires intensive numerical modeling and computation (such as a finite element code). In such cases, neither textbook analytical methods nor the crude Monte Carlo method is practical.

This project involves the further development and application of a simpler “meta model” approach based on the multiplicative dimensional reduction method (MDRM). In MDRM, a complex reliability problem is represented by a meta (or surrogate) model with a much simpler analytical structure, which allows the computation of moments and the distribution of the response. The sensitivity of the response to the input random variables is a by-product of the analysis. Novel applications of this model include fire safety analysis and the reliability analysis of machine tools.
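As a rough illustration of the idea, the sketch below approximates a moment of the response by replacing the full function with a product of one-dimensional "cut" functions evaluated around a reference point, and integrating each cut with Gauss-Hermite quadrature. It assumes independent normal inputs, the mean vector as the cut point, and a response that is positive at that point; the function name `mdrm_moment` and the test function are hypothetical and are not taken from the project's software.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def mdrm_moment(h, means, stds, k=1, n_points=9):
    """Approximate E[h(X)^k] for independent normal inputs with a product surrogate."""
    means = np.asarray(means, dtype=float)
    stds = np.asarray(stds, dtype=float)
    n = len(means)
    # Nodes and weights for the weight function exp(-z^2 / 2).
    nodes, weights = hermegauss(n_points)
    weights = weights / np.sqrt(2.0 * np.pi)  # normalize to the standard normal density
    h0 = h(means)  # response at the cut point (assumed nonzero)
    moment = h0 ** (k * (1 - n))
    for i in range(n):
        vals = []
        for z in nodes:
            x = means.copy()
            x[i] = means[i] + stds[i] * z  # vary one input, hold the others at the cut point
            vals.append(h(x) ** k)
        moment *= np.dot(weights, vals)  # one-dimensional expectation of the i-th cut
    return moment

# Hypothetical response with a product structure, for which the surrogate is exact.
response = lambda x: np.exp(x[0] + 0.5 * x[1])
approx_mean = mdrm_moment(response, means=[0.0, 0.0], stds=[1.0, 1.0])
exact_mean = np.exp(0.5 * (1.0 + 0.25))  # mean of a lognormal variable
print(approx_mean, exact_mean)
```

The sketch needs 1 + n × `n_points` evaluations of the response instead of a full grid over all inputs, which is the practical appeal when each evaluation is an expensive numerical run.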

Uncertainty analysis in fitness for service assessments

The integrity of the primary heat transport piping system is critical to assuring the safety of a nuclear plant. The piping integrity can be challenged by aging-related degradation mechanisms, such as delayed hydride cracking in fuel channels, flow accelerated corrosion in feeder pipes, and stress corrosion cracking of dissimilar metal welds.

Traditionally, leak before break (LBB) assessment has been used to demonstrate that a through-wall flaw will first cause a leak, which can be detected fast enough for the reactor to be safely shut down before pipe rupture. This assessment has been purely deterministic, and has not considered the uncertainties associated with flaw generation, flaw growth, material properties, internal pressure and many other system variables. Against the backdrop of risk-informed decision making (RIDM), the conventional deterministic assessment is being replaced by a probabilistic formulation of fitness for service (FFS) assessment problems, including LBB.

The general objective is to develop a unified probabilistic framework for

- Structuring the FFS problem,

- Defining the methodology,

- Quantifying uncertainties,

- Developing acceptance criteria, and

- Establishing a compliance monitoring protocol.

A key innovation here is a method of uncertainty assessment to quantify the credibility of the analysis in terms of the statistical confidence that can be placed on the estimated risk. In the proposed method, a logical separation of aleatory and epistemic uncertainty is essential. This method has been applied to the leak-before-break assessment of nuclear piping, as well as the uncertainty analysis of non-destructive testing (NDT) methods.
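The separation of the two kinds of uncertainty can be sketched with a two-level calculation: an outer loop samples the uncertain parameter (epistemic), and for each sample the failure probability due to inherent randomness (aleatory) is evaluated. The sketch below is only an illustration under assumed distributions (a lognormal uncertainty on the failure rate and an exponential lifetime); it is not the method developed in the project, and the function name and numbers are hypothetical.

```python
import math
import random
import statistics

def risk_with_confidence(n_outer=2000, mission_time=1.0, seed=2):
    """Return the median and the 95% upper bound of the failure probability."""
    rng = random.Random(seed)
    probs = []
    for _ in range(n_outer):
        # Epistemic level: the failure rate itself is uncertain (assumed lognormal).
        rate = rng.lognormvariate(math.log(1e-3), 0.5)
        # Aleatory level: given the rate, failure within the mission time is random
        # (exponential lifetime, so the probability has a closed form).
        probs.append(1.0 - math.exp(-rate * mission_time))
    probs.sort()
    return statistics.median(probs), probs[int(0.95 * n_outer)]

median_risk, upper_risk = risk_with_confidence()
print(median_risk, upper_risk)
```

The output is a distribution of the risk estimate rather than a single number, so a statement such as "the failure probability is below the upper bound with 95% confidence" becomes possible.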

Risk aggregation due to multiple hazards for site-wide risk assessment

The main objective of this project is to develop a rational approach to the aggregation of risk arising from multiple and diverse hazards at a nuclear station. In the post-Fukushima climate, this project responds to the Canadian industry’s need for a sound methodology for whole-site risk assessment. This project will address challenges associated with risk aggregation of different hazard types (e.g., internal hazards, seismic, fire, flood, etc.) and risks due to multiple on-site radiological sources (i.e., multiple reactor units and spent fuel bays). All post-Fukushima Task Forces and Expert Panels have recognized the risk aggregation problem as the most pressing issue for the industry.

Recognizing that the currently used aggregation rules are based on a special case of the homogeneous Poisson process (HPP) model, a more general approach is developed based on the superposition of stochastic processes. A method for aggregating uncertain failure frequencies is also under development in this project.
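For reference, the standard superposition result for Poisson processes can be written as follows. The notation is an assumption made here for illustration: $N_i(t)$ counts events from hazard $i$ with intensity $\lambda_i(t)$, and the $m$ hazard processes are taken to be independent.

```latex
N(t) = \sum_{i=1}^{m} N_i(t), \qquad
\lambda(t) = \sum_{i=1}^{m} \lambda_i(t), \qquad
P\{N(t) = 0\} = \exp\!\left( -\sum_{i=1}^{m} \int_0^t \lambda_i(s)\, ds \right)
```

When every $\lambda_i$ is constant, this reduces to the HPP case, in which the aggregate frequency is simply the sum of the individual frequencies and $P\{N(t) = 0\} = \exp\!\left(-t \sum_i \lambda_i\right)$. Dependence between hazards or between units is not covered by this simple form.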

Reliability analysis of large structural systems

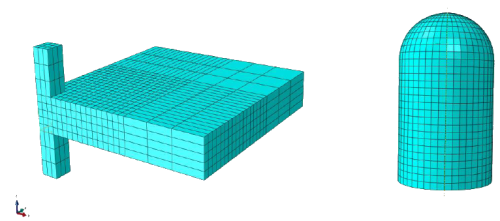

In a nuclear power plant (NPP), the concrete containment, the vacuum building, and the spent fuel bays are key barriers to the accidental release of radioactivity into the environment. In addition, concrete shear walls, footings, slabs, retaining walls and steel frames provide physical protection to the plant. Experience from the Fukushima earthquake has emphasized the need for high reliability of plant structures under beyond design basis accident (BDBA) conditions. In response to this need, Canadian utilities have undertaken a systematic review of structural safety in our nuclear plants. To support this endeavour, this project is intended to develop a framework for realistic reliability analysis of plant structures under general conditions of loading and non-linearity of structural response.

Example applications include a simulation module written in Python and interfaced with the ABAQUS package, which has facilitated accurate simulation-based reliability analysis. The multiplicative dimensional reduction method (MDRM), discussed above, has also been successfully integrated with the finite element software and applied to the analysis of large concrete structures.

Advanced Bayesian models for reliability analysis

As part of aging management, this project is developing a suite of Bayesian probabilistic models for the detection, monitoring and trending of degradation in a wide variety of nuclear plant systems. These models will help to formulate appropriate strategies and requirements for inspection, surveillance, testing, sampling, and monitoring programs. The need for inspection and measurement of degradation increases with the aging of reactor components. However, an increased scope of inspection means longer outage time and potentially adverse safety impacts on inspectors. Therefore, one of the main aspects of this project is developing more advanced methods for setting the sample size based on statistical decision theory and the concept of value of information. Example applications of this work include the detection of degraded spacers in fuel channels and the assessment of fretting of reactor instrumentation lines.
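A much simpler Bayesian calculation than the decision-theoretic approach described above gives a feel for how sample size and confidence interact. The sketch assumes a uniform Beta(1, 1) prior on the degraded fraction and an inspection campaign that finds no degraded items; under those assumptions the posterior is Beta(1, n + 1). The function name and the target values are hypothetical, and no value-of-information analysis is attempted.

```python
def min_sample_size(max_fraction, confidence):
    """Smallest n such that n clean inspections give
    P(degraded fraction <= max_fraction) >= confidence under a uniform prior."""
    n = 0
    while True:
        # Posterior Beta(1, n + 1): P(p <= p0) = 1 - (1 - p0) ** (n + 1).
        posterior_prob = 1.0 - (1.0 - max_fraction) ** (n + 1)
        if posterior_prob >= confidence:
            return n
        n += 1

# Hypothetical target: 95% posterior probability that at most 5% of items are degraded.
required = min_sample_size(max_fraction=0.05, confidence=0.95)
print(required)
```

A decision-theoretic treatment would go further by weighing the cost of each additional inspection against the expected benefit of the information gained.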

Degradation monitoring and trending models are also an important part of this project. The statistical models developed here will help to identify the presence of degradation and determine its rate of progression, so that corrective actions can be taken to prevent system failure. Technically, this work is based on change point detection models used in statistics. Example applications in this area include the detection of defective fuel bundles in an operating reactor, degradation of the fire retardant fluid (FRF) used in the steam turbine electro-hydraulic control system, and the statistical analysis and modelling of the change in reactor inlet header temperature (RIHT) over time. In addition to regression methods, a fairly original approach to these problems is currently being developed based on Empirical Mode Decomposition (EMD).
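The basic idea of change point detection can be sketched with a single shift in the mean of a monitored signal, located by least squares. This is a textbook simplification and not the models or the EMD-based approach developed in the project; the function name and the synthetic readings are hypothetical.

```python
import numpy as np

def detect_mean_shift(series):
    """Return the index that best splits the series into two constant-mean segments."""
    y = np.asarray(series, dtype=float)
    best_index, best_sse = None, np.inf
    for k in range(2, len(y) - 1):
        left, right = y[:k], y[k:]
        # Sum of squared errors when each segment is fitted by its own mean.
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if sse < best_sse:
            best_index, best_sse = k, sse
    return best_index

# Synthetic readings: the mean shifts by one unit after the 60th observation.
rng = np.random.default_rng(3)
readings = np.concatenate([rng.normal(265.0, 0.2, 60), rng.normal(266.0, 0.2, 40)])
change_index = detect_mean_shift(readings)
print(change_index)
```

Once the change point is located, the segment after it can be used to estimate the rate of progression, for example by fitting a trend to the post-change data.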

Advancement of seismic PRA

Seismic design loads for SSCs in older Canadian plants were estimated using the Design Basis Earthquake (DBE) response spectrum. The shape of this DBE response spectrum was defined by the prevailing practices and standards of the nuclear industry in the 1970s, which were highly influenced by California earthquake data. As the older CANDU stations are planning for refurbishment, a reevaluation of the seismic safety of these plants in line with modern international standards has been undertaken. As a lesson learned from the Fukushima earthquake, the Canadian Regulator has asked for the evaluation of seismic safety under BDBA conditions. The main objective of this project is to develop advanced tool sets for seismic PRA of systems, structures and components in CANDU plants.