The project objective is to develop methods for assuring the safety of systems that rely on machine learning, such as automated vehicles.

Machine Learning (ML) in Driving Automation

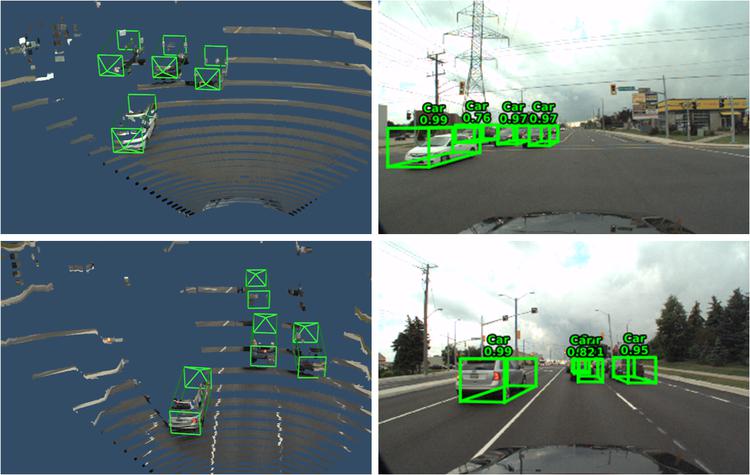

Figure 1 3D vehicle detection using AVOD, a deep network deployed on UW Moose

The use of machine learning (ML) is on the rise in driving automation systems, such as Advanced Driver Assistance Systems (ADAS) and Automated Driving Systems (ADS). ML is indispensable in the development of perception functions, such as vehicle and pedestrian recognition. For example, deep neural networks outperform classical computer vision methods for perception tasks, and current ADS rely on deep learning for perception (Figure 1). ML techniques, such as reinforcement learning, are also increasingly used to develop planning functions.

ISO 26262 Functional Safety Assurance of Automotive Software and Hardware

Safety assurance of automotive software and hardware has traditionally relied on the concept of functional safety assurance, which is the use of an adequate level of rigor in the application of

- hazard analysis methods to identify and assess hazards;

- best practices to reduce the risk of defects in specification, design and implementation that may cause these hazards;

- fault-tolerance methods to mask or mitigate software and hardware failures at runtime;

- verification methods, such as inspections, walkthroughs, static analysis, and testing, to check design and implementation against the safety requirements specification;

- validation testing to validate the safety measures at vehicle level, such as in closed-course and field tests;

in order to reduce the risk of injury due to software and hardware failures to a reasonable level.

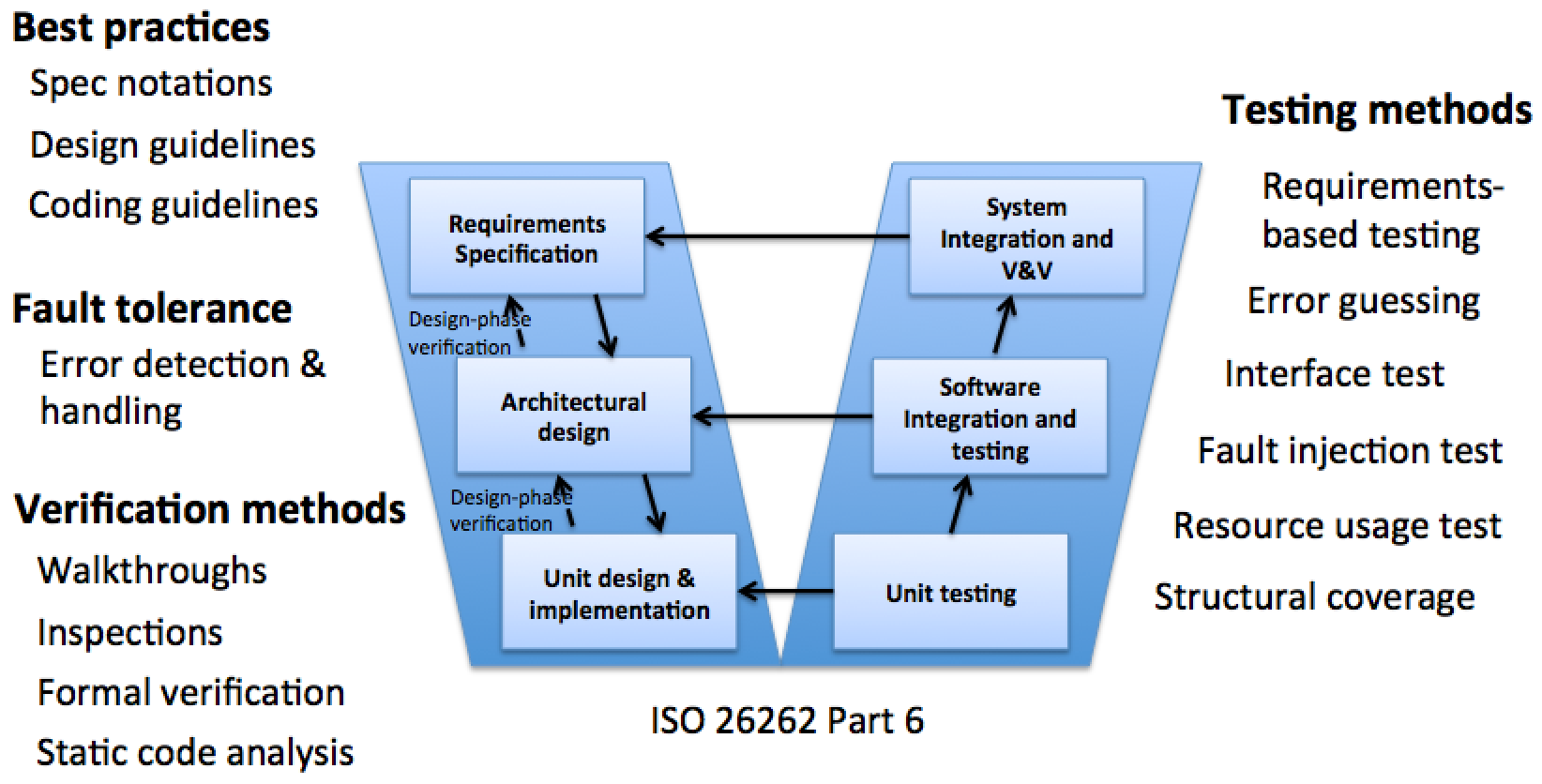

Figure 2 Summary of assurance methods in ISO 26262 Part 6

The automotive functional safety standard ISO 26262 defines a safety assurance process based on the V-model (Figure 2). It also defines the requirements that establish the adequate level of rigor depending on the criticality of a function under development, as classified by the so-called Automotive Safety Integrity Levels (ASILs). Figure 2 summarizes the assurance methods used in ISO 26262. The availability of a complete safety-requirements specification is a prerequisite to the successful application of most of the V&V methods in ISO 26262.
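As an illustration of how an ASIL is assigned, the sketch below follows the classification scheme of ISO 26262 Part 3, which this page does not describe: each hazardous event is rated for severity (S1-S3), exposure (E1-E4), and controllability (C1-C3). It relies on the commonly used shorthand that the sum of the three class indices reproduces the standard's lookup table. The function name `determine_asil` is hypothetical, and the standard itself remains the authoritative source.

```python
# Sketch of ASIL determination, assuming the class indices of ISO 26262 Part 3:
# severity S0-S3, exposure E0-E4, controllability C0-C3.
# Shorthand assumption: S + E + C reproduces the standard's lookup table.
ASIL_BY_SUM = {7: "A", 8: "B", 9: "C", 10: "D"}


def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Return "QM", "A", "B", "C", or "D" for one hazardous event."""
    if not 0 <= severity <= 3:
        raise ValueError("severity must be in 0..3")
    if not 0 <= exposure <= 4:
        raise ValueError("exposure must be in 0..4")
    if not 0 <= controllability <= 3:
        raise ValueError("controllability must be in 0..3")
    # Any class-0 rating means no ASIL is required; treated as QM here.
    if 0 in (severity, exposure, controllability):
        return "QM"
    # Sums below 7 fall outside the ASIL range and map to QM.
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")


if __name__ == "__main__":
    # Highest class in every dimension gives the most demanding level.
    print(determine_asil(3, 4, 3))
    # Low severity, exposure, and controllability classes need only QM.
    print(determine_asil(1, 1, 1))
```

The resulting level then selects how rigorously the assurance methods summarized in Figure 2 must be applied.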

Obstacles to Safety Assurance of ML-based Functions

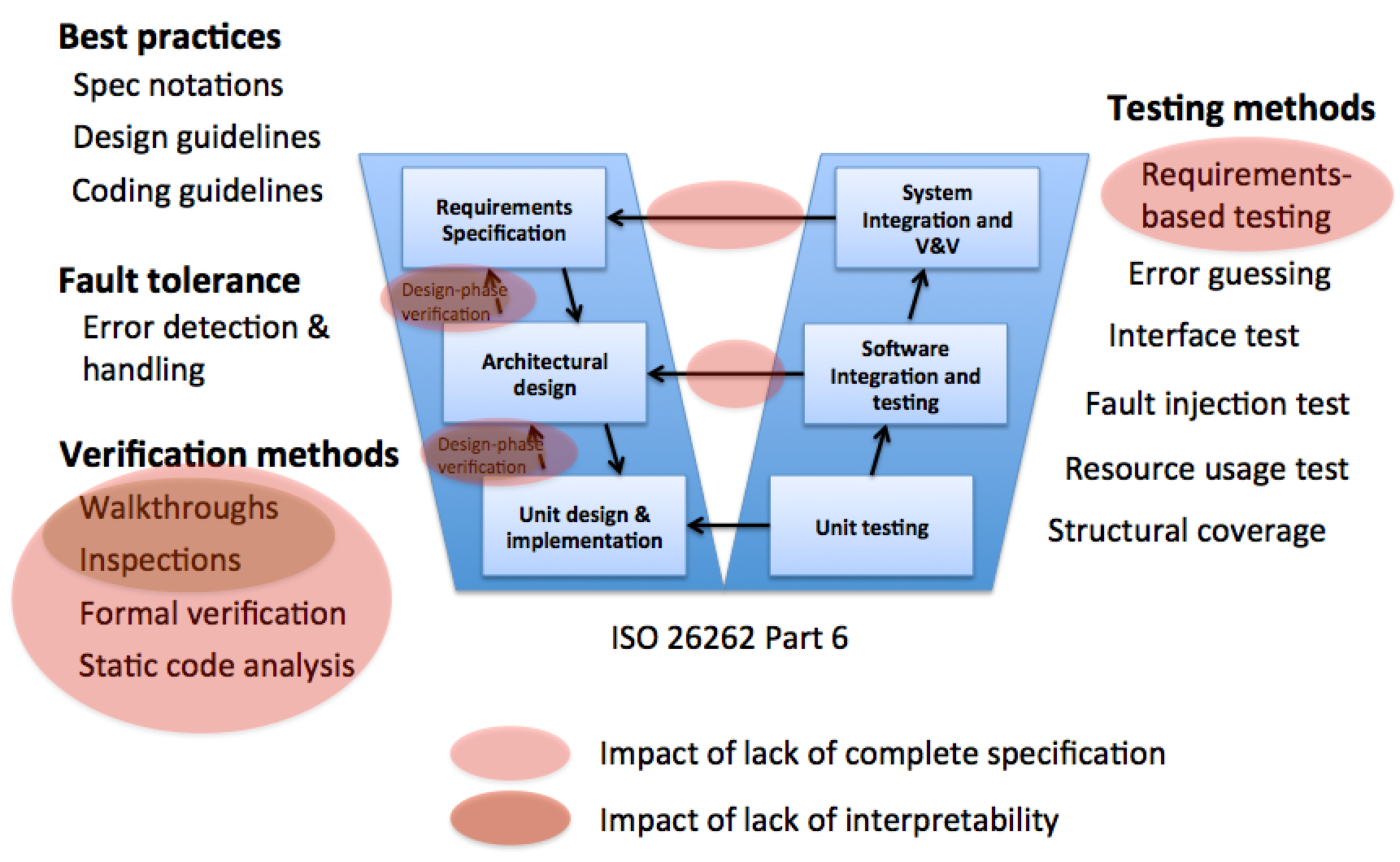

Figure 3 Verification methods in ISO 26262 Part 6 impacted by the lack of specification and interpretability of ML-based functions

Functions developed using machine learning (ML) pose two major obstacles to the application of functional safety assurance as defined in ISO 26262 (Figure 3):

- Lack of complete specification: In contrast to traditional programming against a specification, ML-based functions are produced by ML algorithms using a dataset, which is not a complete specification. The lack of a complete specification poses an obstacle to the application of verification and validation methods that require such a specification.

- Lack of interpretability: High-performance ML approaches such as deep learning represent functionality in a way that cannot be directly interpreted by humans the way program code can. This non-interpretability poses an obstacle to verification and validation methods that rely on interpretability, such as inspections and walkthroughs.

Adaptation and Extension of ISO 26262 for ML-based Functions

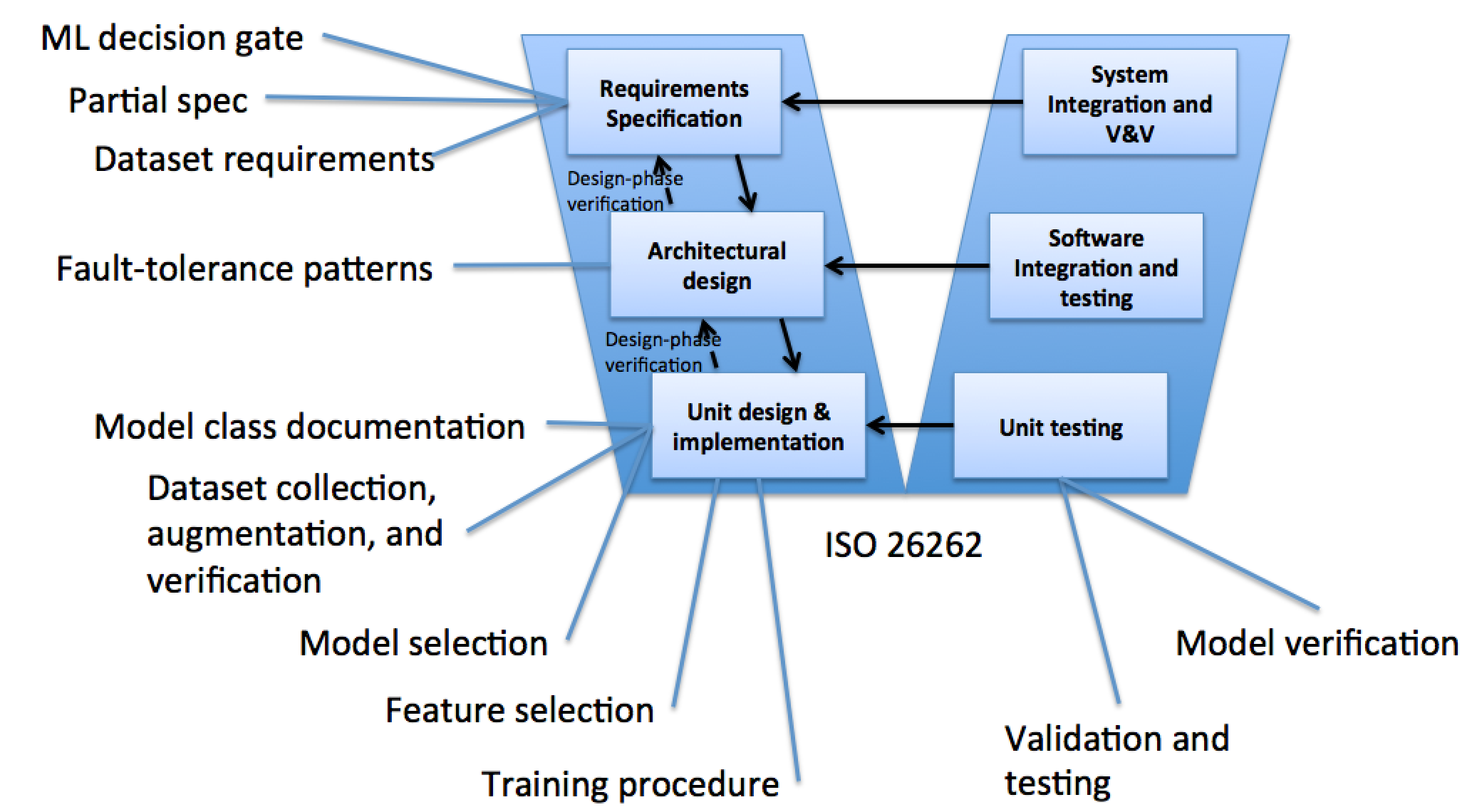

Figure 4 Summary of the extensions of ISO 26262 Part 6 for assuring ML-based functions

The following report provides a detailed analysis of the process requirements and methods in ISO 26262 Part 6 for their applicability to ML-based functions, and on that basis, it adapts and extends the standard to address safety assurance of ML-based functions. Figure 4 gives an overview of the new assurance activities related to ML-based functions defined in the report. The report also provides an extensive review of literature on safety assurance of ML-based functions.

Rick Salay and Krzysztof Czarnecki. Using Machine Learning Safely in Automotive Software: An Assessment and Adaption of Software Process Requirements in ISO 26262. WISE Lab Technical Report, 2018, arXiv:1808.01614 [cs.LG]

A short version of the analysis part of this work appeared earlier as a conference paper:

Rick Salay, Rodrigo Queiroz, and Krzysztof Czarnecki. An Analysis of ISO 26262: Machine Learning and Safety in Automotive Software. SAE Technical Paper 2018-01-1075, 2018, DOI: 10.4271/2018-01-1075 (earlier version arXiv:1709.02435 [cs.AI])

Managing Perceptual Uncertainty for Safe Automated Driving

Automated driving relies on perception to establish facts about the real world with acceptable perceptual uncertainty, as required for the dynamic driving task. Thus, perceptual uncertainty is a key performance measure used to define safety requirements on perception. This measure must be estimated and controlled.
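To make "estimating" uncertainty concrete, the following minimal sketch computes one widely used proxy, the entropy of a classifier's averaged class probabilities over several predictions (for example, ensemble members or stochastic forward passes). This is an assumption for illustration only: it is not the measure or framework defined in the paper cited below, and the function names `mean_prediction` and `predictive_entropy` are hypothetical.

```python
import math
from typing import List, Sequence


def mean_prediction(samples: Sequence[Sequence[float]]) -> List[float]:
    """Average class probabilities over several predictions of one input."""
    if not samples:
        raise ValueError("at least one prediction is required")
    n = len(samples)
    # zip(*samples) groups the probabilities of each class across predictions.
    return [sum(column) / n for column in zip(*samples)]


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in nats; 0 means the prediction is fully confident."""
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0, abs_tol=1e-6):
        raise ValueError("probs must be a valid probability distribution")
    # Terms with p == 0 contribute nothing and are skipped to avoid log(0).
    return -sum(p * math.log(p) for p in probs if p > 0)


if __name__ == "__main__":
    # Hypothetical outputs of two ensemble members for classes (vehicle, background).
    members = [[0.9, 0.1], [0.6, 0.4]]
    averaged = mean_prediction(members)
    print(averaged, predictive_entropy(averaged))
```

A higher entropy indicates that the predictions are less decisive for that input. A threshold on such a score would be one possible way to flag perception outputs that need mitigation, though choosing and validating that threshold is outside the scope of this sketch.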

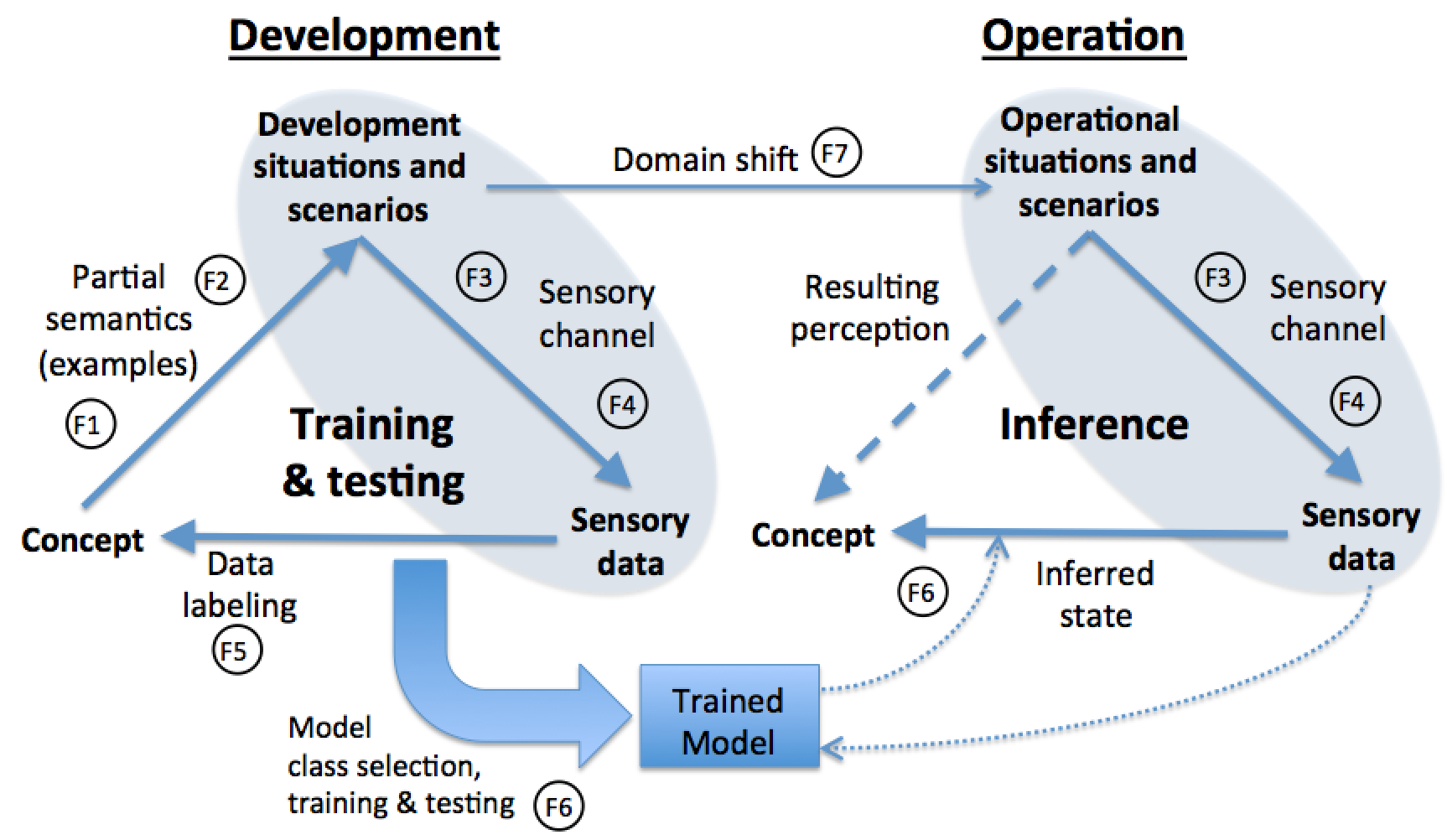

Figure 5 Overview of factors influencing perceptual uncertainty when using supervised machine learning

The following paper identifies seven factors influencing perceptual uncertainty (see Figure 5, F1-F7) when using supervised machine learning. This work represents a first step towards a framework for measuring and controlling the effects of these factors and supplying evidence to support claims about perceptual uncertainty.

Krzysztof Czarnecki and Rick Salay. Towards a Framework to Manage Perceptual Uncertainty for Safe Automated Driving. In Proceedings of International Workshop on Artificial Intelligence Safety Engineering (WAISE), Västerås, Sweden, 2018

We are looking for postdocs and graduate students interested in working on all aspects of autonomous driving.

For more information, visit Open positions.