Live virtual workshops

The workshops will be held online, with live presentations and interactive sessions spread over two half days:

- July 19: 11:30 AM to 3:30 PM (CEST) | 5:30 AM to 9:30 AM (EDT)

- July 22: 11:30 AM to 3:30 PM (CEST) | 5:30 AM to 9:30 AM (EDT)

- See below for the detailed program.

- Link to Humanoids 2020 registration page.

Objectives

Robots will continue to permeate our daily lives in the coming years, making human-robot interaction (HRI) a crucial research topic. Robots will find themselves interacting with humans in a variety of situations, such as manufacturing, disaster recovery, household and health care settings. Many of these situations will require the robot to come into direct contact with humans, resulting in very close physical HRI (pHRI) scenarios. To make humans feel comfortable with the interaction, robots need to act not only in a reliable and safe way, but also in a socially and psychologically acceptable one. Current pHRI research largely focuses on interacting with the human through an object or on passively waiting for the human to initiate the interaction. Social HRI (sHRI), on the other hand, has so far mainly been concerned with HRI at a distance, through speech and gestures.

The objectives of this workshop are to:

- bring together the pHRI and sHRI research communities, and

- generate discussions consolidating these two fields, for instance on the social and ethical implications of physical contact in HRI, as well as the use of non-verbal communication through gestures, robot design, appearance or control

What would you like to hear in this workshop? Send us your question(s) for the panel discussion that will be held on July 22!

Topics of interest

- Humanoid robots

- Wearable robots, exoskeletons

- Physical human-robot interaction

- Social human-robot interaction

- Non-verbal communication

- Emotional body language

- Ethics

- Safety

- Physical and social aspects of human-human collaboration

- Sensor technology for physical-social human-robot interaction

- Controllers for physical-social human-robot interaction

Expected attendance

We expect to attract an audience from the fields of physical and social HRI research, including industrial and academic participants, users and developers of robots that work closely with people.

Organizers

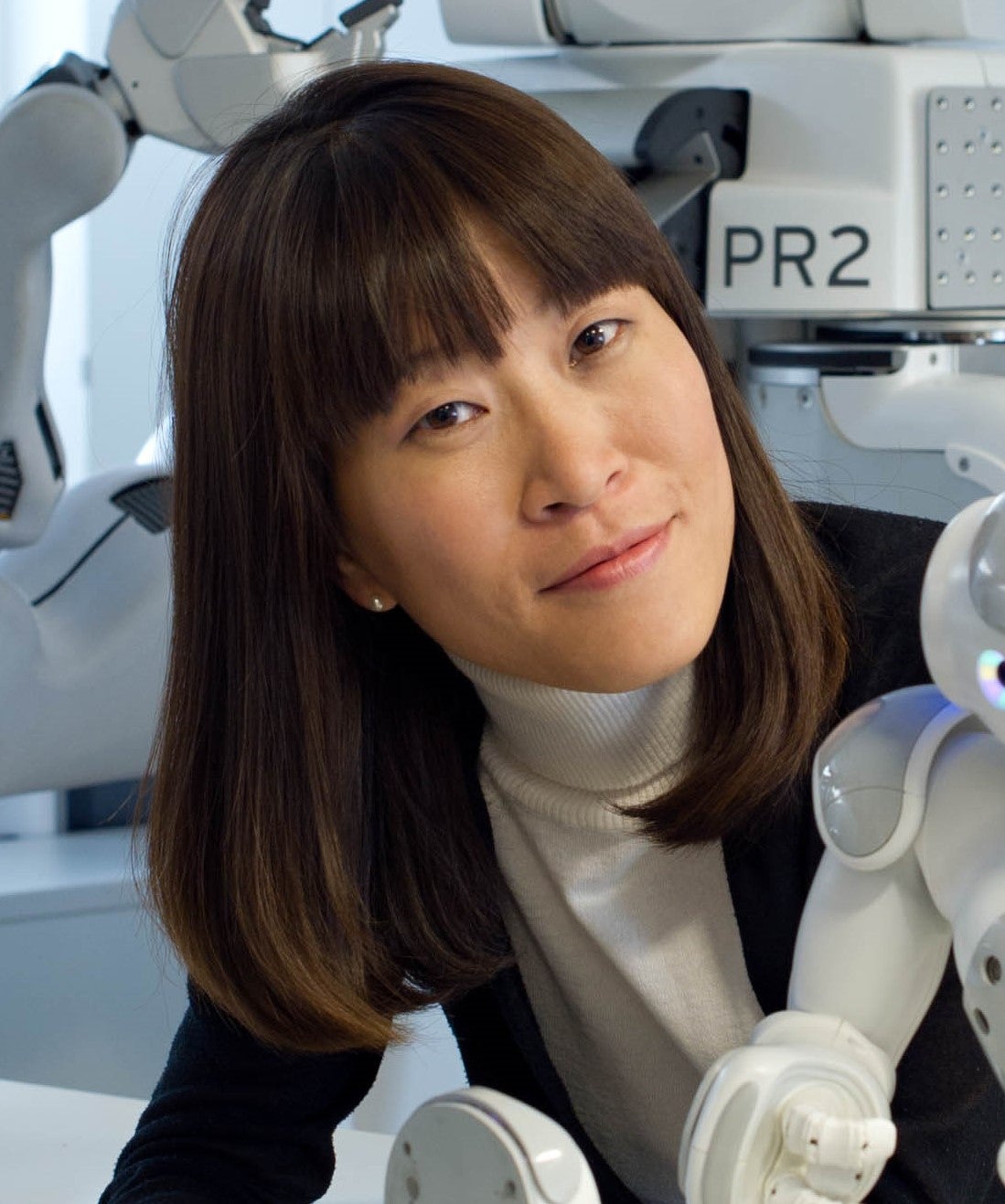

Dr. Marie Charbonneau


Post-doctoral fellow in the Human-Centred Robotics and Machine Intelligence lab, University of Waterloo

Email: marie.charbonneau@uwaterloo.ca

Dr. Francisco Andrade Chavez


Post-doctoral fellow and lab manager in the Human-Centred Robotics and Machine Intelligence lab, University of Waterloo

Email: fandrade@uwaterloo.ca

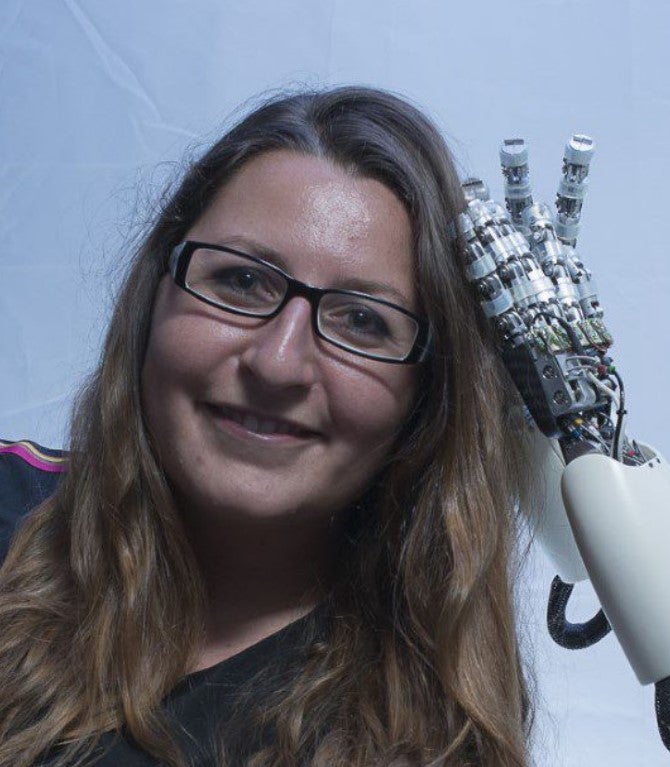

Professor and Chair Katja Mombaur


Canada Excellence Research Chair in Human-Centred Robotics and Machine Intelligence, University of Waterloo

Email: katja.mombaur@uwaterloo.ca

List of speakers

Istituto Italiano di Tecnologia

Socio-physical Interaction Skills for Human-plus-Robot Manufacturing Systems.

This talk will introduce new core robotic technologies for cooperative human-robot systems, with the objectives of achieving reconfigurable and resource-efficient production and improving human comfort in automation. To this end, I will give an overview of the human anticipatory models and reactive robot controllers developed in the HRI2 laboratory of the Italian Institute of Technology.

Technical University of Munich

Whole-body multi-contacts control of humanoid robots during interactions

In this talk, I will present whole-body multi-contact control of humanoid robots during interactions. I will demonstrate the effectiveness of our approaches on humanoid robots covered with an artificial multi-modal robot skin similar to human skin.

University of Waterloo

The physical and the social aspects of HRI - Two sides of the same Coin?!

My talk will draw on my experience in human-robot interaction experiments that often included both social and physical interaction. I will briefly describe a few example projects and associated challenges.

PAL Robotics

Developments in HRI to advance humanoid robots.

Improving human-robot interaction is essential to enable robots to help more and more in our daily lives, for example at home, in healthcare and in industry. For interactions in such settings to succeed, robots must collaborate with humans, which requires improvements in multiple areas, including but not limited to AI, natural language processing and manipulation. Robots should be able to predict the behaviour of humans in such settings in order to plan their own behaviour. Beyond predicting human behaviour, other elements are essential, such as safe interaction with humans. In addition, environments such as homes, healthcare facilities and even industrial sites are dynamic and unpredictable.

This presentation will cover PAL Robotics' latest developments in humanoid robots, including software advancements that enable stronger HRI on robots such as TALOS, TIAGo, REEM-C and ARI, as well as an introduction to Kangaroo, the latest biped robot we are working on. In addition, we will talk about how we are pushing HRI forward together with partners in our collaborative projects with humanoid robots, for use cases such as agri-food and ambient assisted living.

Czech Technical University in Prague

Whole-body awareness for safe and natural HRI: from humans to humanoids.

During physical human-robot interaction, safety can in principle be achieved in two ways. First, in the so-called power and force limiting regime, safety is achieved by limiting the impact forces a moving robot can exert on the human collaborator. I will show our recent work on collaborative manipulators, where we introduce a data-driven methodology to learn a 3D collision-force map which, unlike the existing ISO standards, importantly considers the robot's position in the workspace. Second, safety can be ensured through speed and separation monitoring. I will illustrate how combining both regimes can further boost productivity. Finally, I will show how robots with artificial electronic skins and awareness of the space around them (peripersonal space / interpersonal social zones) can further improve performance and, importantly, acceptance by humans.

Tokyo University of Agriculture and Technology

Interact with me: active physical HRI.

Two research directions have been trying to break down the barrier between humans and robots, physically (pHRI) and socially (sHRI), yet they have evolved with few intersections. But for robots to truly coexist and collaborate with humans, both physical and social interactions must be taken into account. We define active physical human-robot interaction (active pHRI) as a type of interaction during which the robot should be able to achieve tasks optimally, efficiently and safely, while at the same time taking into account the perception of human users. In this talk, I will illustrate the experiments we performed toward a fundamental step in achieving active pHRI: understanding the relationships between humans' perceptions and their measurable data when a robot takes direct, active physical actions on them, with insights on outcomes, applications and developments.

Osaka University

Studies on proximity HRI.

One direction in research on robots that interact with humans is the development of robots that interact with humans directly.

In this talk, we will discuss the possibility of robots that can directly interact with humans.

INRIA

Collaborating with humanoids: mixing social and physical interaction.

When humanoids collaborate with humans to help them with some tasks, it is critical to ensure that the physical interaction between the two partners is safe. This requirement is the most important objective for the whole-body control of the robot, which must appropriately control the physical interaction forces so as not to compromise the equilibrium of either partner and, possibly, to minimize their efforts.

However, physical interaction is only one type of nonverbal interaction; the robot should at least consider the effect of other communication channels typically studied in social interaction.

In this talk, I will present some of our experimental studies that involve humans interacting physically and socially with humanoid robots. I will discuss our findings regarding verbal and non-verbal communication during physical contact, trust in the humanoid's decisions, intention prediction, and how we are using some of these findings to improve humanoid control.

Technical University of Munich

Effects of biomimetic Robot Light Touch Haptic Feedback on Human Body Sway Dynamics.

(Abstract to come)

Dongheui Lee is Associate Professor at the Department of Electrical and Computer Engineering, Technical University of Munich (TUM). She also leads the Human-centered assistive robotics group at the German Aerospace Center (DLR). Her research interests include human motion understanding, human-robot interaction, machine learning in robotics, and assistive robotics. She obtained her B.S. and M.S. degrees in mechanical engineering at Kyung Hee University, Korea, and a PhD degree from the Department of Mechano-Informatics, University of Tokyo, Japan, in 2007. She was a research scientist at the Korea Institute of Science and Technology (KIST) and a Project Assistant Professor at the University of Tokyo before joining TUM as a professor. She was awarded a Carl von Linde Fellowship at the TUM Institute for Advanced Study (2011) and a Helmholtz professorship prize (2015).

Technical University of Munich

Haptic Control Sharing Based on Game-Theoretical Concepts

One of the main challenges in HRI is to enable robots to be collaborative partners or assistants to humans. To achieve this, an understanding between the partners needs to be established so that task-relevant actions are shared appropriately. This is a major challenge in human-robot interaction, as it requires modelling human decision-making behaviour and sharing control between collaborative partners in a task-relevant, human-centric way. Game theory is a promising mathematical framework because it enables the design of robot controllers that understand the behavior of the human and react optimally. In this talk, a game-theoretic concept for shared control design is introduced, with a focus on haptic human-robot interaction.

Monash University

Robots in Public Space: A human-centered, interdisciplinary methodology

Dr Leimin Tian is a research fellow at the Human-Robot Interaction group in the Faculty of Engineering, Monash University. Her research focuses on developing interactive systems that incorporate social intelligence, such as emotion-aware human-robot interaction. She also works on human-centered AI in the healthcare context, such as using multimodal behavioral analytics technology to support the diagnosis of neurological conditions.

University of Sherbrooke

Touch interpretation and multi-modal attention recognition during kinesthetic manipulation of a humanoid robot.

A large portion of human-human communication is implicit and intuitive, learned over years of social interaction, with layers that go beyond speech. If robots are to work alongside humans in a truly collaborative manner, they must be able to understand this implicit communication as well as their human partners' intentions and states. My talk will highlight our recent work on interpreting touch semantics during kinesthetic manipulation of a humanoid robot through the two-touch kinematic chain paradigm, a method for generating intuitive touch semantics for manipulation that is applicable to non-backdrivable, position-controlled robots. Furthermore, I will discuss our ongoing work on multi-modal attention and intention recognition for ensuring safe kinesthetic manipulation, combining tactile sensors, 3D human pose and face gaze.

- Steve N'Guyen, Pollen Robotics: Reachy: open source interactive humanoid platform to explore real world applications

- Claudia Latella, Istituto Italiano di Tecnologia: Human wearable technologies for agent-robot perception framework.

Talks by organizers:

- Katja Mombaur: Challenges and applications of close physical-social human-robot interaction

- Marie Charbonneau: Physical HRI with the REEM-C

Workshop schedule

| CEST | EDT | Monday, July 19 | Thursday, July 22 |
|---|---|---|---|
| 11:30 AM | 5:30 AM | Intro by the organizers | Arash Ajoudani |
| 11:40 AM | 5:40 AM | Yue Hu | |
| 11:50 AM | 5:50 AM | Hiroshi Ishiguro | |
| 12:00 PM | 6:00 AM | Selma Music | |
| 12:10 PM | 6:10 AM | Dongheui Lee | |
| 12:20 PM | 6:20 AM | Matej Hoffmann | |
| 12:30 PM | 6:30 AM | Claudia Latella | |
| 12:40 PM | 6:40 AM | Francesco Ferro | |
| 12:50 PM | 6:50 AM | Katja Mombaur | |
| | | BREAK | |
| 1:50 PM | 7:50 AM | Gordon Cheng | Leimin Tian |
| 2:00 PM | 8:00 AM | | |
| 2:10 PM | 8:10 AM | Serena Ivaldi | Steve N'Guyen |
| 2:20 PM | 8:20 AM | | |
| 2:30 PM | 8:30 AM | Kerstin Dautenhahn | Marie Charbonneau |
| 2:40 PM | 8:40 AM | | Set-up for panel discussion |
| 2:45 PM | 8:45 AM | | Panel discussion |
| 2:50 PM | 8:50 AM | Christopher Yee Wong | |
| 3:00 PM | 9:00 AM | | |
| 3:10 PM | 9:10 AM | | |
| 3:20 PM | 9:20 AM | | |

Contact us if you are having trouble accessing one of our sessions.