Screening tool uses AI to weed out fake news

Motivated by the spread of online disinformation, Waterloo Engineering researchers develop new system to help separate fact from fiction

By Brian Caldwell
Faculty of Engineering

Researchers at Waterloo Engineering have developed a new artificial intelligence (AI) tool that could help social media networks and news organizations weed out false stories.

Motivated by the proliferation of disinformation online, the system uses deep-learning AI algorithms to determine if claims made in posts or stories are supported by other posts and stories on the same subject.

“If they are, great, it’s probably a real story,” says Alexander Wong, a professor of systems design engineering. “But if most of the other material isn’t supportive, it’s a strong indication you’re dealing with fake news.”
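The decision rule Wong describes can be sketched in a few lines of Python. This is only an illustration, not the researchers' code: the function name `assess_claim`, the labels `"support"` and `"not_support"`, and the 50 per cent threshold are all assumptions made for the example.

```python
from collections import Counter

def assess_claim(stances, threshold=0.5):
    """Summarize the stances of related stories toward one claim.

    `stances` holds one hypothetical label per related story, either
    "support" or "not_support". The threshold is an assumed cut-off.
    """
    if not stances:
        return "unknown"  # nothing to compare the claim against
    counts = Counter(stances)
    support_share = counts["support"] / len(stances)
    # Mostly supportive material suggests a real story; otherwise the
    # claim is flagged so a human fact-checker can take a look.
    return "likely real" if support_share > threshold else "flag for review"

print(assess_claim(["support", "support", "not_support"]))
print(assess_claim(["not_support", "not_support", "support"]))
```

In this sketch the output is a flag for a human reviewer, not a verdict.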

Chris Dulhanty, a master’s student who led the project, describes online material fabricated to deceive or mislead readers, typically for political or economic gain, as a “deep social problem” that urgently requires a solution.

“We need to empower journalists to uncover the truth and keep us informed,” he says. “This represents one effort in a larger body of work to mitigate the spread of disinformation.”

The new tool advances efforts to develop fully automated technology capable of detecting fake news by achieving 90 per cent accuracy in a key area of research known as stance detection.

Given a claim in one post or story, along with other posts and stories on the same subject collected for comparison, the system correctly determines whether they support the claim nine times out of 10.
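To make the accuracy figure concrete, here is a minimal sketch of how such a score is computed: the share of predictions that match the known answers. The labels and the 10 sample results are invented for illustration and are not taken from the Waterloo study.

```python
def accuracy(predicted, actual):
    """Fraction of predictions that match the known labels."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

# Hypothetical ground truth for 10 claim-story pairs.
actual = ["support"] * 6 + ["not_support"] * 4
# Hypothetical predictions: identical except for one mistake.
predicted = list(actual)
predicted[0] = "not_support"

print(accuracy(predicted, actual))  # 9 correct out of 10
```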

That sets a new accuracy benchmark among researchers using a large dataset created for the Fake News Challenge, a 2017 scientific competition.

While scientists around the world continue to work towards a fully automated system, the Waterloo technology could be used as a screening tool by human fact-checkers at social media and news organizations.

“It augments their capabilities and flags information that doesn’t look quite right for verification,” says Wong, a founding member of the Waterloo Artificial Intelligence Institute (Waterloo.ai). “It isn’t designed to replace people, but to help them fact-check faster and more reliably.”

AI algorithms at the heart of the system were shown tens of thousands of claims paired with stories that either supported or didn’t support them. Over time, the system learned to determine support or non-support itself when shown new claim-story pairs.
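The idea of learning from labelled claim-story pairs can be illustrated with a toy example. The sketch below is not the deep-learning model the researchers built. It swaps in a far cruder technique, word overlap with a learned cut-off, only to show the "learn from examples, then judge new pairs" pattern. All function names and training sentences are hypothetical.

```python
def overlap(claim, story):
    """Share of the claim's words that also appear in the story."""
    claim_words = set(claim.lower().split())
    story_words = set(story.lower().split())
    return len(claim_words & story_words) / len(claim_words) if claim_words else 0.0

def fit_threshold(pairs):
    """Pick the overlap cut-off that gets the most training pairs right."""
    scored = [(overlap(claim, story), label) for claim, story, label in pairs]
    candidates = sorted({score for score, _ in scored})

    def n_correct(cutoff):
        return sum((score >= cutoff) == label for score, label in scored)

    return max(candidates, key=n_correct)

def predict(claim, story, threshold):
    """True means the story is judged to support the claim."""
    return overlap(claim, story) >= threshold

# Invented training pairs: (claim, story, story supports claim?)
training_pairs = [
    ("city opens new bridge", "the city opened its new bridge today", True),
    ("city opens new bridge", "officials deny any bridge plans", False),
    ("mayor wins award", "the mayor wins a national award", True),
    ("mayor wins award", "no award was given this year", False),
]

threshold = fit_threshold(training_pairs)
print(threshold)
print(predict("school wins prize", "the school wins a prize", threshold))
print(predict("school wins prize", "the prize went elsewhere", threshold))
```

Word overlap cannot handle negation or paraphrase, which is one reason real stance-detection systems rely on much richer language models.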

In the next phase of the project, researchers will work to understand which features or elements of stories the AI system focuses on to make decisions. That information would boost confidence in its results and provide insights to increase its accuracy.

Jason Deglint and Ibrahim Ben Daya, both systems design engineering PhD students, collaborated with Dulhanty and Wong, a Canada Research Chair in Artificial Intelligence and Medical Imaging.

A paper on their work, “Taking a Stance on Fake News: Towards Automatic Disinformation Assessment via Deep Bidirectional Transformer Language Models for Stance Detection,” was presented this month at the Conference on Neural Information Processing Systems in Vancouver.

University of Waterloo researchers Olga Ibragimova (left) and Dr. Chrystopher Nehaniv found that symmetry is the key to composing great melodies. (Amanda Brown/University of Waterloo)

Read more

University of Waterloo researchers uncover the hidden mathematical equations in musical melodies

Read more

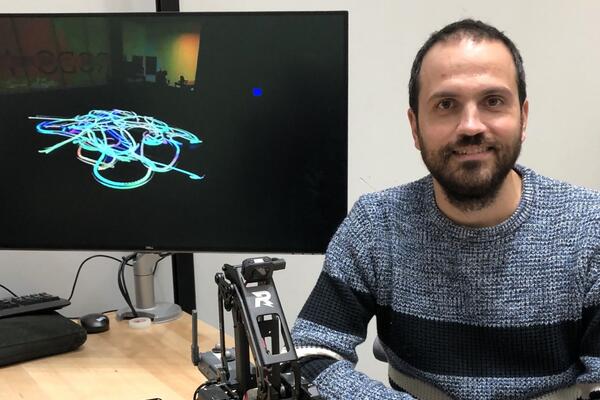

Robots the size of a soccer ball create new visual art by trailing light that represents the “emotional essence” of music

Read more

Waterloo prof leads a team of researchers to improve water quality through a community-focused approach underpinned by technical excellence