Humans easily recognize and identify actions in video but automating this procedure is challenging. Human action recognition in video is of interest for applications such as automated surveillance, elderly behavior monitoring, human-computer interaction, content-based video retrieval, and video summarization [1]. In monitoring the activities of daily living of elderly, for example, the recognition of atomic actions such as ”walking”, ”bending”, and ”falling” by itself is essential for activity analysis [2].

So far, we have been mainly focused on improvement of different components of a standarad discriminative bottom-up framework (such as widely used Bag-of-Words approach (Fig. 1)) for action recognition in video. We have three main contributions on local salient motion feature detection, action representation, and action classification. Fo references on our improvements on robust salient feature detection refer to [1], [2], [3],[4]. Our contributions on more descriptive action representation and better classification are under review and will appear here as they are publishing. Stay tuned ;)

Fig.1: Standard Bag-of-Words framework for human action recognition. This section focuses

on how to improve the detection of spatio-temporal salient features.

Improved Spatio-temporal Salient Feature Detection for Action Recognition

Existing salient feature (or key point) detectors use a non-causal symmetric temporal filter such as Gabor. In contrast, the biological vision and the recent publications are in favor of efficient temporal filtering using asymmetric filters. We have thus designed three causal and asymmetric temporal filters of asymmetric sinc, Poisson, and truncated exponential [1]. The complex form of these multi-resolution filters are biologically consistent with the human visual system to obtain a phase-insensitive and contrast-polarity insensitve motion map[2]. Figure (1) shows our salient feature detection framework using the introduced complex temporal filters.

Here, we provide some results for the motion map (R) and feature detection using our novel asymmetric sinc filtering. For more details, please refer to [1].

Multi-resolution motion map:

To detect local video events with different spatial an temporal scales, the motion map should be computed at multiple resolutions. This video clip shows the motion map at nine different spatio-temporal scales (\sigma, \tau). The top-left shows the motion map at the finest scale of (2,2) and the bottom-right shows the motion map at the coarsest scale of (4,4).

Fig. 2: Motion maps at nine different spatio-temporal resolutions.

Spatio-temporal salient features:

This video clip shows the salient features detected at nine different spatio-temporal scales (\sigma, \tau). The corresposning fetaures are highlighted by red box on the original video. Below to each video, the local volume representing the salient features are on and the rest of non-salient regions are off. The top-left shows the results at the finest scale of (2,2) and the bottom-right shows the results at the coarsest scale of (4,4).

Fig. 3: Salient fetaures detected at nine different spatio-temporal resolutions. The symmetric Gabor filter and our three asymmetric temporal filters are tested comprehensively under three scenarios: precision of the detected salient features, reproducibility under geometric deformations, and human action classification. For more details, please refer to [1].

1- Precision and robustness tests

Precision requires salient features to be from the foreground. Robustness requires re-detection of the same fetaures under different geometric deformation such as a view/scale change or affine tranformation. As Table (1) shows the asymmetric temporal filtering has better performance than symmetric Gabor filtering for both the precision teset and the robustness tests. Among asymmetric filters, our novel asymmetric sinc performs the best.

| Temporal Filters | |||||

|---|---|---|---|---|---|

| Test | Type | Gabor | Trunc. exp. | Poisson | Asym. sinc |

| Precision | classification data | 64.8% | 78% | 74.7% | 79.6% |

| robustness data | 91.9% | 94.7% | 93.6% | 96.1% | |

| Reproducibility | rotation change | 55.5% | 71.3% | 73% | 73.4% |

| view change | 86.6% | 88% | 87.8% | 93.3% | |

| shearing change | 84.1% | 88.4% | 86.8% | 91.8% | |

| scale change | 50.7% | 47.5% | 47.1% | 45.2% | |

Table 1: Experimental results showing better performance of asymmetric sinc filtering.

2- Action classification

To evaluate the quality of the features detected using different temporal filters, we used the baseline discriminative bag-of-words classification framework for action recognition. Our experiments on three different benchmark datasets of the Weizmann, the KTH, and the University of Central Florida (UCF) sports dataset show that (A) salient features detected using asymmetric filters perform better than those detected using a symmetric Gabor filter. (B) The salient features detected using our novel asymmetric sinc filter provide the highest classification accuracy on all datasets [1].

| Temporal filters | |||||

|---|---|---|---|---|---|

| Test | Type | Gabor | Trunc. exp. | Poisson | Asym. sinc |

| Classification | Weizmann | 91.7% | 93.1% | 94.6% | 95.5% |

| KTH | 89.5% | 90.8% | 92.4% | 93.3% | |

| UCF sports | 93.3% | 76% | 82.5% | 91.5% | |

Table 2: Higher average classification accuracy is obtained using salient features detected by a complex, asymmetric sinc filtering.

Comparison of structured-based and motion-based salient features:

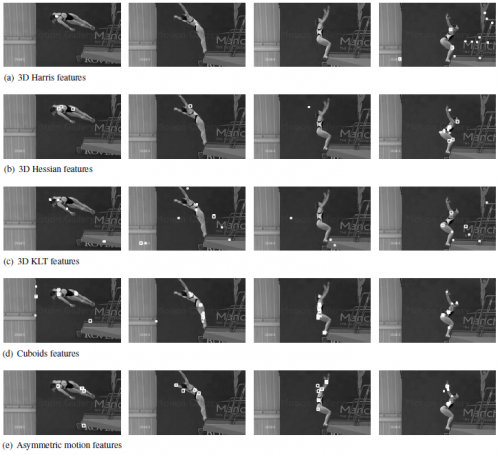

We have recently compared the structured-based spatio-temporal salient features (3D Harris, 3D Hessian, 3D KLT) with the motion-based salient features detected using the best asymmetric temporal filter (i.e., asymmetric sinc filter) and the symmetric Gabor filters. This evaluation is performed in a common BOW framwork on several benchmark human action recognition including: KTH, UCF sports under two different protocols of nine/ten categories, and the challenging Hollywood Human Actions (HOHA I) datasets. As Table 3 shows, in all three datasets, the motion-based features provide higher classification accuracy than the structured-based features.

More specifically, among all of these sparse feature detectors, the asymmetric motion features perform the best as they capture a wide range of motions from asymmetric to symmetric. With much less computation time and memory usage, these sparse features provide higher classification accuracy than the dense sampling as well.

| Dataset | Structure-based features | Motion-based features | ||||

|---|---|---|---|---|---|---|

| 3D Harris | 3D Hessian | 3D KLT | Cuboids | Asymmetric | ||

| KTH | 63.5% | 67.5% | 68.2% | 89.5% | 93.7% | |

| UFC sports | 9 classes | 72.8% | 70.6% | 72.6% | 73.3% | 91.7% |

| 10 classes | 73.9% | 70.2% | 72.5% | 76.7% | 92.3% | |

| HOHA | 58.1% | 57.3% | 58.9% | 60.5% | 62% | |

Table 3: Motion-based salient features are more informative in encoding human actions than structured- bsed features.

Fig. 4: 2D projection of different spatio-temporal salient features on sample frames from diving action from UCF sports dataset. Among all the detectors, the asymmetric motion features are more localized on the moving body limbs with much less false positives from the background.

Fig. 4: 2D projection of different spatio-temporal salient features on sample frames from diving action from UCF sports dataset. Among all the detectors, the asymmetric motion features are more localized on the moving body limbs with much less false positives from the background.

Comparison with the state-of-the-art methods:

Table 4 presents the classification rate of using asymmetric motion features and other published methods on three different datasets. As can be seen, the asymmetric motion features provide the highest accuracy on both the UCF and HOHA datasets. On the KTH dataset, our 93:7% accuracy is comparable with 94:2% [29] accuracy obtained using joint dense trajectories and dense sampling which require much more computation time and memory compared to our sparse features. In a comparable setting with Wang et. al [BMVC 2009], the asymmetric motion features perform better than other salient features and dense sampling. This is in contrast to the previous observations about better performance of dense sampling/trajectories, showing the importance of effective temporal filtering for robust and quality salient feature detection.

| Method | KTH | UCF sports | HOHA | |

|---|---|---|---|---|

| 9 classes | 10 classes | |||

| Schuldt et al. (ICPR 2004) | 71.7% | - | - | - |

| Laptev et al. (CVPR 2008) | - | - | - | 38.4% |

| Rodriguez et al. (CVPR 2008) | 86.7% | 69.2% | - | - |

| Willems et al. (ECCV 2008) | 88.3% | - | 85.60% | - |

| Wang et al. (BMVC 2009) | 92.1% | - | 85.60% | - |

| Rapantzikos (CVPR 2009) | 88.3% | - | - | 33.6% |

| Sun et al. (CVPR 2009) | - | - | - | 47.1% |

| Zhang et al. (PR 2011) | - | - | - | 30.5% |

| Shabani et al. (BMVC 2011) | 93.3% | 91.5% | - | - |

| Wang et al. (CVPR 2011) | 94.2% | - | 88.2% | - |

| Asymmetric motion features | 93.7% | 91.7% | 92.3% | 62% |

Table 4: Asymmetric motion features can better encode the human actions and hence, they provide the highest classification accuracy. These features compete with computationally-expensive dense sampling and dense trajectories.

CONCLUSION

We introduced three asymmetric temporal filters for motion-based feature detection which provide more precise and more robust salient features than the widely used symmetric Gabor filter. Moreover, these features provide higher classification accuracy than symmetric motion features in a standard base-line discriminative framework. We also compared different salient structured-based and motion-based feature detectors in a common discriminative framework for action classification. Based on our experimental results, we recommend the use of asymmetric motion filtering for effective salient feature detection, sparse video content representation, and consequently, action classification.

References

-

Shabani, A. H., D. A. Clausi, and J. S. Zelek, "Improved Spatio-temporal Salient Feature Detection for Action Recognition", British Machine Vision Conference, University of Dundee, Dundee, UK, August, 2011. Details

-

Shabani, A. H., J. S. Zelek, and D. A. Clausi, "Robust Local Video Event Detection for Action Recognition", Advances in Neural Information Processing Systems (NIPS), Machine Learning for Assistive Technology Workshop, Whistler, Canada, December, 2010. Details

-

Shabani, A. H., J. S. Zelek, and D. A. Clausi, "Human action recognition using salient opponent-based motion features",7th Canadian Conference on Computer and Robotic Vision, Ottawa, Ontario, Canada, pp. 362 - 369, March, 2010. Details

-

Shabani, A. H., D. A. Clausi, and J. S. Zelek, "Towards a robust spatio-temporal interest point detection for human action recognition", IEEE Canadian Conference on Computer and Robot Vision, Kelowna, BC, Canada, Kelowna, British Columbia, Canada, pp. 237-243, February, 2009. Details

Related people

Directors

Alexander Wong, David A. Clausi