We are inviting undergraduate students to participate in an Early Undergraduate Research Experience program (EREP/UR2PHD) for Fall 2025. The goal of this program is to help ease undergraduate students with no prior research experience into their first research experience, preparing them to seek out URA opportunities afterwards.

In this program, undergraduate students will

- work in teams (2-4 students) on a research project with a graduate student mentor

- attend a mandatory, virtual and synchronous research methods course, taught by the Computing Research Association (CRA), 2 hours per week over 12 weeks

- attend a mandatory in-person weekly research meetup (on Fridays 12:30pm-1:20pm) with all undergraduate students and graduate mentors in the program, 1 hour per week over 12 weeks

CRA’s research methods course will cover the foundations of research with the goal of supporting students in completing their pre-identified research project. Participants will develop and apply practical research skills, like reading and interpreting research papers, conducting literature reviews, and analyzing and presenting data. Students will hone their research comprehension and communication skills, develop an understanding of research ethics, and build their confidence in their identity as a researcher. All concepts and skills will be taught in the context of students’ projects. The course will also provide participants with an opportunity to foster a peer network of support.

Upon successful completion of the CRA course and other program requirements, students will receive a certificate of completion from CRA, as well as CS399 course credit (graded based on their performance in the CRA course and in research). Please note that CS399 is just like any other course, i.e., students are responsible for paying tuition for it.

Eligibility Criteria

- Major: Must be enrolled in a Computer Science (CS) program.

- Academic Level: Must have reached or surpassed the 2A academic term.

- Term: Must be enrolled in a study term (not a co-op term) in Fall 2025.

Note: Only students who meet all of the above conditions will be considered eligible.

Course Schedule

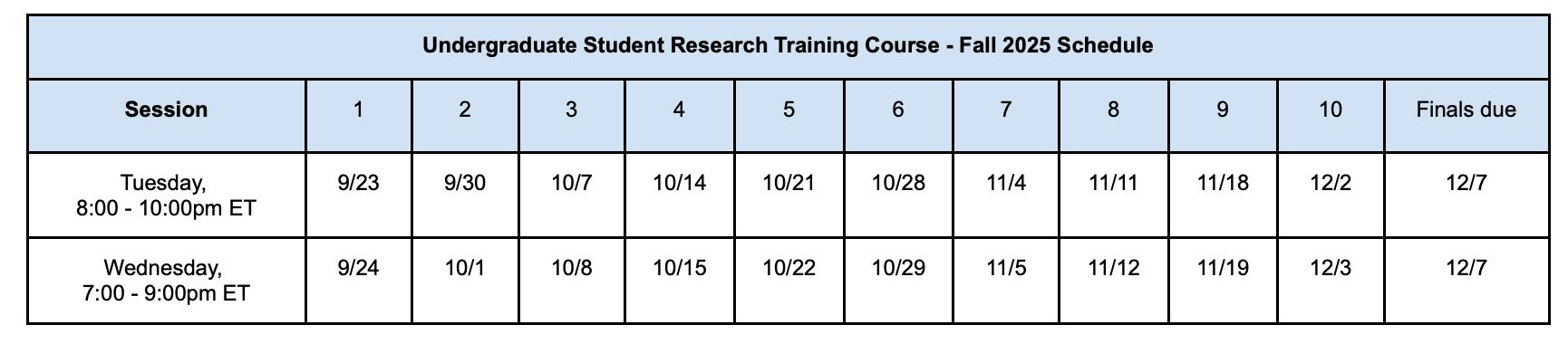

The course is virtual and will start on September 23, 2025. You will be asked to select which session (Tuesdays or Wednesdays) to attend.

Research Projects

Human-Computer Interaction

Shanza Shanza (Dr Urs Hengartner and Leah Kennedy)

A-1-1

ChatSec: Exploring the Use of AI Chatbots for Personalized Privacy and Security Advice.

To explore how individuals engage with AI chatbots to seek advice on digital privacy and security, and assess the effectiveness, trustworthiness, and cultural relevance of chatbot-delivered guidance.

A-1-2

PrivAware: Designing a Privacy and Security Learning App for Older Adults in Canada

To design, build, and evaluate a culturally relevant, accessible mobile application that educates older adults in Canada on essential digital privacy and cybersecurity practices through simple, scenario-based learning.

A-1-3

Margins of Safety: Exploring Cross-Cultural Privacy and Security Practices Among Low-SES Communities in Canada

To examine how cultural background, education level, and economic constraints shape the privacy and digital security practices of less-educated, low-SES individuals in Canada and to inform the design of culturally and economically appropriate digital safety interventions.

Lucas Gomez Tobon (Edith Law)

A-2-1

My research focuses on the future of education, specifically, how we can bring AI into the classroom to augment human intelligence.

This project explores how an agent can simulate the role of a peer, allowing students to practice by teaching the agent. The goal is to design learning scenarios where the agent makes intentional mistakes or asks questions, prompting the student to explain, correct, or guide the agent’s understanding. In turn, the agent can also challenge the student’s reasoning, enabling a two-way feedback loop.

By structuring interactions around this reciprocal process, we aim to study how teaching an agent affects student understanding, engagement, and metacognitive skills. This work contributes to rethinking the role of AI in education, not as a tutor or tool, but as a collaborative learning partner.

A-2-2

This project focuses on personalizing language model behavior by aligning outputs with the values of individual users. While most alignment techniques optimize for general safety or community norms, they often ignore the personal, situational preferences that shape real-world interactions, such as whether a user prefers directness or empathy, creativity or structure. Our aim is to build a system that adapts to these differences through interaction rather than fine-tuning.

We are designing a context model that identifies the user’s intent and retrieves value-aligned prompt suggestions based on that context. These prompts help steer the language model’s response toward values that are relevant to the user’s current goal, for example, encouraging thoroughness in a medical explanation or warmth in emotional support. The system will present these suggestions as options the user can accept, reject, or modify.

Through this lightweight feedback, the model will learn over time to personalize its recommendations using online learning. The long-term goal is to support dynamic, transparent, and user-driven alignment that makes AI systems more adaptable in everyday use.

A-2-3

My research also focuses on the future of work, particularly the role of AI agents within organizations.

In this project, we use both real and simulated email data from within a company to explore how agents can construct a comprehensive model of organizational structure. Each stakeholder and task is mapped using activity theory, allowing the agent to represent roles, objectives, tools, and interactions across the workplace.

Our goal is to develop a methodology that equips agents with enough social context to understand workplace dynamics. This would enable agents to assist employees in navigating organizational norms, social rules, and cultural practices within the environments they inhabit.

Anthony Maocheia-Ricci (Edith Law)

A-3-1

Designing a platform for technological collaboration in art-making:

Disciplines within the creative arts such as theatre, music, and exhibition creation are collaborative by nature. While some technology is already used on the more logistical side of artistic collaboration (planning, communicating remotely, storing files, etc.), the advent of generative AI raises novel ethical and technical questions about technology’s role in other aspects of collaborative art-making. Is technology only a tool, or an equal collaborator in the creation of art? In what ways does technology contribute to the actual art made? What are the disciplinary differences in technology’s role as a collaborator/tool within the arts? In this project, the student will go through a user-centered design process to research how collaboration in the creative arts is currently facilitated, and if/how technology can play a role in these processes. Duties will involve reviewing literature on collaboration within the arts and technology’s role in the creative arts, crafting specific research questions based on that literature, scaffolding a research plan and interview guide, and rapid (visual) prototyping of a technological solution based on existing findings.

A-3-2

Designing a platform for participatory deliberation and decision-making

Technologies for collective decision-making are becoming more and more common in our daily lives, particularly in digital civics and government. In Human-Computer Interaction (HCI) literature, there exists a gap in technology for participatory forms of decision making, where the participants are taking a direct role through voting or deliberation rather than letting a representative speak for them. Examples of these kinds of decision-making processes can range from Citizen's Assemblies to public, collaborative art pieces. Furthermore, this form of deliberation can extend beyond the civic and political space, and can be used in any group looking to generate ideas “bottom-up” (community-led rather than board-led). In this project, the student will go through a user-centered design process to research the current state of technologies for deliberation, novel methods for participatory deliberation, and different spaces where these technologies can be used. Duties will involve reviewing literature on deliberative technologies in HCI, crafting specific research questions based off of said literature, scaffolding a research plan and interview guide, and rapid (visual) prototyping of a technological solution based off of existing findings.

A-3-3

Developing a task-agnostic platform for value-based deliberation

Deliberative processes are commonly used for complex or value-driven problems, where there isn’t a clear answer to the question or issue at hand. Deliberation is more than simple discussion and debate, and is meant to be more careful about respecting everyone’s opinions and values to come to a common ground. Technologies have begun to be used in deliberative processes as a means of facilitating them virtually or introducing novel methods for in-person collaboration. Moreover, there’s interest among facilitators to include more visual or creative ways to enable deliberation where technology can play a role. For complex problems such as value alignment, these creative or “arts-based” methods can be one such avenue to manage differences in opinions. In this project, the student will lead a research-through-design process in the creation of rapid (developed) prototypes of a platform to support value-based deliberative processes for any form of task. Duties will involve reviewing literature on deliberative processes and existing technologies, developing a live tool to support deliberation based on the literature, and rapid iteration based off of pilot studies done among the team.

AI / Machine Learning

Desen Sun (Sihang Liu)

B-1-1

Diffusion acceleration. Diffusion models have recently shown great promise, especially in multi-modal settings such as image, video, and audio generation. However, generating a single item requires a very large amount of computation. Although some studies use caching or sparse computation to accelerate generation, these methods are still not general or scalable enough. This project proposes building a more efficient system that generates images or videos more quickly.

B-1-2

Diffusion security. Although generative models, such as diffusion models, can help users work more efficiently, malicious users may try to leverage these models to generate not-safe-for-work (NSFW) content. These attacks may happen at the inference or training stage. Therefore, this project will classify these attack methods and then develop defenses against them.

B-1-3

Carbon reduction. With the growth of AI-related applications, concerns have been raised about their effect on the environment. The carbon emissions produced by AI models are increasing. Therefore, this project will analyze the carbon emissions of a specific application (e.g., model serving or live video) and reduce them while maintaining service quality.

Ali Falahati (Prof. Lukasz Golab)

B-2-1

This project aims to explore how values and preferences get embedded into generative AI models over time. When large language models (LLMs) are trained and retrained, their training data often shifts from real, human-created content to a mix of synthetic outputs and feedback curated by two main groups: the model owners (such as companies or research labs) and the public (users who give feedback or choose outputs). These two groups often have different priorities—owners might care more about safety and consistency, while the public might value usefulness or creativity.

As part of this project, students will help build a game-theoretical model that captures this dynamic “tug of war” between owners and the public. The goal is to investigate questions like: If both sides keep influencing the training data, do the models eventually settle into a stable set of values, or do conflicts and diversity persist? Students will formalize the problem, run experiments with simulated feedback loops, and analyze how different strategies affect the long-term outcomes.

B-2-2

The goal of this project is to investigate how understanding and manipulating the “inner workings” of LLMs can help create more diverse and useful synthetic data. Mechanistic interpretability is about figuring out what different neurons or components inside an AI model actually do. Recent research shows that certain neurons are responsible for specific concepts, styles, or reasoning patterns in generated text.

The goal of this project is to combine these insights with synthetic data generation. Students will experiment with identifying the most active or important neurons in a pretrained LLM during text generation, then apply small, targeted perturbations (changes) to these neurons at inference time. By comparing the outputs before and after these neuron-level tweaks, students can measure how much these changes affect the diversity, creativity, and usefulness of the generated text.

B-2-3

This project explores new ways to trace and protect text generated by large language models (LLMs) using semantic watermarking techniques. Traditional watermarking often requires access to the internal workings of the AI model and can degrade text quality. In contrast, this project focuses on post hoc (after generation), model-agnostic methods that operate at the sentence level and are easy to use—even with black-box models accessed via APIs.

Tasks include implementing the watermarking pipeline, experimenting with different paraphrasing models, and analyzing how well the watermarks can be detected even after adversarial edits.

Xuliang Wang (Ming Li)

B-3-1

We have developed LLM-based data compressors whose compression ratio is double that of current solutions. Intuitively, the better the LLM predicts the next token, the more compactly we can compress the data. By introducing retrieval-augmented generation (RAG) into our LLM-based compressors, we have a chance to further improve the compression ratios.
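The link between prediction and compression can be made concrete: an ideal entropy coder spends about $-\log_2 p$ bits on a symbol to which the model assigned probability $p$. The sketch below is not the project's compressor. It assumes a hypothetical toy character-level model (adaptive counts with add-one smoothing) as a stand-in for an LLM, and compares its ideal code length with that of a model that predicts every byte uniformly.

```python
import math
from collections import Counter


def ideal_bits_uniform(text: str, alphabet_size: int) -> float:
    """Bits needed when every symbol is predicted with probability 1/alphabet_size."""
    return len(text) * math.log2(alphabet_size)


def ideal_bits_adaptive(text: str, alphabet_size: int) -> float:
    """Bits needed under a toy adaptive model (hypothetical stand-in for an LLM).

    Each symbol is predicted from counts of the symbols seen so far,
    with add-one smoothing; the counts are updated after every symbol.
    """
    counts = Counter()
    seen = 0
    total_bits = 0.0
    for ch in text:
        p = (counts[ch] + 1) / (seen + alphabet_size)
        total_bits += -math.log2(p)  # ideal code length for this symbol
        counts[ch] += 1
        seen += 1
    return total_bits


text = "aaaaaaaabaaaaaaaabaaaaaaaa"
alphabet_size = 256  # assumption: symbols are bytes
uniform = ideal_bits_uniform(text, alphabet_size)
adaptive = ideal_bits_adaptive(text, alphabet_size)
print(f"uniform model:  {uniform:.1f} bits")
print(f"adaptive model: {adaptive:.1f} bits")
```

On this repetitive input the adaptive model needs fewer bits than the uniform one, because it assigns higher probability to the symbols that actually occur. A stronger predictor would shrink the total further.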

B-3-2

We have developed LLM-based data compressors whose compression ratio is double that of current solutions. However, these compressors are hard to use because of their speed and size. We can create smaller and faster compression-targeted LLMs with BitNet, so that these compressors can run on cell phones.

B-3-3

We have developed LLM-based data compressors whose compression ratio is double that of current solutions. However, these compressors can only handle a single modality, while in real life, large volumes of data exist as a mixture of modalities (e.g., chat records in which people exchange both text and pictures). We could compress such multi-modal data together, exploiting the relevance between modalities to compress the data more compactly.

Algorithms and Complexity

Helia Yazdanyar (Sepehr Assadi)

C-1-1

Algorithms for k-Coloring Special Restricted Graphs

Vertex coloring is a central problem in graph theory and algorithms: given a graph, assign colors to its vertices so that no two adjacent vertices share the same color, using at most $k$ colors. While the problem is generally NP-hard, it becomes more tractable on restricted families of graphs. In particular, graphs that do not contain short induced paths—such as $P_4$-free or $P_5$-free graphs—have been shown to be $k$-colorable in polynomial time for small fixed $k$. These results are often proven using intricate structural theorems and combinatorial arguments, but explicit algorithms are rarely described.

This project explores the algorithmic side of these results. The goal is to design simple, efficient algorithms for $k$-coloring such restricted graph classes, focusing on those that exclude short paths (like $P_4$ or $P_5$) as induced subgraphs. The project will start by understanding the known structural results and identifying whether they can be turned into efficient procedures. Then, we will attempt to design new algorithms—possibly based on greedy methods, local rules, or decomposition strategies—that produce valid $k$-colorings on these graphs.

The project balances theory and practice: it offers an opportunity to prove algorithmic guarantees for special graph classes while also encouraging implementation and experimentation.
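For readers new to these definitions, the two conditions in play can be written as small checks: whether a coloring is valid for a given $k$, and whether a graph contains $P_4$ as an induced subgraph. This is only a brute-force illustration with hypothetical function names, assuming graphs are given as dictionaries mapping each vertex to its set of neighbors. It is not one of the algorithms the project aims to design.

```python
from itertools import permutations


def is_valid_coloring(adj, coloring, k):
    """True if every vertex has a color in range(k) and no edge is monochromatic."""
    for v, nbrs in adj.items():
        if coloring.get(v) not in range(k):
            return False
        if any(coloring.get(u) == coloring[v] for u in nbrs):
            return False
    return True


def has_induced_p4(adj):
    """Brute-force test for an induced path on 4 vertices (O(n^4) time)."""
    for a, b, c, d in permutations(adj, 4):
        path_edges = b in adj[a] and c in adj[b] and d in adj[c]
        no_chords = c not in adj[a] and d not in adj[a] and d not in adj[b]
        if path_edges and no_chords:
            return True
    return False


# A path on 4 vertices contains an induced P4; a 4-cycle does not,
# because the two ends of any 4-vertex path in it are adjacent.
path4 = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
cycle4 = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {2, 0}}
print(has_induced_p4(path4), has_induced_p4(cycle4))
print(is_valid_coloring(cycle4, {0: 0, 1: 1, 2: 0, 3: 1}, k=2))
```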

C-1-2

Improved Sublinear Algorithms for Quantum Graph Coloring

Quantum computing has opened up new frontiers in algorithm design, including for classic problems in graph theory. One such problem is $(\Delta+1)$-vertex coloring: assigning one of $\Delta+1$ colors to each vertex of a graph of maximum degree $\Delta$, such that adjacent vertices get different colors. While there are almost tight classical bounds on the query and time complexity of coloring algorithms in various models (e.g., sublinear, streaming, or distributed), the quantum setting remains much less understood.

This project explores the use of quantum query models to design sublinear-time algorithms for graph coloring. Specifically, the focus is on identifying whether quantum access to the graph—via adjacency, neighbor, or degree queries—can help improve the bounds for $(\Delta+1)$-coloring in comparison to classical methods. Known results in the quantum query model exhibit gaps between upper and lower bounds, and little is known about how to optimally leverage quantum access patterns in coloring tasks.

A natural starting point is to review the existing classical and quantum literature on graph coloring, sublinear algorithms, and quantum query models. From there, we can explore algorithmic strategies or even aim to prove new lower bounds. This project is well-suited for students with interests in graph theory, quantum computing, and sublinear algorithms.
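As a classical point of reference, the greedy algorithm always finds a $(\Delta+1)$-coloring, since each vertex has at most $\Delta$ neighbors and so at least one of the $\Delta+1$ colors is free. The sketch below (the function name and the read counter are illustrative, not part of the project) also counts how many adjacency-list entries it reads. The count is twice the number of edges, which is linear in the input size, and that cost is what sublinear algorithms, classical or quantum, try to beat.

```python
def greedy_delta_plus_one(adj):
    """Classical greedy coloring; also counts adjacency-list entries read."""
    max_degree = max((len(nbrs) for nbrs in adj.values()), default=0)
    coloring = {}
    entries_read = 0
    for v in adj:
        used = set()
        for u in adj[v]:
            entries_read += 1
            if u in coloring:
                used.add(coloring[u])
        # v has at most max_degree neighbors, so one of max_degree + 1 colors is free.
        coloring[v] = next(c for c in range(max_degree + 1) if c not in used)
    return coloring, entries_read


# A 5-cycle: maximum degree 2, so 3 colors suffice.
cycle5 = {i: {(i - 1) % 5, (i + 1) % 5} for i in range(5)}
coloring, entries_read = greedy_delta_plus_one(cycle5)
print(coloring, entries_read)
```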

C-1-3

Implementing Known Sublinear Algorithms for $(\Delta + 1)$-Coloring

Graph coloring with $(\Delta + 1)$ colors is a foundational problem in algorithms, with applications in scheduling, register allocation, and network resource management. In recent years, several powerful sublinear algorithms have been developed for this problem, particularly in models like Local Computation Algorithms (LCA) and semi-streaming. These algorithms provide strong theoretical guarantees while accessing only a small portion of the input graph.

One such algorithm is based on Asymmetric Palette Sparsification (APST), which builds on probabilistic techniques to assign small random palettes to each vertex and then efficiently find a valid coloring using only local information. While these methods are provably efficient, their practical performance on large graphs—especially those arising in real-world data—remains largely untested.

This project focuses on implementing recent sublinear $(\Delta + 1)$-coloring algorithms, including APST, and evaluating them on synthetic and real-world large-scale graphs. We aim to understand how the theoretical performance translates into practical runtime, space usage, and quality of output. Empirical studies can help uncover bottlenecks, suggest simplifications, and validate the algorithms' robustness on different graph topologies.

Beyond theoretical interest, such implementations are valuable for bridging the gap between modern algorithm design and practical large-scale graph processing. The project is well-suited for students who enjoy combining algorithmic thinking with coding and experimentation, and are curious about how recent theoretical insights hold up in practice.
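The palette idea described above can be sketched in a few lines: each vertex samples a small random list of colors, and only edges whose endpoints share a sampled color can ever cause a conflict, so the rest of the graph can be ignored. The code below is a simplified illustration, not the APST algorithm itself. It assumes the same list size for every vertex, an illustrative list size rather than the theorem's constants, and a greedy list-coloring step that may fail even when a valid list coloring exists. All function names are hypothetical.

```python
import math
import random


def sample_palettes(adj, list_size, rng):
    """Give each vertex a random list of colors drawn from {0, ..., max_degree}."""
    max_degree = max((len(nbrs) for nbrs in adj.values()), default=0)
    colors = range(max_degree + 1)
    size = min(list_size, max_degree + 1)
    return {v: set(rng.sample(colors, size)) for v in adj}


def conflict_edges(adj, palettes):
    """Keep only edges whose endpoints share at least one sampled color."""
    return {
        frozenset((u, v))
        for u in adj
        for v in adj[u]
        if palettes[u] & palettes[v]
    }


def greedy_list_coloring(adj, palettes, edges):
    """Try to color each vertex from its own list; return None if a vertex gets stuck.

    Greedy is a simplification used here for brevity: it can fail even
    when a valid coloring from the sampled lists exists.
    """
    coloring = {}
    for v in adj:
        blocked = {
            coloring[u]
            for u in adj[v]
            if u in coloring and frozenset((u, v)) in edges
        }
        free = sorted(palettes[v] - blocked)
        if not free:
            return None
        coloring[v] = free[0]
    return coloring


rng = random.Random(0)  # fixed seed so runs are repeatable
graph = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
list_size = math.ceil(math.log2(len(graph))) + 1  # illustrative choice only
palettes = sample_palettes(graph, list_size, rng)
sparse = conflict_edges(graph, palettes)
result = greedy_list_coloring(graph, palettes, sparse)
print(len(sparse), "conflict edges;", "coloring:", result)
```

Any coloring this returns is valid for the whole graph: two adjacent vertices with disjoint palettes cannot receive the same color, and adjacent vertices with overlapping palettes are checked explicitly.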

Data Systems and Networking

Zhengyuan Dong (Renee Miller)

D-1-1

Data/Model Lake cross NLP: There are good question-answering systems over tables. But these systems do not consider extending tables to better answer the questions. In this work, we will use a current question-answering system over tables but extend it to consider finding additional attributes for the table (joinable tables) or additional tuples for the table (unionable tables) when such extension can provide better answers. The student will learn how to use current question-answering systems and also how to extend them to answer more fine-grained or detailed questions. The student will also learn how to apply the latest scalable table search methods from data and model lakes.

We expect to produce a demo paper containing several illustrative case studies that showcase scenarios where our extended paradigm clearly outperforms the original table-QA pipeline. These studies will pinpoint convincing examples—such as multi-source schema integration or enriched tuple coverage—where joinable and unionable table extensions yield deeper, more accurate answers. The advanced goal is to evolve this work into a full benchmarking paper that systematically evaluates both baseline and extended QA systems across diverse datasets and query types.

D-1-2

Model Lake across Agent framework: Using agents to verify model cards and table discovery over model lakes. Currently model cards report performance results for models across different benchmarks but there is no reliable way to confirm that these values are correct. We will develop autonomous agents that (1) parse a model card’s reported metrics, cross-check them against the original benchmark sources, and flag any discrepancies, and (2) evaluate newly discovered tables from our table-discovery pipeline to ensure their data quality and semantic consistency. The student will learn to architect and deploy state-of-the-art agent frameworks—leveraging tools like LangChain, AutoGen, Camel, or others—on platforms such as Hugging Face model lakes.

D-1-3

Model Lake across Link Prediction (Graph): Link prediction over data and model lake graphs. Model lakes like Hugging Face provide semantic links between models and tables that can be modeled as a graph. An example is when one model card references a paper that is also cited by another model card. But this graph may not be complete. We will use the latest link prediction methods to infer missing edges in a model-lake graph. We will also incorporate data-to-data similarity edges—capturing when two datasets share structural or semantic overlap—and explicitly model model-data training relationships (which datasets were used to train each model) alongside model-model variant relationships (fine-tuned, quantized, distilled, etc.). By building a heterogeneous graph of these node and edge types, we can both validate existing connections (flagging improbable or inconsistent links) and predict high-confidence new relationships. The student will learn to apply already developed graph tools to jointly reason over model–data–model interactions.
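To illustrate what predicting a missing edge means, the sketch below scores every unlinked pair of nodes by how much their neighborhoods overlap (the Jaccard coefficient). This is a simple classical baseline, not one of the recent methods the project will use, and the node names are made up for the example.

```python
from itertools import combinations


def jaccard_scores(adj):
    """Score every non-adjacent pair by neighborhood overlap (Jaccard coefficient)."""
    scores = {}
    for u, v in combinations(sorted(adj), 2):
        if v in adj[u]:
            continue  # already linked, nothing to predict
        union = adj[u] | adj[v]
        if union:
            scores[(u, v)] = len(adj[u] & adj[v]) / len(union)
    return scores


# Hypothetical toy graph: model cards linked to the paper and dataset they reference.
graph = {
    "model_a": {"paper_x", "dataset_y"},
    "model_b": {"paper_x", "dataset_y"},
    "model_c": {"paper_x"},
    "paper_x": {"model_a", "model_b", "model_c"},
    "dataset_y": {"model_a", "model_b"},
}
scores = jaccard_scores(graph)
for pair, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(pair, round(score, 2))
```

Pairs with a high score, such as two model cards that reference the same paper and dataset, are candidates for a missing link. Pairs with no shared neighbors score zero.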

Kimiya Mohammadtaheri (Mina Tahmasbi Arashloo)

D-2-1

This project explores how formal verification can help test network transport layer code by automatically generating useful test cases. Formal verification checks whether a program behaves correctly in all situations, which is especially useful in networking, where small bugs can cause big problems. We will use a tool called KLEE, which uses symbolic execution. Instead of running a program with specific inputs, it treats the inputs as symbolic values and explores the execution paths they can reach, looking for bugs and edge cases. However, KLEE has some limitations. It does not handle loops or input/output very well because these can create a huge number of different scenarios to explore. Transport layer code often involves both. For example, it might send many packets in a loop or copy received data to memory, and KLEE can easily get overwhelmed trying to explore all paths. One way to work around this is by simplifying the code. Instead of actually sending n packets in a loop, the program could simply record that it would send n packets. These kinds of shortcuts, or abstractions, can make the code much easier for KLEE to analyze without losing important behavior. In this project, we will explore different ways to abstract transport layer code so that tools like KLEE can be used more effectively.
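The loop abstraction mentioned above can be pictured as follows. This is a Python stand-in for illustration only: KLEE analyzes programs compiled to LLVM bitcode (typically written in C), and the function and class names here are hypothetical. The concrete version does one step per packet, while the abstracted version records the same overall effect in a single update.

```python
def send_concrete(n, send_packet):
    """Concrete version: one call, and one loop iteration to explore, per packet."""
    for seq in range(n):
        send_packet(seq)


class AbstractSender:
    """Abstracted version: remember the effect of the loop, not each step."""

    def __init__(self):
        self.packets_sent = 0
        self.next_seq = 0

    def send_abstract(self, n):
        # A single straight-line update replaces the n-iteration loop.
        self.packets_sent += n
        self.next_seq += n


sent_log = []
send_concrete(5, sent_log.append)

sender = AbstractSender()
sender.send_abstract(5)
print(len(sent_log), sender.packets_sent, sender.next_seq)
```

Both versions agree on how many packets were sent and on the next sequence number, which is the behavior a later check would care about. The abstract version simply has no loop whose iterations need to be explored.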

D-2-2

This project aims to use AI to automatically generate code and unit tests from network protocol specification documents, known as RFCs. These documents describe how a network protocol should behave and are meant to guide programmers when building systems that follow that protocol. While RFCs are usually well-written and detailed, turning them into actual code can be a slow and tedious process. That’s where AI can help. Our goal is to build an AI that can read these documents and generate working code based on the protocol descriptions. Additionally, we would need to verify the correctness of the generated program. So we also want the AI to create test cases that check whether the generated code behaves correctly. Before using these test cases, we will manually verify them to make sure they truly reflect what the protocol expects. This way, we can be confident in the results when the tests are run. Overall, this project focuses on designing and building the AI system that can handle these tasks, as well as creating a test environment where we can run the generated code and validate its correctness.

D-2-3

This project focuses on exploring and understanding different types of distributed network applications and categorizing them based on their performance needs. Distributed applications are programs that run across multiple machines connected over a network and must coordinate or exchange data regularly. These include systems like distributed databases, AI training, and AI inference services. In recent years, there have been many efforts to improve network performance, such as increasing throughput, reducing latency, or boosting reliability. While these improvements are useful, not all types of applications benefit from them in the same way since each of these has different communication patterns and performance requirements. In this project, we aim to study and experiment with various distributed applications, understand their specific networking needs, and see how they perform under different network conditions. The goal is to define meaningful categories for these applications based on their behavior and requirements, which can help guide future work in designing and optimizing networks for different types of distributed applications.

Software Engineering

Bihui Jin (Pengyu Nie)

E-1-1

WildCursor captures developer IDE interactions—code edits, execution outputs, and LLM suggestion feedback—via a VS Code extension. Undergraduates will help build data-filtering pipelines to extract and curate one of several focused sub-datasets, laying the groundwork for future benchmarks and model training.

Sub-dataset Options: code co-editing, code-comment update, code-test update, and LLM suggestion acceptance. Student Responsibilities: Define Filtering Criteria (e.g., regex), Implement Extraction Scripts (e.g., Python, parsers), Validate Data Quality. The lead PhD student will help undergraduates learn the above methods and design the procedures.

Students will gain hands-on experience in large-scale data processing, code analysis, and the challenges of preparing real-world interaction-driven benchmarks (all within the context of LLM-powered software development).

E-1-2

Modern browsers support thousands of third-party extensions that enrich functionality but may collect sensitive data and degrade performance. In our study, we collected 21,012 Chrome extensions across 18 categories. Undergraduates will help design and execute experiments to explore how an extension’s software structure and processing pipelines influence its runtime overhead.

Key tasks include: Instrumentation (measuring metrics like runtime, CPU, and energy usage), Structural Analysis (analyzing code metrics, e.g., cyclomatic complexity, and build pipelines, e.g., event listeners, background scripts), and Data Analysis (using statistical methods).

The lead PhD student will help students learn the above methods and design the procedures. Students will gain hands-on experience in web automation, performance profiling, statistical analysis, and interpreting performance trade-offs in real-world browser extensions.

E-1-3

Kaggle hosts tens of thousands of interactive Jupyter notebooks covering diverse ML problems. However, notebooks often contain execution outputs, environment-specific metadata, and inconsistent cell structures that hinder large-scale analysis and downstream tasks (e.g., code-editing benchmarks).

Key Tasks: Notebook Parsing & Filtering, Quality Validation, Metadata Extraction, and Deliverables.

Undergrads will help design a pipeline to clean and normalize a Kaggle‐sourced notebook corpus. Students will gain hands-on experience with large-scale data wrangling, automated testing, and preparing real-world code artifacts for machine learning research.

Responsibilities and Expectations

Participants will need to certify that they understand and will adhere to the following responsibilities and expectations:

- Engage in a research project with your research group (1-3 other undergraduates), a graduate student mentor, and a faculty member (if applicable)

- Actively participate in the same course session and time as your research team (i.e., attending all course sessions with camera on, arriving on time, participating in discussion when prompted, completing course assignments in a timely fashion). Students will be allowed no more than 2 unexcused absences throughout the course.

- Participate in program evaluation efforts, as requested

- Attend in-person program requirements, including in-person research meetings and meetups