Design team members: Aishwar Muthuraman, Jesse Ross-Jones, Xiuran (Mimi) Xia

Supervisor: Prof. David Clausi

Background

Most current multi-touch tables that are marketed as multi-user lack the ability to distinguish which user initiated a specific touch. The technology used in this project is the Frustrated Total Internal Reflection (FTIR) multi-touch table. FTIR tables are currently limited in their ability to interact with the user and to fully interpret user actions [1]. Recognizing the user who performs a touch is beneficial when a user-specific action is required. Examples include multiple users working on the surface with different permissions to perform actions, games in which moves need to be attributed to specific players, and multiple members of a family in an IKEA showroom simultaneously designing their own rooms on one surface.

For this purpose, our group will work with the Table-Top group, consisting of Arthur Chow, Gartheepan Rasaratnam, and Tianyang Chang. Their group will develop an application with a user interface that provides a seamless user experience, allowing family members to design their own rooms simultaneously (multi-user).

Project description

The objective for our final system is to support a multi-touch table for 2 to 4 able-bodied users. The system will non-intrusively identify each user using computer vision. A single webcam provides an overhead video feed, which is then processed by software. The system also supports a user authentication module. To facilitate natural interaction, the system must not have significant lag.

Design methodology

The implementation of the system has been deliberately kept modular so that it can easily be upgraded in the future to accommodate the evolving needs of the system. Each sub-system is being designed iteratively. For example, the user identification module is being developed separately from the function that maps user hand locations to the corresponding pixels on the display. Currently, we are using probabilistic colour-detection algorithms to locate the users' hands as well as to identify each user uniquely [2]. The current algorithm has some limitations that we hope to address in a later iteration that would support more users. The skin detection algorithm can also be easily adapted to identify different colour markers worn by different users, should such a system be implemented.
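As an illustration of what probabilistic colour detection can look like, the sketch below scores each pixel with a single Gaussian over normalized chromaticity, which is one common way to build a skin colour model. It is not necessarily the model used in this project. The names `SKIN_MEAN`, `SKIN_COV`, `skin_likelihood`, and `skin_mask`, as well as the numeric values and the threshold, are hypothetical placeholders. Plain Python lists are used only to keep the example self-contained.

```python
import math

# Hypothetical model parameters: mean and covariance of skin chromaticity (r, g).
# These are illustrative placeholders, not values measured for this project.
SKIN_MEAN = (0.45, 0.31)
SKIN_COV = ((0.0025, 0.0), (0.0, 0.0009))


def chromaticity(pixel):
    """Convert an (R, G, B) pixel to normalized (r, g), discarding brightness."""
    red, green, blue = pixel
    total = red + green + blue
    if total == 0:
        return (0.0, 0.0)
    return (red / total, green / total)


def skin_likelihood(pixel, mean=SKIN_MEAN, cov=SKIN_COV):
    """Return exp(-0.5 * d^2), where d is the Mahalanobis distance to the mean."""
    r, g = chromaticity(pixel)
    dr, dg = r - mean[0], g - mean[1]
    (a, b), (c, d) = cov
    det = a * d - b * c
    # Quadratic form of the offset with the inverse of the 2x2 covariance.
    dist_sq = (d * dr * dr - (b + c) * dr * dg + a * dg * dg) / det
    return math.exp(-0.5 * dist_sq)


def skin_mask(image, threshold=0.5):
    """Label each pixel of a row-major image as skin (True) or not (False)."""
    return [[skin_likelihood(p) >= threshold for p in row] for row in image]
```

Swapping in a different mean and covariance would let the same functions detect a coloured marker instead of skin, which is the kind of adaptation described above.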

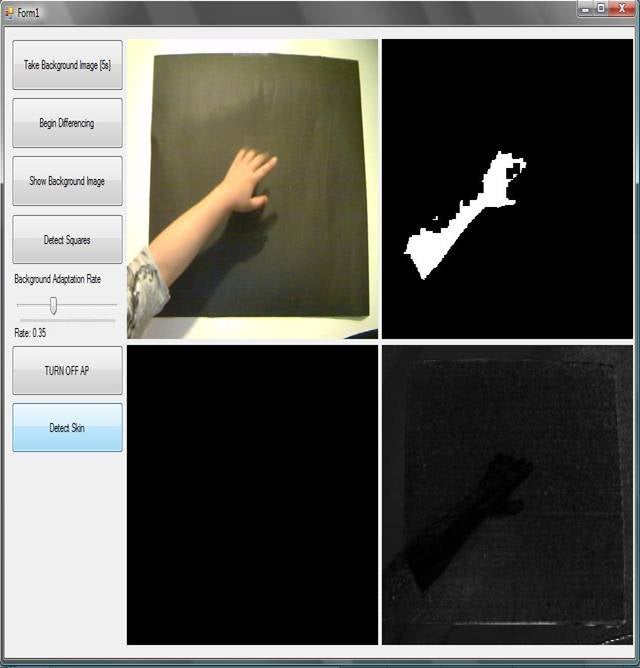

Colour Detection Algorithm applied to skin detection of a prerecorded video

To ensure robustness, the colour-detection system is supplemented with an adaptive background motion detection algorithm that captures users' moving hands.
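A minimal sketch of adaptive background motion detection follows, assuming a running-average background model on grayscale frames. The project's actual algorithm is not specified here, so the function names, the learning rate `alpha`, and the `threshold` are all assumptions chosen for illustration.

```python
def update_background(background, frame, alpha=0.05):
    """Blend the new frame into the background: B <- (1 - alpha) * B + alpha * F."""
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def motion_mask(background, frame, threshold=25.0):
    """Mark pixels whose intensity differs from the background by more than threshold."""
    return [
        [abs(f - b) > threshold for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def detect_motion(frames, alpha=0.05, threshold=25.0):
    """Yield one motion mask per frame after the first, adapting the background each step."""
    frames = iter(frames)
    first = next(frames, None)
    if first is None:
        return
    # The first frame seeds the background model.
    background = [[float(v) for v in row] for row in first]
    for frame in frames:
        yield motion_mask(background, frame, threshold)
        background = update_background(background, frame, alpha)
```

Because the background is updated slowly, gradual lighting changes are absorbed into the model while a quickly moving hand still stands out. A larger `alpha` adapts faster but risks absorbing a hand that pauses over the table.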

Adaptive Background Motion Detection

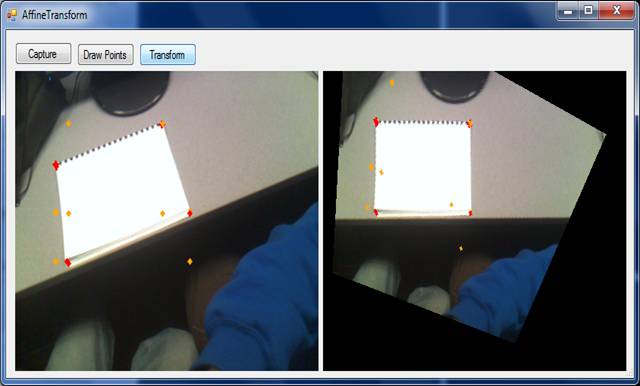

The other main objective of this system is to match users’ motion in the image to actual locations on the tabletop. To accomplish this, the corners of the tabletop are located first. These points are then used to compute a perspective transformation matrix. Using this matrix, points in the video can be transformed to points on the tabletop. In this way, when a user is detected in the video, the system can compute where that user is spatially on the table.
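The mapping described above can be sketched as follows, assuming the four tabletop corners have already been located in the video frame. The sketch solves for the 3x3 perspective matrix from four point correspondences using a small linear solver. The function names `solve_linear`, `perspective_matrix`, and `to_table` are hypothetical, and a production system would more likely rely on a computer vision library for this step.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def perspective_matrix(src, dst):
    """Compute the 3x3 matrix mapping four image corners (src) to table corners (dst)."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the eight unknowns.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = solve_linear(rows, rhs)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def to_table(matrix, point):
    """Map an image point to tabletop coordinates, dividing by the projective scale."""
    x, y = point
    w = matrix[2][0] * x + matrix[2][1] * y + matrix[2][2]
    u = (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2]) / w
    v = (matrix[1][0] * x + matrix[1][1] * y + matrix[1][2]) / w
    return (u, v)
```

For example, with image corners `[(100, 50), (540, 50), (620, 430), (20, 430)]` and a 1024 by 768 display as the destination rectangle, `to_table` converts a detected hand position in the frame into display pixel coordinates.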

Perspective transform used to correct the perspective of the rectangle

References

[1] K.C. Dohse, T. Dohse, J.D. Still, and D.J. Parkhurst, "Enhancing Multi-user Interaction with Multi-touch Tabletop Displays Using Hand Tracking," First International Conference on Advances in Computer-Human Interaction, pp. 297-302, 10-15 Feb. 2008.

[2] H. Chang and U. Robles (2000, May). Skin Color Model. [Online]. http://www-cs-students.stanford.edu/~robles/ee368/skincolor.html