A new study by Dr. Alexander Wong, professor of systems design engineering (SYDE) at the University of Waterloo, and researchers from DarwinAI was featured in TechTalks, a leading online publication on artificial intelligence.

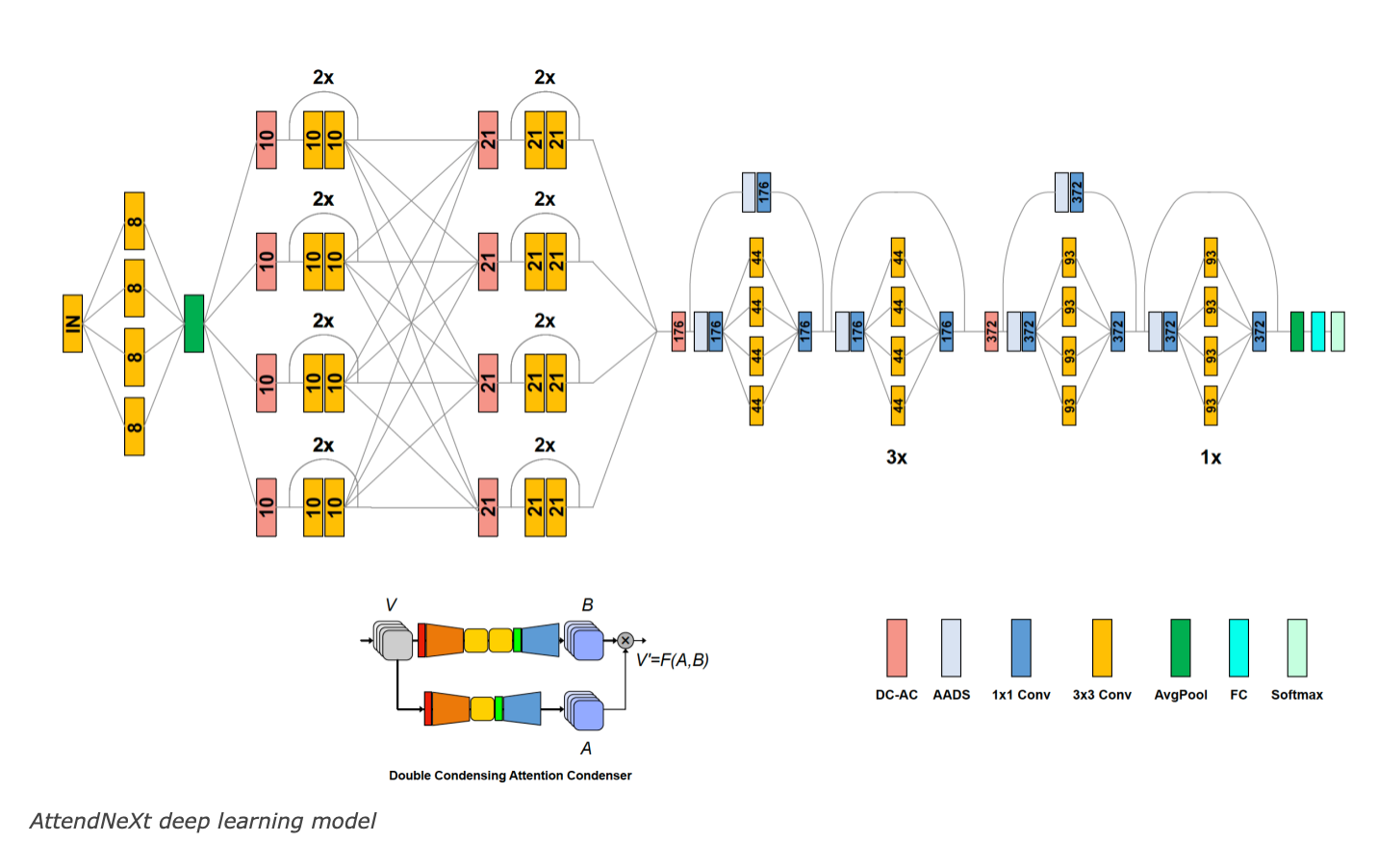

The paper introduces a new deep learning architecture that brings highly efficient self-attention to TinyML. Called “double-condensing attention condenser (DC-AC),” the architecture builds on the team’s previous work and is promising for edge AI applications.

TinyML is a branch of machine learning (ML) used in resource-constrained environments, such as on battery-operated devices or where no internet connection is available. Demand for TinyML innovations that yield smaller and faster deep neural networks is driven by a range of commercial applications, from smartphones and wearable technologies to managing patient data in healthcare settings.

The results of the study show opportunities to improve TinyML performance by using a newly designed neural network architecture called “AttendNeXt” and by enhancing self-attention mechanisms.

“By introducing this double-condensing attention condenser mechanism, we were able to greatly reduce complexity while maintaining high representation performance, meaning that we can create even smaller network designs at high accuracy given the better balance,” Wong said.

The columnar architecture of the network enables different branches to learn disentangled embeddings in the early layers. In the deeper layers of the network, the columns merge and the channels gradually increase, enabling the self-attention blocks to cover wider areas of the original input.
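
For readers who want a feel for that layout, the following is a minimal toy sketch in Python with NumPy. It is not the AttendNeXt implementation. The function names, column count, channel sizes, random 1x1 projections, and 2x2 average pooling are all assumptions chosen for illustration. It only traces how independent columns can be merged and how channel counts grow while each feature position comes to summarize a wider patch of the input.

```python
import numpy as np


def pointwise(x, out_channels, rng):
    """Hypothetical 1x1 channel-mixing projection: (C, H, W) -> (out_channels, H, W)."""
    weights = rng.standard_normal((out_channels, x.shape[0])) / np.sqrt(x.shape[0])
    return np.einsum("oc,chw->ohw", weights, x)


def downsample(x):
    """2x2 average pooling (an assumption): (C, H, W) -> (C, H/2, W/2)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def toy_columnar_forward(image, num_columns=4, column_channels=8, num_stages=3, seed=0):
    """Trace feature-map shapes through a toy columnar network."""
    rng = np.random.default_rng(seed)

    # Early layers: every column has its own weights and sees the same input,
    # so each one is free to learn a different embedding.
    columns = [pointwise(image, column_channels, rng) for _ in range(num_columns)]

    # Deeper layers: merge the columns along the channel axis.
    x = np.concatenate(columns, axis=0)

    shapes = [x.shape]
    footprint = 1            # input pixels per side summarized by one position
    footprints = [footprint]

    for _ in range(num_stages):
        x = downsample(x)                      # halve the spatial resolution
        x = pointwise(x, x.shape[0] * 2, rng)  # double the channel count
        footprint *= 2
        shapes.append(x.shape)
        footprints.append(footprint)

    return shapes, footprints


shapes, footprints = toy_columnar_forward(np.ones((3, 32, 32)))
for shape, fp in zip(shapes, footprints):
    print(f"feature map {shape}: each position covers a {fp}x{fp} input patch")
```

In this toy version, an attention block placed after a later stage would operate on fewer, coarser positions, which is one way such a block can relate wider regions of the original input at lower cost.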

Read the full paper: "Faster Attention Is What You Need: A Fast Self-Attention Neural Network Backbone Architecture for the Edge via Double-Condensing Attention Condensers"