Design team members: Joannes Chan, Jason Dai, Raymond Szeto

Supervisor: Professor P. Fieguth

Background

Picture yourself browsing around the local IKEA on a quest to find a new bookshelf for your bedroom. After an exhausting couple of hours of searching you finally spot it; you see the perfect bookshelf that you feel you absolutely must own. However, while it looks perfectly fine in the store's mock bedroom, you are still unsure about some things. Is your own room at home big enough to fit the shelf? Will its colour match your wallpaper? Does the slightly more expensive mahogany version of the shelf actually look better with the rest of your furniture? You could, of course, just gamble on the leniency of the store's return policy and buy the bookshelf with the hopes that everything works out fine. However, there is a better way.

Imagine that instead of going out to the store to find the right bookshelf, you could sit at home and have various bookshelves instantly come to you. With a system more sophisticated than a simple web-page shopping site, you could place each shelf in your room to your liking, walk around it to get a sense of whether or not it fits, and move on to the next one if it does not. After deciding on the right shelf, you could then order it online, without ever having to leave your home, confident that it will fit in your room. While seemingly infeasible, a shopping experience such as this can now be made possible with the use of augmented reality technology.

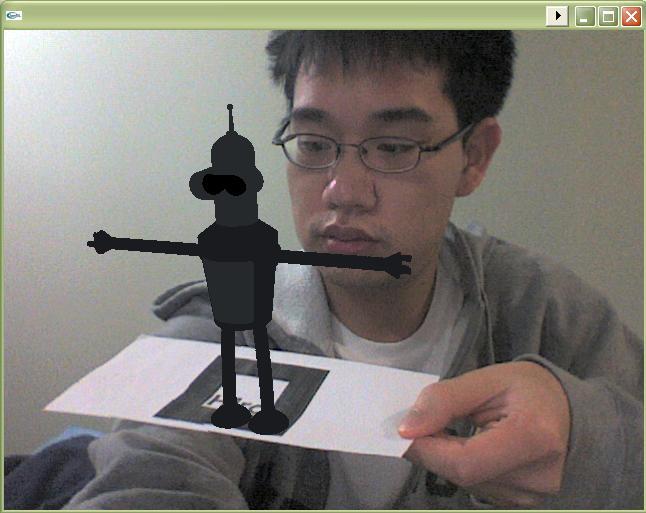

Augmented reality describes a hybrid reality where people are given the ability to visualize virtual objects in their immediate physical environments in the form of real-time 3D computer graphics. This is typically achieved by giving the user a headset which overlays the relevant virtual objects with the correct spatial orientation so that they look as if they exist in the real environment. Within the context of the furniture example, augmented reality technology could allow users to position and overlay virtual bookcases around their room. Figure 1 illustrates the concept of augmented reality with a virtual robot character correctly oriented so as to lie on the plane of the real paper. Figure 2 further illustrates the possibilities of blending real and virtual objects within the augmented reality space.

Figure 1: Projecting a virtual object into a real space

Figure 2: Blending the use of virtual and real objects

Augmented reality is an extremely promising area of research that has progressed steadily in the last several years. As a result, there now exist many tools which allow software developers to project virtual objects onto physical markers embedded with specific patterns. Our group is focusing on what we feel is the next stage in research: the manipulation of virtual objects in an augmented reality system.

Our group hopes to use concepts from human factors and cognitive ergonomics to evaluate various options for user interactions. As well, we hope to use image processing and pattern recognition techniques to develop a system that robustly extracts and analyzes user motion. As a bare minimum, we hope to develop a system that will allow a user to intuitively translate, rotate and scale virtual objects. Furthermore, we wish to develop a user-friendly interface that will allow users to move points of articulation on these virtual objects as well.
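As a rough illustration of what translating, rotating and scaling a virtual object involves, the following minimal sketch composes the three operations as 4x4 homogeneous matrices and applies the result to one point of an object. It is not the group's implementation: the helper names (`translation`, `rotation_z`, `scaling`, `matmul`, `apply`) and the sample values are assumptions chosen for the example, and rotation is shown about a single axis only.

```python
import math


def translation(tx, ty, tz):
    """4x4 homogeneous matrix that moves a point by (tx, ty, tz)."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_z(angle_rad):
    """4x4 homogeneous matrix that rotates about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def scaling(factor):
    """4x4 homogeneous matrix that scales uniformly by `factor`."""
    return [[factor, 0.0, 0.0, 0.0],
            [0.0, factor, 0.0, 0.0],
            [0.0, 0.0, factor, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def apply(matrix, point):
    """Transform a 3D point by a 4x4 homogeneous matrix."""
    x, y, z = point
    v = (x, y, z, 1.0)
    out = [sum(matrix[i][k] * v[k] for k in range(4)) for i in range(4)]
    return tuple(out[:3])


# Scale first, then rotate, then translate (matrices apply right to left).
# The values below are hypothetical, chosen only for illustration.
model = matmul(translation(1.0, 0.0, 0.0),
               matmul(rotation_z(math.pi / 2), scaling(2.0)))

# One corner of a hypothetical virtual object, in its own coordinates.
corner = apply(model, (1.0, 0.0, 0.0))
```

The order of composition matters: scaling and rotating before translating keeps the object turning and resizing about its own origin rather than about the origin of the marker.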

Project description

Our overall objective is to design a solution which enables the general public to intuitively manipulate virtual objects within their immediate physical environments. There are four main components to the overall solution:

- A physical marker must be tracked and a virtual object projected onto it accordingly.

- A headset needs to be developed which will allow a person to view the virtual object.

- An input device needs to be created which will allow for interaction with the virtual objects.

- A system needs to be developed that will map natural hand motions into meaningful interactions with the virtual object. Hand motions will be analyzed using some form of feature extraction and pattern recognition.

Design methodology

Our group is using a nested design process, wherein a baseline project was determined from which subsequent expansions will be made. The baseline project is a simplified version of the aforementioned components. In the baseline project, a person will be capable of manipulating a virtual object on their computer using a web camera and a mouse. This milestone has already been completed. In developing a tracking system, our group primarily used ARToolkit, a popular tool in the augmented reality community. We also developed our own code to allow manipulation of the virtual object with a mouse.

The group is now focusing on various expansions. We are looking into using a P5 glove as an input device; the P5 glove consists of flexible rods that surround each finger and record how far the finger bends. This will potentially allow a user to "grasp" an object with their hands. We are also in the early stages of developing a headset for the user to wear. We plan on using two web cameras to mimic stereoscopic vision, and have performed various experiments to ensure that the web cameras can produce a desirable stereoscopic effect.
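One way finger-bend readings could be turned into a "grasp" gesture is sketched below. This is only an illustration under stated assumptions, not the group's design and not the P5 glove's actual interface: it assumes each finger's bend has already been normalized to a value between 0.0 (straight) and 1.0 (fully bent), and the `GraspDetector` class and its threshold values are hypothetical. Two thresholds are used so that small fluctuations near a single cut-off do not make the grasp flicker on and off.

```python
from dataclasses import dataclass


@dataclass
class GraspDetector:
    """Tracks whether the hand is grasping, based on average finger bend.

    Bend values are assumed to be normalized to the range 0.0 to 1.0;
    the thresholds are illustrative and would need tuning on real data.
    """
    close_threshold: float = 0.7  # mean bend needed to start a grasp
    open_threshold: float = 0.4   # mean bend below which a grasp ends
    grasping: bool = False

    def update(self, bends):
        """Take one frame of per-finger bend values; return grasp state."""
        mean_bend = sum(bends) / len(bends)
        if not self.grasping and mean_bend >= self.close_threshold:
            self.grasping = True
        elif self.grasping and mean_bend <= self.open_threshold:
            self.grasping = False
        return self.grasping


# Four hypothetical frames of five finger readings each.
detector = GraspDetector()
frames = [
    [0.1, 0.1, 0.1, 0.1, 0.1],  # open hand
    [0.8, 0.8, 0.8, 0.8, 0.8],  # fingers closed: grasp starts
    [0.5, 0.5, 0.5, 0.5, 0.5],  # between thresholds: grasp is held
    [0.2, 0.2, 0.2, 0.2, 0.2],  # hand opens: grasp ends
]
states = [detector.update(frame) for frame in frames]
```

While the detector reports a grasp, the virtual object could follow the tracked hand position, and it would be released when the state returns to open.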

With continued progress, we hope to develop a solution that will eventually satisfy all four components of the proposed solution.