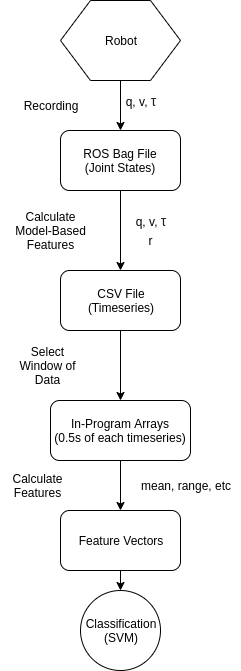

This project aims to detect and classify physical interactions between a human and the robot, along with the human's intent behind each interaction. Examples include an accidental bump, a helpful adjustment, or pushing the robot out of a dangerous situation.

By distinguishing between these cases, the robot can adjust its behaviour accordingly. The framework begins by collecting feedback from the robot's joint sensors, including position, speed, and torque. Additional features, such as model-based torque estimates and joint power, are then derived from these signals.
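As a rough illustration of the feature step, the sketch below derives joint power from measured torque and speed, using the standard relation that mechanical power equals torque times angular velocity. The function name `joint_features`, the argument layout, and the torque residual (measured minus model-based torque) are assumptions made for this example and are not taken from the project itself. The model-based torque is assumed to come from an external inverse-dynamics computation.

```python
import numpy as np


def joint_features(position, velocity, torque, model_torque):
    """Build a per-sample feature dictionary from joint feedback.

    All inputs are array-likes of shape (n_joints,) or (n_samples, n_joints).
    `model_torque` is assumed to be supplied by a separate dynamics model;
    this sketch does not compute it.
    """
    position = np.asarray(position, dtype=float)
    velocity = np.asarray(velocity, dtype=float)
    torque = np.asarray(torque, dtype=float)
    model_torque = np.asarray(model_torque, dtype=float)

    # Mechanical joint power: torque times angular velocity, per joint.
    power = torque * velocity

    # Hypothetical extra feature: mismatch between measured and modelled
    # torque, which may hint at an external contact.
    residual = torque - model_torque

    return {
        "position": position,
        "velocity": velocity,
        "torque": torque,
        "model_torque": model_torque,
        "power": power,
        "torque_residual": residual,
    }


if __name__ == "__main__":
    # Made-up values for a two-joint example, for illustration only.
    feats = joint_features(
        position=[0.1, -0.3],
        velocity=[1.0, -2.0],
        torque=[0.5, 3.0],
        model_torque=[0.4, 2.0],
    )
    print(feats["power"], feats["torque_residual"])
```

The resulting arrays could be concatenated into a single vector per time step before being passed to a classifier, although the project's actual feature layout may differ.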

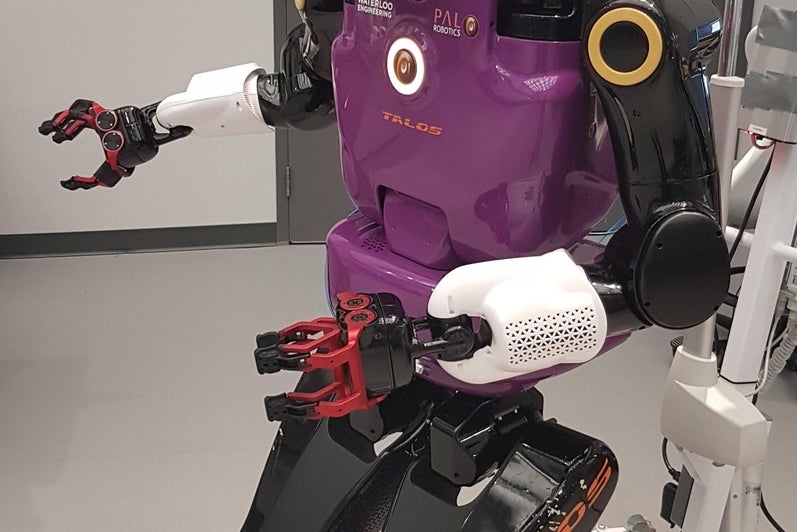

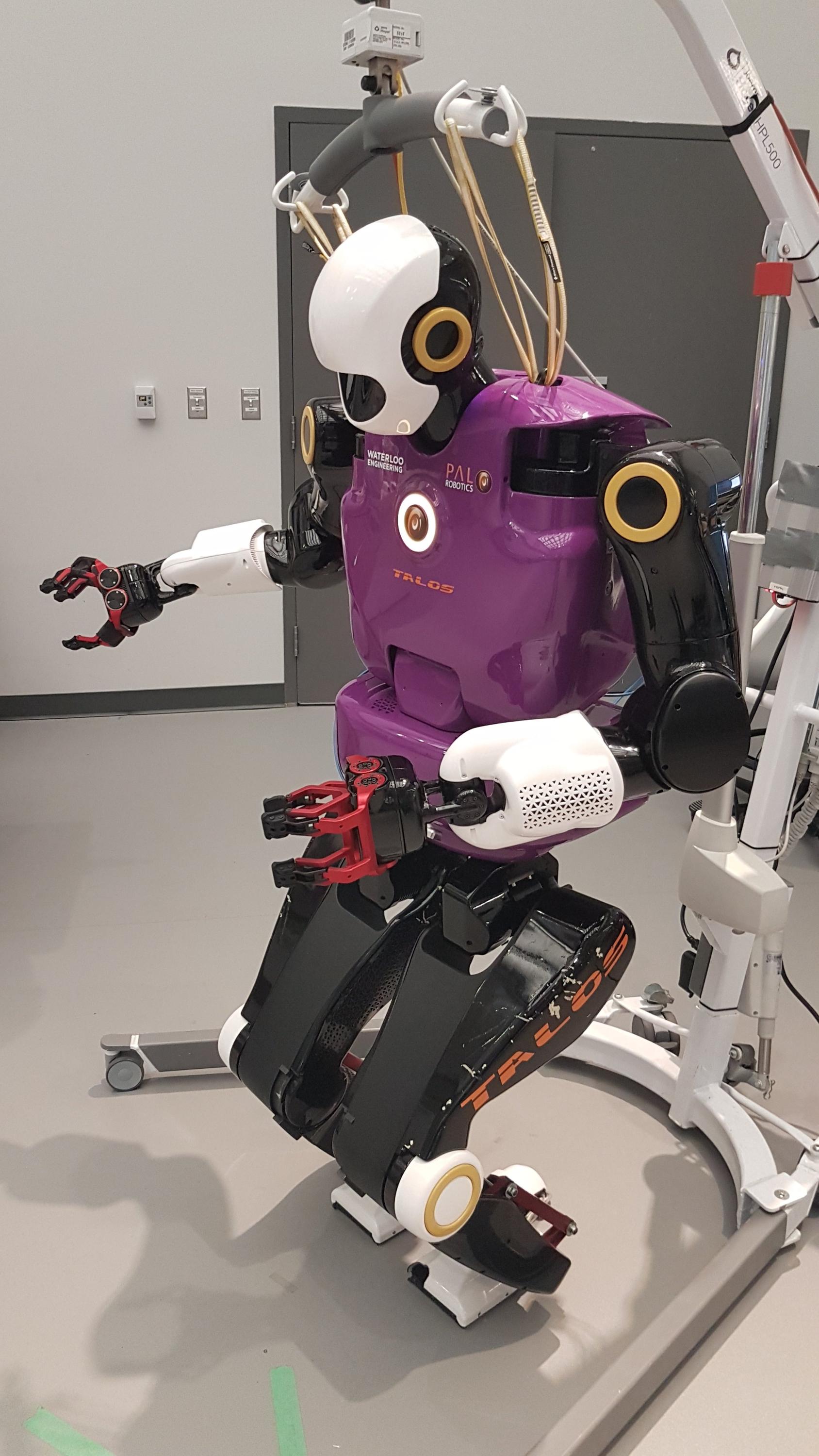

This project will then investigate two areas. The first is using machine learning on the recorded data to classify interactions. The second is studying how various situations affect the performance of such a classifier. The end goal is a functional classification system running on the TALOS humanoid robot.