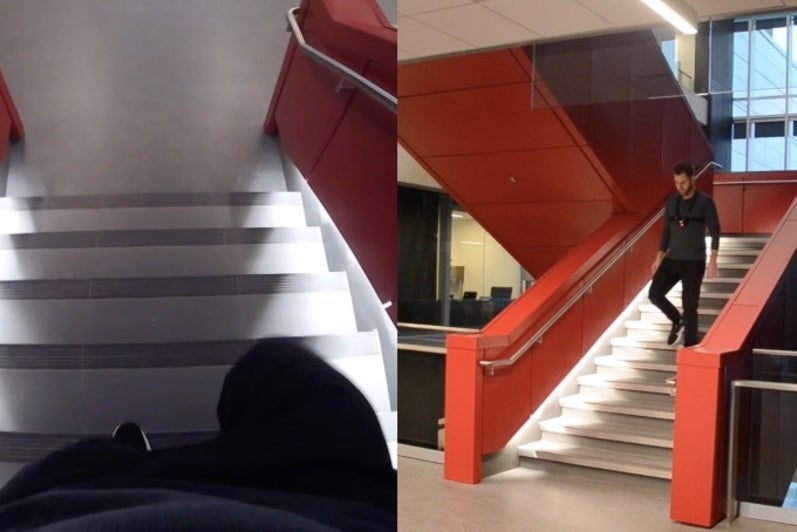

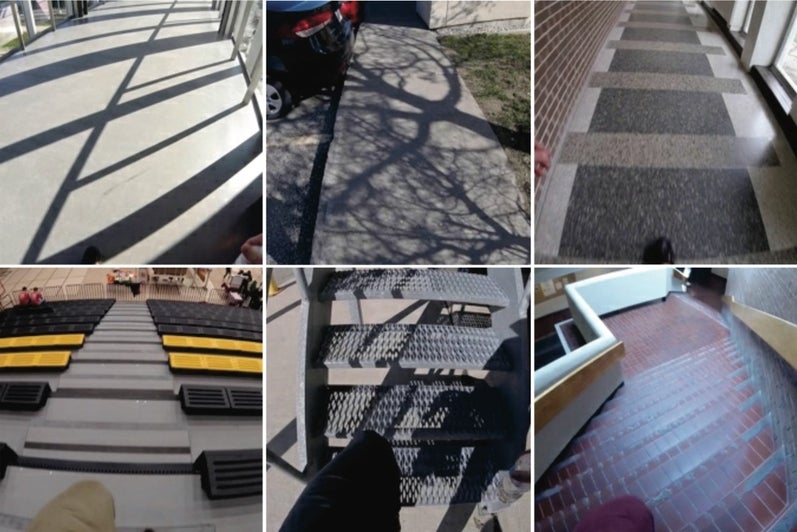

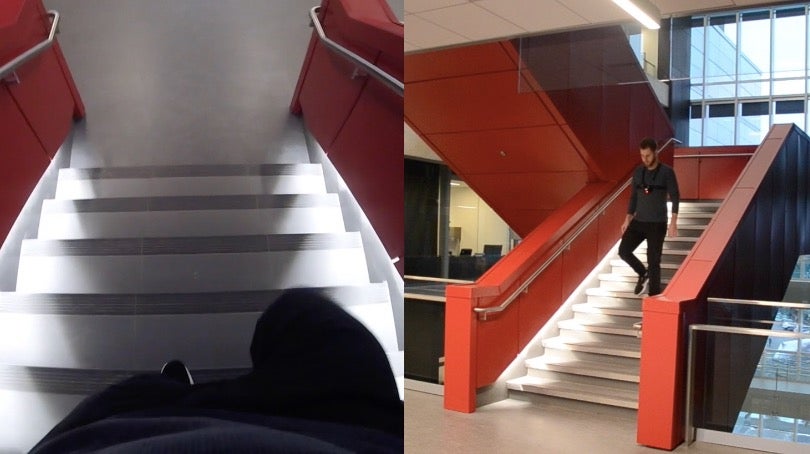

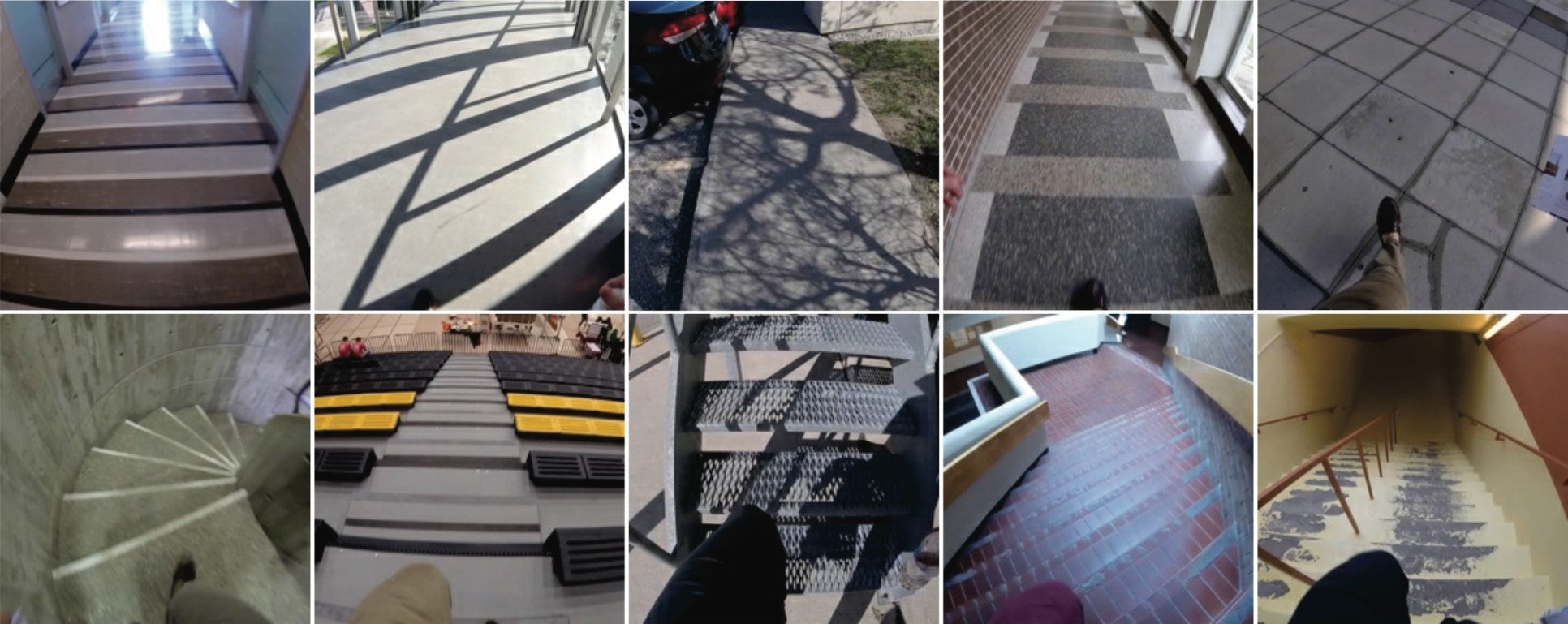

This project combines wearable computer vision and deep learning for real-time sensing and classification of human walking environments. Applications include optimal path planning, obstacle avoidance, and environment-adaptive control of robotic exoskeletons. These powered biomechatronic devices assist individuals with mobility impairments resulting from ageing and/or physical disabilities.
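
The source does not describe how classifier outputs feed into environment-adaptive control. As a hedged sketch, assuming a controller with discrete locomotion modes, one common approach is to smooth the per-frame environment predictions with a majority vote over a short sliding window, so that a single misclassified frame does not trigger a mode switch. The class `ModeSelector`, the window length, and the environment labels are all hypothetical and are not taken from this project.

```python
from collections import Counter, deque


class ModeSelector:
    """Hypothetical mode selector: majority-vote smoothing of per-frame labels.

    The labels (e.g. "level_ground", "incline_stairs") are illustrative
    placeholders, not the project's actual class names.
    """

    def __init__(self, window: int = 5, initial_mode: str = "level_ground"):
        self.history = deque(maxlen=window)  # most recent per-frame predictions
        self.mode = initial_mode             # currently active controller mode

    def update(self, label: str) -> str:
        """Add one frame's predicted label and return the (possibly new) mode."""
        self.history.append(label)
        top_label, count = Counter(self.history).most_common(1)[0]
        # Switch only once the window is full and one label holds a strict majority.
        if len(self.history) == self.history.maxlen and count > self.history.maxlen // 2:
            self.mode = top_label
        return self.mode


if __name__ == "__main__":
    selector = ModeSelector(window=5)
    frames = ["level_ground", "incline_stairs", "level_ground",
              "incline_stairs", "incline_stairs", "incline_stairs"]
    print([selector.update(f) for f in frames])
```

With a window of five frames, the mode stays at `"level_ground"` for the first four frames and switches to `"incline_stairs"` only on the fifth, when that label first holds three of the five slots. A longer window suppresses more noise but delays transitions, which is the main trade-off in choosing it.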

The team is currently developing large-scale image datasets collected with wearable cameras, together with efficient convolutional neural networks for real-time environment classification. For more information on this project, see the recent publications in the IEEE International Conference on Rehabilitation Robotics (ICORR), Frontiers in Robotics and AI, and the IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob).

The researchers recently reported benchmark classification performance on their large-scale image dataset, ExoNet, using an efficient convolutional neural network (see paper). Such lightweight deep learning models are well suited to onboard, real-time inference for robotic exoskeleton control.
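
This section does not state which architecture was used for the ExoNet benchmark. As a hedged illustration of why "efficient" networks suit onboard inference, the sketch compares the cost of a standard convolution with that of a depthwise separable convolution, the building block popularized by MobileNet-style networks. This is a swapped-in example of one common efficiency technique, not the researchers' method, and the layer sizes are arbitrary.

```python
from typing import Tuple


def standard_conv_cost(k: int, c_in: int, c_out: int, h: int, w: int) -> Tuple[int, int]:
    """Weights and multiply-accumulates (MACs) of a k x k convolution.

    Assumes stride 1 and 'same' padding (output is h x w) and ignores biases.
    """
    params = k * k * c_in * c_out
    return params, params * h * w


def depthwise_separable_cost(k: int, c_in: int, c_out: int, h: int, w: int) -> Tuple[int, int]:
    """Same quantities for a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    return params, params * h * w


if __name__ == "__main__":
    # Arbitrary example layer: 3 x 3 kernel, 32 -> 64 channels, 56 x 56 feature map.
    std = standard_conv_cost(3, 32, 64, 56, 56)
    sep = depthwise_separable_cost(3, 32, 64, 56, 56)
    print("standard:", std)             # (18432, 57802752)
    print("separable:", sep)            # (2336, 7325696)
    print("ratio:", sep[0] / std[0])    # equals 1/c_out + 1/k**2, about 0.127
```

The cost ratio simplifies to 1/C_out + 1/k², so for 3×3 kernels the separable layer needs close to 9 times fewer weights and MACs as C_out grows (about 7.9 times in this example). Savings of this kind are what make real-time inference on wearable, battery-powered hardware plausible.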