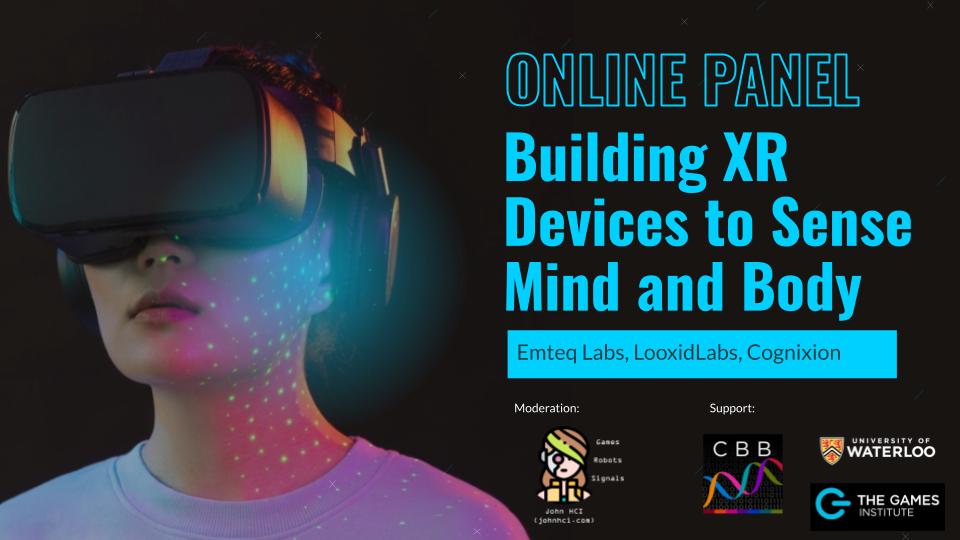

The next generation of head-mounted displays (HMDs) is set to integrate sensors that can seamlessly record the body's physiological responses. This capability opens new possibilities for extended reality (XR) technologies that better capture human emotions, psychological states and reactions to immersive virtual content. This panel explores how three companies have been embedding physiological sensors into HMDs for virtual and augmented reality in order to connect mind and body and create more natural interfaces for XR applications. This integration is enabling applications in assistive technology, affective and physiological computing, and adaptive games for health. The panel brings together three key industry players that are creating the new generation of XR HMDs, integrating sensing technologies such as eye-tracking, electroencephalography (EEG) and facial electromyography (EMG), and developing novel algorithms to interpret physiological information and create more humanized technologies.

This event will be hosted virtually via Zoom.

Moderator: Dr. John Muñoz, Post-Doctoral Fellow, Games Institute and Systems Design Engineering

Registration Link: https://uwaterloo.zoom.us/webinar/register/WN_5fXSShmfSqWD24M3bNL25w

This event is co-hosted with the Centre for Bioengineering and Biotechnology.

Speakers

Charles Nduka

Charles is a leading facial musculature expert with over 20 years of surgical experience, including 10 years as Consultant Plastic & Reconstructive Surgeon (Queen Victoria Hospital). Charles has an extensive background in research and development, including clinical trials, has over 100 scientific publications, and is the Medical Advisory Board chair for the charity Facial Palsy UK.

Brian Chae

Brian is a researcher with experience in brain-computer interfaces and human-machine interfaces. Brian holds a PhD in Computer Science from the Korea Advanced Institute of Science and Technology (KAIST) in South Korea. Brian has extensive experience integrating EEG signals in HCI applications such as teleoperation and virtual reality simulations.

Sarah Pearce

Sarah is a systems design engineer with experience in biosignal processing, embedded machine learning, software development, and user experience design for mixed-reality applications. Her work focuses on developing brain-computer interfaces for communication, control, and neurorehabilitation. She uses a mixed-methods approach to solve problems using her skills in systems engineering and user experience design.