When building trust with artificially intelligent (AI) agents, the key may be to make them display more human-like emotions. A study led by researchers from the University of Waterloo found that developing the humanness of AI machines could make them more accepted in the workforce where machine use continues to grow.

“Improving humanness of AI agents could enhance society’s perception of assistive technologies which going forward will improve people’s lives,” said Moojan Ghafurian, lead author of the study and a Postdoctoral Fellow in Waterloo’s David R. Cheriton School of Computer Science.

In the study, Ghafurian and co-authors associate professor Jesse Hoey and research assistant Neil Budnarain used a classic game called the “Prisoner’s Dilemma”. The game creates a hypothetical situation in which two prisoners are separately questioned by police for a crime they committed together. If one admits to the crime and the other doesn’t, the one who confesses gets no prison time while the other gets three years. If both confess, they both get two years. If neither confesses, each only serves one year. The more trust between the two criminal partners, the better the outcome for both.
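The payoff structure described above can be written out as a small lookup table. The following is a minimal sketch of the classic prison-sentence version of the game only, not of the gold-based version used in the study; the names `SENTENCE_YEARS`, `sentences`, and `best_response` are illustrative assumptions.

```python
# Illustrative sketch of the classic Prisoner's Dilemma as described in the article.
# All names here are hypothetical; the study itself used gold rewards, not sentences.
CONFESS, SILENT = "confess", "silent"

# (choice of prisoner A, choice of prisoner B) -> (years for A, years for B)
SENTENCE_YEARS = {
    (CONFESS, CONFESS): (2, 2),  # both confess: two years each
    (CONFESS, SILENT): (0, 3),   # only A confesses: A goes free, B gets three years
    (SILENT, CONFESS): (3, 0),   # only B confesses: B goes free, A gets three years
    (SILENT, SILENT): (1, 1),    # neither confesses: one year each
}


def sentences(choice_a, choice_b):
    """Return the (A, B) prison terms in years for a pair of choices."""
    return SENTENCE_YEARS[(choice_a, choice_b)]


def best_response(partner_choice):
    """Return the choice that minimizes a prisoner's own sentence,
    given that the partner's choice is fixed."""
    return min(
        (CONFESS, SILENT),
        key=lambda mine: sentences(mine, partner_choice)[0],
    )


if __name__ == "__main__":
    for partner in (CONFESS, SILENT):
        print(f"partner plays {partner}: best response is {best_response(partner)}")
    print("mutual silence:", sentences(SILENT, SILENT))
    print("mutual confession:", sentences(CONFESS, CONFESS))
```

With these numbers, confessing is the better individual choice whatever the partner does, yet both prisoners serve less time if they both stay silent. That gap is why the outcome depends on trust.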

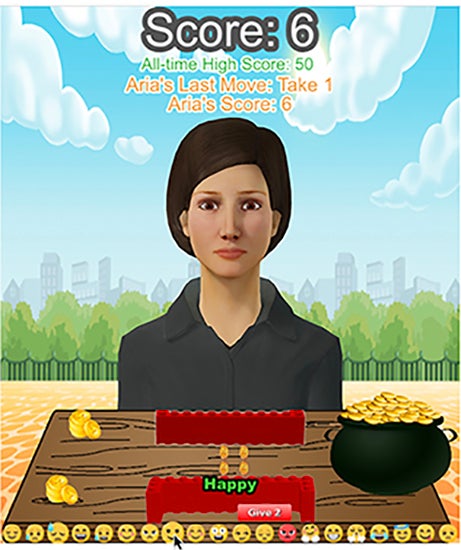

An example of the game’s setting. The two coin piles on the left show the number of coins that the participant and the virtual agent have earned so far in the game.

The study itself used an AI virtual human in place of a prisoner, and used gold as a reward to determine how the AI would act. The goal of the research participant was to gain as much gold as possible while trying to predict the virtual human’s actions. The AI model, developed by the Interactive Assistance Lab at the University of Colorado, Boulder, was designed to help people with cognitive disabilities interpret emotional signals from others.

A total of 117 participants were each randomly paired with a virtual agent and asked to rate how human-like it appeared. Researchers found that, on average, participants co-operated in the “Prisoner’s Dilemma” 20 out of 25 times when agents showed human-like emotion. In trials where the agent showed random emotions, participants co-operated 16 times out of 25. For agents that showed no emotion, co-operation happened 17 out of 25 times.
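For easier comparison, the reported averages can be converted to co-operation rates. This is a small sketch that uses only the three counts quoted above; the condition labels and the names `ROUNDS`, `COOPERATIONS`, and `cooperation_rate` are illustrative assumptions, and nothing here reproduces the study's own analysis.

```python
# Average number of co-operative rounds out of 25, as reported in the article.
# The dictionary keys are informal labels chosen for this sketch.
ROUNDS = 25
COOPERATIONS = {
    "human-like emotion": 20,
    "random emotion": 16,
    "no emotion": 17,
}


def cooperation_rate(count, rounds=ROUNDS):
    """Return the fraction of rounds in which participants co-operated."""
    return count / rounds


rates = {condition: cooperation_rate(count) for condition, count in COOPERATIONS.items()}

if __name__ == "__main__":
    for condition, rate in rates.items():
        print(f"{condition}: {rate:.0%}")
```

On these figures, the human-like condition corresponds to 80% co-operation, compared with 64% for random emotions and 68% for no emotion.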

This finding is an important step for the research team, as they hope to eventually design comfortable assistive technology for people with dementia.

The study, titled "Role of Emotions in Perception of Humanness of Virtual Agents", authored by Ghafurian, Hoey, and Budnarain, all from Waterloo’s David R. Cheriton School of Computer Science, was presented at the 18th International Conference on Autonomous Agents and Multiagent Systems (AAMAS).