Imagine travelling back to the year 2000 and telling someone that in less than two decades we will carry slim rectangular devices that are connected wirelessly to the world. The devices will have crystal-clear screens and run dozens of computer applications, from banking and financial management programs to fitness monitoring and health games, among countless others. And all of these applications will be controlled not by a keyboard or mouse but by tapping and swiping a finger across the device’s interactive display.

It might seem like a gadget from an unimaginably distant future, yet such devices exist today. They’re called smartphones, and they’re ubiquitous.

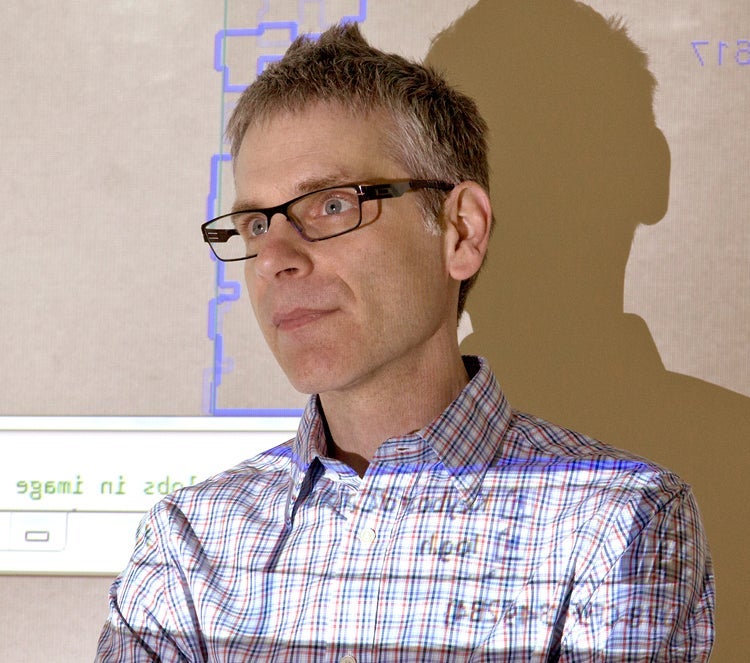

Professor Daniel Vogel wants to take human-computer interaction further by releasing the computational genie from the bottle so that it’s everywhere around us.

“There’s long been a vision for computation to be part of the fabric of our environments,” said Vogel, a member of the human-computer interaction lab, an interdisciplinary group of computer science researchers who focus on the design, evaluation and implementation of interactive computing systems.

“In a sense, computation is part of that fabric right now, but we carry it in our pockets and there’s still a clear separation between the device — the smart phone — and the environment. We’re working to embed computation, inputs and outputs seamlessly into our environments.”

As a computer scientist, Vogel has the technical background to bring such a vision to fruition, but he also studied fine arts at the Emily Carr University of Art and Design. That combination puts him in an ideal position to balance the potential of embedded computing with aesthetics.

The applications of this next paradigm of computing, in which everything might sense input from people and augment its own appearance without traditional digital displays, are manifold. Some are subtle: digital wallpaper in a living room could display recent social media activity, or the local weather forecast could be superimposed on bathroom tiles so we can decide what to wear as we get ready for work. Ordinary objects in our homes and offices could also act as input devices. A person might, for example, control the furnace or air conditioning by rotating a coffee cup on a table as though it were a thermostat, with that function mapped to the cup only momentarily while the room’s temperature is adjusted.

“Lots of systems and graphics problems need to be solved, but once information is released from computers, it’s going to be different. Not everything is going to have a traditional computer interface or even look like one,” he explained. An interface might be an antique oak desk with digital information superimposed upon it, seamlessly merging the traditional with the novel.

Aspects of this kind of interaction are already here. Some luxury cars have sensors that let drivers make simple gestures with an index finger, such as pointing, swiping or circular motions, to adjust the radio volume or to answer or dismiss an incoming call.

“Normally, you wouldn’t take a hand off the steering wheel and gesture like that in space, so within the context of driving, that gesture can be interpreted easily by a computer. And this system would be an improvement over using a touch screen on the dashboard. Such displays require the driver to look at the screen, see where his finger is, and navigate through options and menus, and while doing that the driver has taken eyes off the road.”

For Vogel, designing systems that recognize human input elegantly is essential. “The wrong approach would be to put my coffee cup on a given spot on my desk, then go to the computer, log in, launch an application, navigate through a series of menus (room manager, devices, cup, ambient temperature) and so on to adjust the temperature. A lot of the Internet of Things currently works that way. It’s not ideal, but it’s a challenge to make computers smart enough that they can understand context like people do.”

Human-computer interaction is not just about how machines sense input but also about how people view processed information: the output. “The real challenge here is how to communicate information that’s going to be scattered everywhere and how to scatter that information so it’s useful and aids productivity, instead of being distracting or ugly.”

Hot topics in human-computer interaction research in the 1980s included the design of graphical user interfaces, direct manipulation and the ideal windowing model, Vogel explained.

“These are common and familiar now, but they were new concepts back then. The same thing is happening today, but instead of graphical user interfaces we’re developing augmented reality. In a world of pixels everywhere, if it’s done right, just think of the kind of immersive and beautiful experiences we could have.”