The following is excerpted from an article by Craig Daniels, published in Communitech News on November 8, 2018.

Governments, educators and private companies all must act quickly to rein in the biases and excesses of autonomous systems driven by powerful artificial intelligence, a lunchtime symposium at CIGI heard Wednesday. The price of not acting is an existential threat to the fabric of human society.

“What this conversation comes down to for me is humans doing things to other humans,” said Donna Litt, COO and co-founder of the Waterloo-based AI startup Kiite and one of three panelists who took part in the discussion at CIGI, the Centre for International Governance Innovation, titled Responsible Artificial Intelligence.

“[It’s about] humans making decisions for other humans,” continued Litt, “and, in a number of circumstances, taking decisions away from other humans, and doing that at scale, without consent and without knowledge of the long-term implications.”

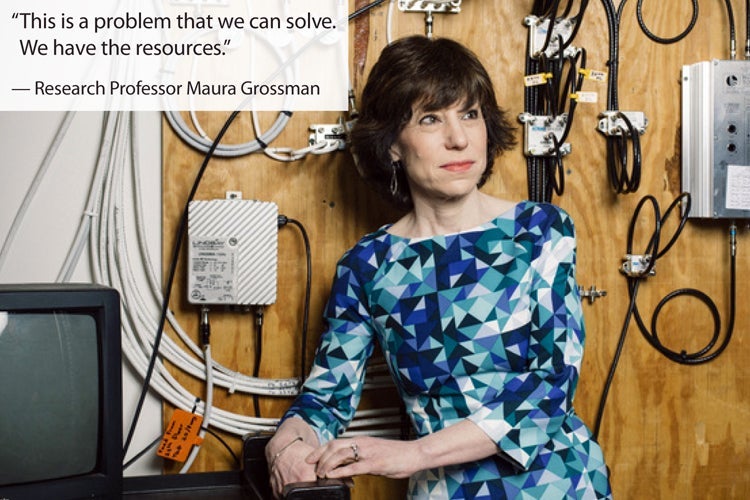

Moderated by Courtney Doagoo, a CIGI post-doctoral fellow in international law, the panel included California Polytechnic State University assistant professor of philosophy Ryan Jenkins and University of Waterloo computer science research professor and lawyer Maura Grossman.

The panelists framed the problems posed by AI, from algorithms infused with racial and gender bias to autonomous weapon systems, robots and vehicles that make decisions potentially harmful to their human masters. They then described the cost of not addressing those problems and, finally, laid out potential solutions.

Grossman, a self-described social scientist working “in a hard computer science department,” described the difficulty in getting any one group to take responsibility for addressing the ethical implications of AI.

Computer science students, she said, focus only on optimizing their algorithms. “They’re not concerned with where the data came from, whether it’s clean or biased.”

Likewise, Grossman said, the lawyers and law students who would be in a position to craft policy or pose questions about the moral or legal implications of AI “don’t understand the technology” and “they don’t know the questions to ask.”

“So both [groups] think it’s not their problem. We have to help them see it’s their problem. Because if it’s not their problem, it’s nobody’s problem, and that scares me.”