A key goal of curricular change is to align the students’ educational experiences with the program outcomes. At Waterloo, formal program reviews occur once every seven years. Between these formal review periods, other measures can be taken to evaluate the success of curricular changes and provide formative feedback to the department. In this section we share two Waterloo examples to highlight different strategies for continuous assessment of the program.

Example: Electrical and Computer Engineering undergraduate program

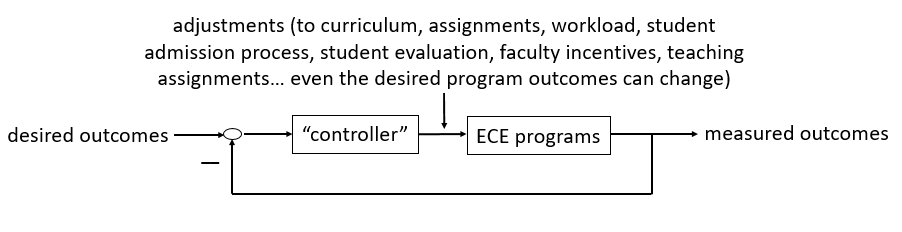

With the introduction of graduate attributes as a component of accreditation by the Canadian Engineering Accreditation Board, engineering departments at Waterloo began shifting toward outcomes-based assessment. To assess students' progress toward achieving the program outcomes, Dan Davison, Associate Chair for Undergraduate Studies in the Department of Electrical and Computer Engineering, developed the following feedback process.

As shown in Figure 1, the general process takes the desired outcomes as the system input. It then uses a feedback loop to modify elements of the curriculum based on a number of variables (e.g., assignments and workload). For more information, see the detailed diagram.

The focus of this procedure is to continually monitor students' progress toward meeting the program outcomes. This continuous feedback creates more opportunities to modify the curriculum or make other relevant changes, rather than adjusting the curriculum only in response to formal program reviews or accreditation.

This process is still in its early stages. After creating its program outcomes, the department refined them by adding a further level of detail. This level articulates measurable requirements and distinguishes the expectations for the two degrees (i.e., electrical engineering and computer engineering). The next step was to identify both the primary and secondary courses and activities that develop these outcomes. The department is now gathering data to measure progress toward the outcomes. We would like to thank Professor Davison for allowing us to share this evaluation plan.

Example: Waterloo professional development program

The Waterloo professional development (WatPD) program was created in 2006 to enhance the professional skills of Waterloo's undergraduate students participating in co-operative education. Ongoing formative assessment of the program has been a priority since its inception. Several evaluation models were considered, and the department worked closely with the Centre for the Advancement of Co-operative Education (WatCACE) to develop its evaluation plan. Because of the nature of the program and its courses, the evaluation team selected the Kirkpatrick model, which evaluates training at four levels:

- reaction (participants' initial reaction to the program, e.g., whether they liked it and how they perceived its difficulty);

- learning (what participants actually learned);

- behaviour (whether participants' behaviour changed as a result of the courses/program); and

- results (what impact followed as a result of the courses/program) (Kirkpatrick, 1998).

To gather ongoing feedback on the effectiveness of its curriculum, WatPD uses the following data sources.

| Level | Data sources |
|---|---|
| Reaction | |
| Learning | |
| Behaviour | |
| Results | |

Table 1: Data sources for program evaluation (Pretti, 2009)

The program completed a self-study, which was presented to Senate in 2009. It is a good example of the range of data that can be used to provide both formative and summative assessment of a program. While the WatPD report for Senate focused on the whole program, any of the data sources above could be used to assess a smaller curricular change. For example, if a suite of courses in a program changes, focus groups could collect data from students in the current curriculum. The same focus group questions could then be put to students after the change is made.

We would like to thank Judene Pretti and Anne-Marie Fannon of WatPD for sharing this example.