Generative artificial intelligence (GenAI) has reshaped many aspects of higher education, including how students learn and how instructors approach assessment design. This unavoidable reality presents both a challenge and an opportunity for instructors, particularly in rethinking how assessments might shift to align with changing disciplinary norms and methodologies. Most assessments will require change to some degree in the age of AI. Indeed, outside of proctored environments, students could choose to use AI at every step of an assessment regardless of what you ask. In response, instructors should be intentional about incorporating authentic assessments, accountability, and a culture of transparent AI use in their teaching. The key is to design AI-integrated tasks in which AI use is expected, openly acknowledged, and aligned with real disciplinary practices, alongside human-centered components.

The rapid pace of technological change risks sending conflicting messages to students about the responsible and ethical use of AI. Instructors can help bridge the gap by explicitly addressing the role of AI in their respective disciplines, by providing clear guidance on appropriate use, and by integrating meaningful opportunities for students to develop AI literacy in the context of assessment (see the CTE Teaching Tip: Conversations with Students about GenAI Tools for more information on AI literacy).

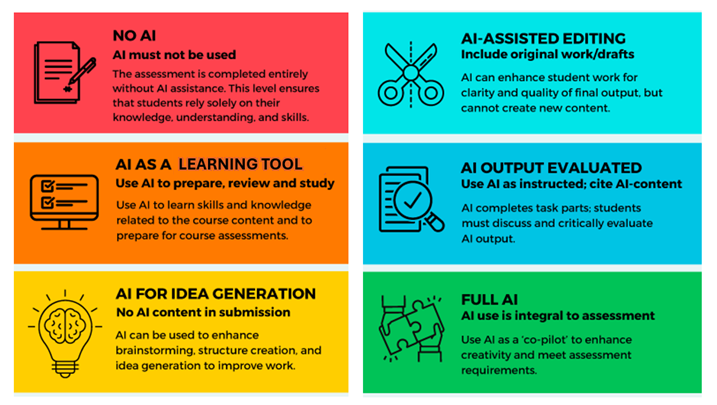

The Centre for Teaching, Learning, and Technology at the University of British Columbia has created a modified version of the AI Assessment Scale (AIAS) by Perkins et al. (2024), which can help facilitate conversations with students about GenAI tools. This framework is one of many focused on GenAI in teaching and learning. We recommend using it as a starting point for articulating acceptable uses in your context.

Modified AIAS Framework

No AI: The use of AI tools is strictly prohibited in all aspects of the assessment. This approach is designed to support the development of foundational knowledge and essential skills, ensuring that students build a strong, independent understanding of the material.

Examples: in-person written tests, oral examinations, and areas where AI currently falls short, such as mathematical proof reasoning or accurately recounting recent events.

AI as a Learning Tool: Students can use AI tools to support their learning and studying of course content. However, the use of AI is strictly prohibited during assessments. This approach supports leveraging AI for personalized learning while ensuring that assessments accurately reflect individual understanding and skills.

Examples: generating problem sets, simulating audience questions for an upcoming presentation, organizing study notes, using a chatbot to reflect on a field course experience, simulating a health care practitioner-patient interaction.

AI For Idea Generation: Students can use AI tools for brainstorming and idea generation. AI can help overcome the ‘blank page’, expand on initial ideas, and offer various perspectives or topics for further exploration. However, it should not substitute for human judgment, critical thinking, or knowledge creation. At this level, students remain fully responsible for developing, refining, and articulating their own arguments, analysis, and conclusions.

Examples: suggesting possible hypotheses for testing; brainstorming features, services, or products for a target market; helping imagine future scenarios, including risks and opportunities; connecting concepts from different disciplines or fields.

AI-Assisted Editing: Students can use AI tools to assist with editing, revising, and improving the readability of their work. These elements include support with grammar, clarity, organization, tone, and sentence structure. AI can serve as a helpful tool for refining written communication, but it should not be a replacement for the student’s own arguments, analysis, and content knowledge.

Examples: offering suggestions to adjust the tone of an email to suit a specific audience; providing feedback on grammar, sentence flow, and clarity of an initial essay draft; assisting with editing PowerPoint slides for a presentation.

AI Output Evaluated: Students can use AI tools to complete a task or generate an initial output, which then becomes the basis for further discussion, analysis, and critical evaluation. This approach emphasizes the development of higher-order thinking skills, requiring students to assess the accuracy, relevance, limitations (e.g., biases, hallucinations), and quality of AI-generated work. Students are responsible for demonstrating their understanding by critiquing and reflecting on the output.

Examples: AI provides a solution to an ethics scenario and students critically assess whether the reasoning aligns with disciplinary principles; AI conducts analyses (e.g., regression outputs) and students interpret the results, assess assumptions, and identify errors or limitations; AI provides a topic summary and students assess its factual accuracy, citations, and errors.

Full AI: Students can use AI tools to assist with completing tasks or assessments. This approach allows students to engage with AI at specified stages, or throughout the entire process: asking questions, generating drafts, refining ideas, troubleshooting problems, or exploring solutions. While AI can offer support, suggestions, and efficiencies, it should not replace the student’s own understanding, decision-making, or critical thinking. Students remain fully responsible for ensuring the accuracy, quality, and academic integrity of their final work.

Examples: using DALL-E to produce futuristic urban design visualizations (PLAN 211, Dr. Katherine Perrott); using GitHub Copilot to generate code and then test, troubleshoot, and implement it; using AI-assisted lab report writing to generate summaries or produce visualizations and results interpretations.

Considerations

Consider the following when determining the appropriate level of AI use for your assessments:

Explanation: Regardless of how much AI you choose to integrate into your course, it is important to engage your students in a conversation about the rationale behind that decision. Explaining why certain skills or knowledge should be developed with or without AI will deepen students’ understanding of your expectations and the purpose behind their learning. Remember, choosing ‘No AI’ is a valid and intentional choice!

Assessment (Re)Design: The integration of AI should be guided by a pedagogical purpose. Simply adopting technology for its own sake (or, likewise, dismissing AI entirely without any consideration) does not contribute to a meaningful learning experience; its use should support student development and align with current disciplinary practices. Furthermore, the assessment itself should change to incorporate the level of AI use. As highlighted by Corbin et al. (2025), “structural changes to assessments are [necessary] modifications that directly alter the nature, format, or mechanics of how a task must be completed... [T]hese changes reshape the underlying framework of the task, constraining or opening the student’s approach in ways that are built into the assessment itself” (pp. 6-7). While communicating your AI use expectations is important, without design changes to assessments, the AIAS simply becomes another set of instructions that students are free to follow or ignore.

Optional Use of AI: Like instructors, some students may choose not to use AI tools due to concerns related to financial barriers, privacy, security, or personal preferences. Unless the use of AI is integral to achieving the course learning outcomes, consider offering an alternative pathway to complete the assessment. This approach could include a scaffolded version of the task or another equivalent option that achieves the same learning outcomes without requiring AI use.

If you would like support applying these tips to your own teaching, CTE staff members are here to help. View the CTE Support page to find the most relevant staff member to contact.

Resources

- CTE Teaching Tip: Conversations with Students about Generative Artificial Intelligence (GenAI) Tools

- CTE Teaching Tip: Writing Intended Learning Outcomes

- Guide to Assessment in the Generative AI Era

References

- Assessment design using Generative AI. A.I. In Teaching and Learning. (n.d.).

- Corbin, T., Dawson, P., & Liu, D. (2025). Talk is cheap: why structural assessment changes are needed for a time of GenAI. Assessment & Evaluation in Higher Education, 1–11.

- Perkins, M., Furze, L., Roe, J., & MacVaugh, J. (2024). The Artificial Intelligence Assessment Scale (AIAS): A Framework for Ethical Integration of Generative AI in Educational Assessment. Journal of University Teaching and Learning Practice, 21(6).

This Creative Commons license lets others remix, tweak, and build upon our work non-commercially, as long as they credit us and indicate if changes were made. Use this citation format: Integrating Generative Artificial Intelligence (GenAI) in Assessments. Centre for Teaching Excellence, University of Waterloo.