Computer scientists developing new AI to bring natural language processing to African languages

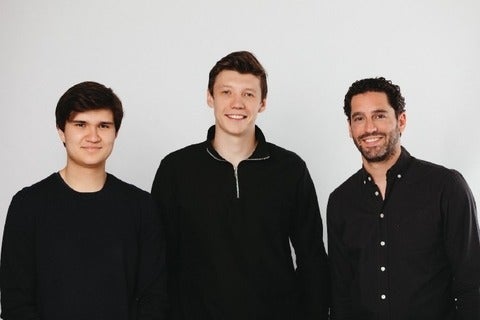

Researchers at the Cheriton School of Computer Science have developed a data-efficient, pretrained, transformer-based neural language model that analyzes eleven African languages.

Their new neural network model, which they have dubbed AfriBERTa, is based on BERT (Bidirectional Encoder Representations from Transformers), a deep learning technique for natural language processing that Google developed in 2018.